Mirror of https://github.com/wakatime/sublime-wakatime.git (synced 2023-08-10 21:13:02 +03:00)

Compare commits: 74 commits

| SHA1 |
|---|
| c90a4863e9 |
| 94343e5b07 |
| 03acea6e25 |
| 77594700bd |
| 6681409e98 |
| 8f7837269a |
| a523b3aa4d |
| 6985ce32bb |
| 4be40c7720 |
| eeb7fd8219 |
| 11fbd2d2a6 |
| 3cecd0de5d |
| c50100e675 |
| c1da94bc18 |
| 7f9d6ede9d |
| 192a5c7aa7 |
| 16bbe21be9 |
| 5ebaf12a99 |
| 1834e8978a |
| 22c8ed74bd |
| 12bbb4e561 |
| c71cb21cc1 |
| eb11b991f0 |
| 7ea51d09ba |
| b07b59e0c8 |
| 9d715e95b7 |
| 3edaed53aa |
| 865b0bcee9 |
| d440fe912c |
| 627455167f |
| aba89d3948 |
| 18d87118e1 |
| fd91b9e032 |
| 16b15773bf |
| f0b518862a |
| 7ee7de70d5 |
| fb479f8e84 |
| 7d37193f65 |
| 6bd62b95db |
| abf4a94a59 |
| 9337e3173b |
| 57fa4d4d84 |
| 9b5c59e677 |
| 71ce25a326 |
| f2f14207f5 |
| ac2ec0e73c |
| 040a76b93c |
| dab0621b97 |
| 675f9ecd69 |
| a6f92b9c74 |
| bfcc242d7e |
| 762027644f |
| 3c4ceb95fa |
| d6d8bceca0 |
| acaad2dc83 |
| 23c5801080 |
| 05a3bfbb53 |
| 8faaa3b0e3 |
| 4bcddf2a98 |
| b51ae5c2c4 |
| 5cd0061653 |
| 651c84325e |
| 89368529cb |
| f1f408284b |
| 7053932731 |
| b6c4956521 |
| 68a2557884 |
| c7ee7258fb |
| aaff2503fb |
| 00a1193bd3 |
| 2371daac1b |
| 4395db2b2d |
| fc8c61fa3f |
| aa30110343 |

HISTORY.rst (200 changed lines)

```diff
@@ -3,6 +3,206 @@ History
 -------
 
 
+5.0.1 (2015-10-06)
+++++++++++++++++++
+
+- look for python in system PATH again
+
+
+5.0.0 (2015-10-02)
+++++++++++++++++++
+
+- improve logging with levels and log function
+- switch registry warnings to debug log level
+
+
+4.0.20 (2015-10-01)
+++++++++++++++++++
+
+- correctly find python binary in non-Windows environments
+
+
+4.0.19 (2015-10-01)
+++++++++++++++++++
+
+- handle case where ST builtin python does not have _winreg or winreg module
+
+
+4.0.18 (2015-10-01)
+++++++++++++++++++
+
+- find python location from windows registry
+
+
+4.0.17 (2015-10-01)
+++++++++++++++++++
+
+- download python in non-blocking background thread for Windows machines
+
+
+4.0.16 (2015-09-29)
+++++++++++++++++++
+
+- upgrade wakatime cli to v4.1.8
+- fix bug in guess_language function
+- improve dependency detection
+- default request timeout of 30 seconds
+- new --timeout command line argument to change request timeout in seconds
+- allow passing command line arguments using sys.argv
+- fix entry point for pypi distribution
+- new --entity and --entitytype command line arguments
+
+
+4.0.15 (2015-08-28)
+++++++++++++++++++
+
+- upgrade wakatime cli to v4.1.3
+- fix local session caching
+
+
+4.0.14 (2015-08-25)
+++++++++++++++++++
+
+- upgrade wakatime cli to v4.1.2
+- fix bug in offline caching which prevented heartbeats from being cleaned up
+
+
+4.0.13 (2015-08-25)
+++++++++++++++++++
+
+- upgrade wakatime cli to v4.1.1
+- send hostname in X-Machine-Name header
+- catch exceptions from pygments.modeline.get_filetype_from_buffer
+- upgrade requests package to v2.7.0
+- handle non-ASCII characters in import path on Windows, won't fix for Python2
+- upgrade argparse to v1.3.0
+- move language translations to api server
+- move extension rules to api server
+- detect correct header file language based on presence of .cpp or .c files named the same as the .h file
+
+
+4.0.12 (2015-07-31)
+++++++++++++++++++
+
+- correctly use urllib in Python3
+
+
+4.0.11 (2015-07-31)
+++++++++++++++++++
+
+- install python if missing on Windows OS
+
+
+4.0.10 (2015-07-31)
+++++++++++++++++++
+
+- downgrade requests library to v2.6.0
+
+
+4.0.9 (2015-07-29)
+++++++++++++++++++
+
+- catch exceptions from pygments.modeline.get_filetype_from_buffer
+
+
+4.0.8 (2015-06-23)
+++++++++++++++++++
+
+- fix offline logging
+- limit language detection to known file extensions, unless file contents has a vim modeline
+- upgrade wakatime cli to v4.0.16
+
+
+4.0.7 (2015-06-21)
+++++++++++++++++++
+
+- allow customizing status bar message in sublime-settings file
+- guess language using multiple methods, then use most accurate guess
+- use entity and type for new heartbeats api resource schema
+- correctly log message from py.warnings module
+- upgrade wakatime cli to v4.0.15
+
+
+4.0.6 (2015-05-16)
+++++++++++++++++++
+
+- fix bug with auto detecting project name
+- upgrade wakatime cli to v4.0.13
+
+
+4.0.5 (2015-05-15)
+++++++++++++++++++
+
+- correctly display caller and lineno in log file when debug is true
+- project passed with --project argument will always be used
+- new --alternate-project argument
+- upgrade wakatime cli to v4.0.12
+
+
+4.0.4 (2015-05-12)
+++++++++++++++++++
+
+- reuse SSL connection over multiple processes for improved performance
+- upgrade wakatime cli to v4.0.11
+
+
+4.0.3 (2015-05-06)
+++++++++++++++++++
+
+- send cursorpos to wakatime cli
+- upgrade wakatime cli to v4.0.10
+
+
+4.0.2 (2015-05-06)
+++++++++++++++++++
+
+- only send heartbeats for the currently active buffer
+
+
+4.0.1 (2015-05-06)
+++++++++++++++++++
+
+- ignore git temporary files
+- don't send two write heartbeats within 2 seconds of each other
+
+
+4.0.0 (2015-04-12)
+++++++++++++++++++
+
+- listen for selection modified instead of buffer activated for better performance
+
+
+3.0.19 (2015-04-07)
++++++++++++++++++++
+
+- fix bug in project detection when folder not found
+
+
+3.0.18 (2015-04-04)
++++++++++++++++++++
+
+- upgrade wakatime cli to v4.0.8
+- added api_url config option to .wakatime.cfg file
+
+
+3.0.17 (2015-04-02)
++++++++++++++++++++
+
+- use open folder as current project when not using revision control
+
+
+3.0.16 (2015-04-02)
++++++++++++++++++++
+
+- copy list when obfuscating api key so original command is not modified
+
+
+3.0.15 (2015-04-01)
++++++++++++++++++++
+
+- obfuscate api key when logging to Sublime Text Console in debug mode
+
+
 3.0.14 (2015-03-31)
 +++++++++++++++++++
 
```

16

README.md

16

README.md

@ -1,7 +1,7 @@

|

||||

sublime-wakatime

|

||||

================

|

||||

|

||||

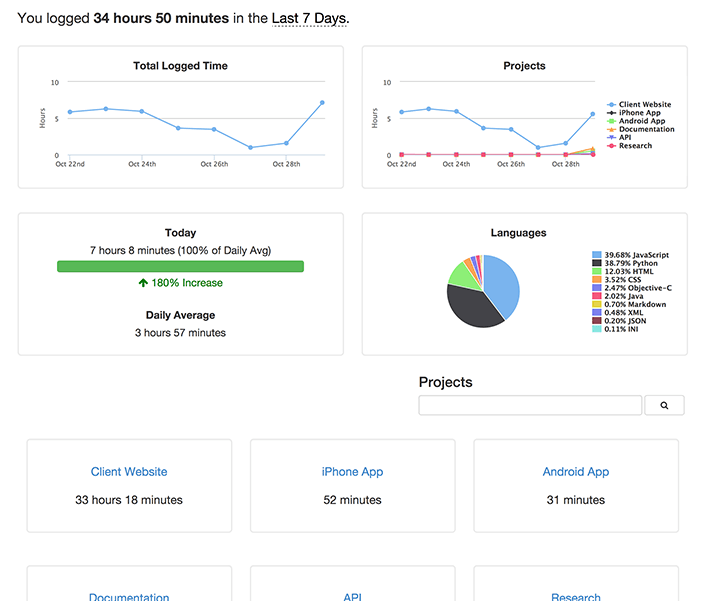

Fully automatic time tracking for Sublime Text 2 & 3.

|

||||

Sublime Text 2 & 3 plugin to quantify your coding using https://wakatime.com/.

|

||||

|

||||

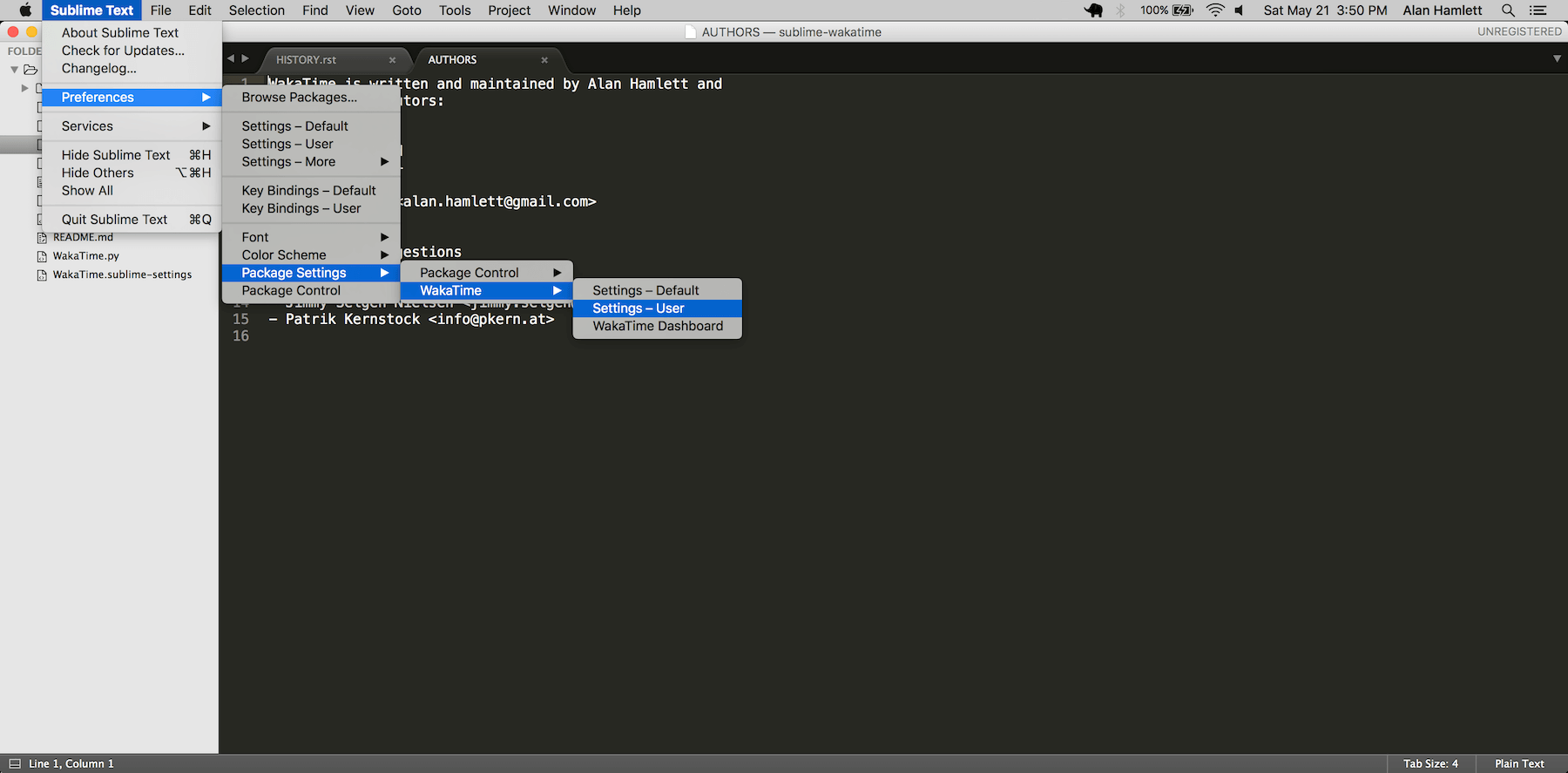

Installation

|

||||

------------

|

||||

```diff
@@ -18,7 +18,7 @@ Heads Up! For Sublime Text 2 on Windows & Linux, WakaTime depends on [Python](ht
 
 c) Type `wakatime`, then press `enter` with the `WakaTime` plugin selected.
 
-3. Enter your [api key](https://wakatime.com/settings#apikey) from https://wakatime.com/settings#apikey, then press `enter`.
+3. Enter your [api key](https://wakatime.com/settings#apikey), then press `enter`.
 
 4. Use Sublime and your time will be tracked for you automatically.
 
```

```diff
@@ -29,3 +29,15 @@ Screen Shots
 
 
 
+Troubleshooting
+---------------
+
+First, turn on debug mode in your `WakaTime.sublime-settings` file.
+
+
+
+Add the line: `"debug": true`
+
+Then, open your Sublime Console with `View -> Show Console` to see the plugin executing the wakatime cli process when sending a heartbeat. Also, tail your `$HOME/.wakatime.log` file to debug wakatime cli problems.
+
+For more general troubleshooting information, see [wakatime/wakatime#troubleshooting](https://github.com/wakatime/wakatime#troubleshooting).
```
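The troubleshooting steps rely on a Unix `tail` to watch `$HOME/.wakatime.log`. On systems without it, a short Python script can show the end of that log instead. This is a minimal sketch. The helper name `tail_lines` and the 20-line default are illustrative assumptions and are not part of the plugin or of wakatime cli. Unlike `tail -f`, it does not keep following the file.

```python
import os
from collections import deque


def tail_lines(path, count=20):
    """Return the last `count` lines of a text file, without trailing newlines."""
    # A deque with maxlen keeps only the newest `count` lines while reading.
    with open(path, "r", encoding="utf-8", errors="replace") as fh:
        return [line.rstrip("\n") for line in deque(fh, maxlen=count)]


if __name__ == "__main__":
    log_path = os.path.join(os.path.expanduser("~"), ".wakatime.log")
    if os.path.exists(log_path):
        print("\n".join(tail_lines(log_path)))
    else:
        print("No log file at " + log_path)
```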

WakaTime.py (315 changed lines)

```diff
@@ -7,31 +7,39 @@ Website: https://wakatime.com/
 ==========================================================="""
 
 
-__version__ = '3.0.14'
+__version__ = '5.0.1'
 
 
 import sublime
 import sublime_plugin
 
-import glob
 import os
 import platform
+import re
 import sys
 import time
 import threading
+import urllib
 import webbrowser
 from datetime import datetime
 from subprocess import Popen
+try:
+    import _winreg as winreg  # py2
+except ImportError:
+    try:
+        import winreg  # py3
+    except ImportError:
+        winreg = None
 
 
 # globals
-ACTION_FREQUENCY = 2
+HEARTBEAT_FREQUENCY = 2
 ST_VERSION = int(sublime.version())
 PLUGIN_DIR = os.path.dirname(os.path.realpath(__file__))
 API_CLIENT = os.path.join(PLUGIN_DIR, 'packages', 'wakatime', 'cli.py')
 SETTINGS_FILE = 'WakaTime.sublime-settings'
 SETTINGS = {}
-LAST_ACTION = {
+LAST_HEARTBEAT = {
     'time': 0,
     'file': None,
     'is_write': False,
```

```diff
@@ -40,6 +48,13 @@ LOCK = threading.RLock()
 PYTHON_LOCATION = None
 
 
+# Log Levels
+DEBUG = 'DEBUG'
+INFO = 'INFO'
+WARNING = 'WARNING'
+ERROR = 'ERROR'
+
+
 # add wakatime package to path
 sys.path.insert(0, os.path.join(PLUGIN_DIR, 'packages'))
 try:
```

```diff
@@ -48,6 +63,20 @@ except ImportError:
     pass
 
 
+def log(lvl, message, *args, **kwargs):
+    try:
+        if lvl == DEBUG and not SETTINGS.get('debug'):
+            return
+        msg = message
+        if len(args) > 0:
+            msg = message.format(*args)
+        elif len(kwargs) > 0:
+            msg = message.format(**kwargs)
+        print('[WakaTime] [{lvl}] {msg}'.format(lvl=lvl, msg=msg))
+    except RuntimeError:
+        sublime.set_timeout(lambda: log(lvl, message, *args, **kwargs), 0)
+
+
 def createConfigFile():
     """Creates the .wakatime.cfg INI file in $HOME directory, if it does
     not already exist.
```
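The new `log()` helper drops DEBUG messages unless `debug` is enabled. It applies `str.format` with positional arguments or, if there are none, keyword arguments, and then prefixes the level. The sketch below reproduces only that formatting so it can run outside Sublime Text. Two things in it are assumptions for illustration: the name `format_log_line`, and the `debug` keyword standing in for `SETTINGS.get('debug')`.

```python
DEBUG = 'DEBUG'
INFO = 'INFO'
ERROR = 'ERROR'


def format_log_line(lvl, message, *args, debug=False, **kwargs):
    """Build the console line that log() would print, or None if suppressed.

    `debug` stands in for SETTINGS.get('debug') so the sketch runs without
    Sublime Text.
    """
    if lvl == DEBUG and not debug:
        return None  # DEBUG output is dropped unless debug mode is on
    msg = message
    if args:
        msg = message.format(*args)  # positional arguments take precedence
    elif kwargs:
        msg = message.format(**kwargs)
    return '[WakaTime] [{lvl}] {msg}'.format(lvl=lvl, msg=msg)


print(format_log_line(INFO, 'Initializing WakaTime plugin v{0}', '5.0.1'))
```

The original also catches `RuntimeError` and retries through `sublime.set_timeout(..., 0)`. This is presumably because Sublime Text 2 only allows API calls such as reading settings on the main thread. That retry path is left out here.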

```diff
@@ -92,66 +121,196 @@ def prompt_api_key():
         window.show_input_panel('[WakaTime] Enter your wakatime.com api key:', default_key, got_key, None, None)
         return True
     else:
-        print('[WakaTime] Error: Could not prompt for api key because no window found.')
+        log(ERROR, 'Could not prompt for api key because no window found.')
         return False
 
 
 def python_binary():
-    global PYTHON_LOCATION
     if PYTHON_LOCATION is not None:
         return PYTHON_LOCATION
+
+    # look for python in PATH and common install locations
     paths = [
-        "pythonw",
-        "python",
-        "/usr/local/bin/python",
-        "/usr/bin/python",
+        None,
+        '/',
+        '/usr/local/bin/',
+        '/usr/bin/',
     ]
     for path in paths:
-        try:
-            Popen([path, '--version'])
-            PYTHON_LOCATION = path
+        path = find_python_in_folder(path)
+        if path is not None:
+            set_python_binary_location(path)
             return path
-        except:
-            pass
-    for path in glob.iglob('/python*'):
-        path = os.path.realpath(os.path.join(path, 'pythonw'))
-        try:
-            Popen([path, '--version'])
-            PYTHON_LOCATION = path
-            return path
-        except:
-            pass
+
+    # look for python in windows registry
+    path = find_python_from_registry(r'SOFTWARE\Python\PythonCore')
+    if path is not None:
+        set_python_binary_location(path)
+        return path
+    path = find_python_from_registry(r'SOFTWARE\Wow6432Node\Python\PythonCore')
+    if path is not None:
+        set_python_binary_location(path)
+        return path
+
     return None
 
 
```
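The new `python_binary()` no longer hard-codes interpreter paths. Instead it asks `find_python_in_folder()` to try `pythonw` and then `python` in each candidate folder, where `None` stands for whatever is on `PATH`. Below is a hedged, testable sketch of that search order. The function names and the injectable `runs` probe are hypothetical. The default probe only approximates the plugin's `Popen([path, '--version'])` check.

```python
import os
import subprocess


def can_run(path):
    """Probe an interpreter roughly as the plugin does: try to start `<path> --version`."""
    try:
        # Unlike the plugin, wait for the process and silence its output.
        subprocess.Popen([path, '--version'],
                         stdout=subprocess.DEVNULL,
                         stderr=subprocess.DEVNULL).wait()
        return True
    except OSError:  # e.g. FileNotFoundError when the executable is missing
        return False


def find_python(folders, runs=can_run, names=('pythonw', 'python')):
    """Return the first runnable interpreter, trying `pythonw` before `python`.

    A folder of None means "rely on PATH", mirroring find_python_in_folder().
    """
    for folder in folders:
        for name in names:
            if folder is None:
                path = name
            else:
                path = os.path.realpath(os.path.join(folder, name))
            if runs(path):
                return path
    return None


SEARCH_FOLDERS = [None, '/', '/usr/local/bin/', '/usr/bin/']  # order used by python_binary()

if __name__ == '__main__':
    print(find_python(SEARCH_FOLDERS))
```

Waiting on the probe and discarding its output are deliberate changes. The plugin only checks that the process can be started.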

```diff
-def enough_time_passed(now, last_time):
-    if now - last_time > ACTION_FREQUENCY * 60:
-        return True
-    return False
+def set_python_binary_location(path):
+    global PYTHON_LOCATION
+    PYTHON_LOCATION = path
+    log(DEBUG, 'Python Binary Found: {0}'.format(path))
 
 
-def find_project_name_from_folders(folders):
+def find_python_from_registry(location, reg=None):
+    if platform.system() != 'Windows' or winreg is None:
+        return None
+
+    if reg is None:
+        path = find_python_from_registry(location, reg=winreg.HKEY_CURRENT_USER)
+        if path is None:
+            path = find_python_from_registry(location, reg=winreg.HKEY_LOCAL_MACHINE)
+        return path
+
+    val = None
+    sub_key = 'InstallPath'
+    compiled = re.compile(r'^\d+\.\d+$')
+
     try:
-        for folder in folders:
-            for file_name in os.listdir(folder):
-                if file_name.endswith('.sublime-project'):
-                    return file_name.replace('.sublime-project', '', 1)
+        with winreg.OpenKey(reg, location) as handle:
+            versions = []
+            try:
+                for index in range(1024):
+                    version = winreg.EnumKey(handle, index)
+                    try:
+                        if compiled.search(version):
+                            versions.append(version)
+                    except re.error:
+                        pass
+            except EnvironmentError:
+                pass
+            versions.sort(reverse=True)
+            for version in versions:
+                try:
+                    path = winreg.QueryValue(handle, version + '\\' + sub_key)
+                    if path is not None:
+                        path = find_python_in_folder(path)
+                        if path is not None:
+                            log(DEBUG, 'Found python from {reg}\\{key}\\{version}\\{sub_key}.'.format(
+                                reg=reg,
+                                key=location,
+                                version=version,
+                                sub_key=sub_key,
+                            ))
+                            return path
+                except WindowsError:
+                    log(DEBUG, 'Could not read registry value "{reg}\\{key}\\{version}\\{sub_key}".'.format(
+                        reg=reg,
+                        key=location,
+                        version=version,
+                        sub_key=sub_key,
+                    ))
+    except WindowsError:
+        if SETTINGS.get('debug'):
+            log(DEBUG, 'Could not read registry value "{reg}\\{key}".'.format(
+                reg=reg,
+                key=location,
+            ))
+
+    return val
+
+
```
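`find_python_from_registry()` collects subkey names matching `^\d+\.\d+$` and orders them with `versions.sort(reverse=True)`. That is a string sort, so a two-digit minor version ranks below a one-digit one: '3.4' sorts ahead of '3.10'. This was harmless when the change was made, but a numeric sort key avoids it. The sketch below is a possible alternative and not what the plugin does. `newest_first` is a hypothetical name, and a suffixed name such as `3.5-32` is included only to show that the regex filters it out.

```python
import re

VERSION_RE = re.compile(r'^\d+\.\d+$')  # same filter as find_python_from_registry()


def newest_first(subkeys):
    """Keep 'major.minor' names and order them by numeric version, newest first."""
    versions = [name for name in subkeys if VERSION_RE.search(name)]
    return sorted(versions,
                  key=lambda name: tuple(int(part) for part in name.split('.')),
                  reverse=True)


print(newest_first(['2.7', '3.4', '3.10', '3.5-32']))
```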

```diff
+def find_python_in_folder(folder):
+    path = 'pythonw'
+    if folder is not None:
+        path = os.path.realpath(os.path.join(folder, 'pythonw'))
+    try:
+        Popen([path, '--version'])
+        return path
+    except:
+        pass
+    path = 'python'
+    if folder is not None:
+        path = os.path.realpath(os.path.join(folder, 'python'))
+    try:
+        Popen([path, '--version'])
+        return path
     except:
         pass
     return None
 
 
-def handle_action(view, is_write=False):
+def obfuscate_apikey(command_list):
+    cmd = list(command_list)
+    apikey_index = None
+    for num in range(len(cmd)):
+        if cmd[num] == '--key':
+            apikey_index = num + 1
+            break
+    if apikey_index is not None and apikey_index < len(cmd):
+        cmd[apikey_index] = 'XXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXX' + cmd[apikey_index][-4:]
+    return cmd
+
+
```
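`obfuscate_apikey()` copies the command before masking it. As a result, only the logged string hides the key, while the spawned process still receives the real one (the 3.0.16 changelog entry). Below is an equivalent sketch that uses `list.index()` instead of the manual loop. Like the original, it masks only the first `--key` value and keeps its last four characters. The sample key is made up.

```python
MASK = 'XXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXX'  # same prefix the plugin uses


def obfuscate_apikey(command_list):
    """Return a copy of command_list with the value after --key masked."""
    cmd = list(command_list)  # copy: the real command keeps the real key
    try:
        index = cmd.index('--key') + 1
    except ValueError:  # no --key flag in the command
        return cmd
    if index < len(cmd):
        cmd[index] = MASK + cmd[index][-4:]  # keep the last 4 characters
    return cmd


command = ['python', 'cli.py', '--key', '01234567-89ab-cdef-0123-456789abcdef', '--write']
print(' '.join(obfuscate_apikey(command)))
```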

```diff
+def enough_time_passed(now, last_heartbeat, is_write):
+    if now - last_heartbeat['time'] > HEARTBEAT_FREQUENCY * 60:
+        return True
+    if is_write and now - last_heartbeat['time'] > 2:
+        return True
+    return False
+
+
```
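Combined with the file-change check in `SendHeartbeatThread.run()`, `enough_time_passed()` sends a heartbeat in three cases: the file changes, more than `HEARTBEAT_FREQUENCY` minutes have elapsed, or, for saves, more than 2 seconds have passed (the 4.0.1 rule). Below is a Sublime-free sketch of that decision. `should_send` is a hypothetical wrapper, and the timestamps are arbitrary seconds.

```python
HEARTBEAT_FREQUENCY = 2  # minutes, same constant as the plugin


def enough_time_passed(now, last_heartbeat, is_write):
    """Same rule as the plugin: > 2 minutes in general, > 2 seconds for saves."""
    if now - last_heartbeat['time'] > HEARTBEAT_FREQUENCY * 60:
        return True
    if is_write and now - last_heartbeat['time'] > 2:
        return True
    return False


def should_send(now, target_file, is_write, last_heartbeat, force=False):
    """Hypothetical wrapper mirroring the condition in SendHeartbeatThread.run()."""
    return (force
            or target_file != last_heartbeat['file']
            or enough_time_passed(now, last_heartbeat, is_write))


last = {'time': 1000.0, 'file': '/src/app.py', 'is_write': False}
print(should_send(1030.0, '/src/app.py', False, last))
```

Unlike the old `LAST_ACTION` check, the new condition stores `last_heartbeat['is_write']` but does not consult it.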

```diff
+def find_folder_containing_file(folders, current_file):
+    """Returns absolute path to folder containing the file.
+    """
+
+    parent_folder = None
+
+    current_folder = current_file
+    while True:
+        for folder in folders:
+            if os.path.realpath(os.path.dirname(current_folder)) == os.path.realpath(folder):
+                parent_folder = folder
+                break
+        if parent_folder is not None:
+            break
+        if not current_folder or os.path.dirname(current_folder) == current_folder:
+            break
+        current_folder = os.path.dirname(current_folder)
+
+    return parent_folder
+
+
+def find_project_from_folders(folders, current_file):
+    """Find project name from open folders.
+    """
+
+    folder = find_folder_containing_file(folders, current_file)
+    return os.path.basename(folder) if folder else None
+
+
```
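`find_project_from_folders()` walks up from the current file until a parent directory matches one of the window's open folders, then uses that folder's base name as the project. Below is a compact, hedged sketch of the same walk. It deviates slightly from the original loop in one case: for an empty or missing path it returns None instead of evaluating or failing. The folder paths are made-up examples.

```python
import os


def find_folder_containing_file(folders, current_file):
    """Return the open folder that is an ancestor of current_file, or None."""
    current = current_file
    while current:
        parent = os.path.dirname(current)
        for folder in folders:
            if os.path.realpath(parent) == os.path.realpath(folder):
                return folder
        if parent == current:  # reached the filesystem root
            return None
        current = parent
    return None


def find_project_from_folders(folders, current_file):
    """Use the matching folder's base name as the project name."""
    folder = find_folder_containing_file(folders, current_file)
    return os.path.basename(folder) if folder else None


open_folders = ['/home/dev/projects/site', '/home/dev/projects/api']
print(find_project_from_folders(open_folders, '/home/dev/projects/site/src/app.py'))
```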

```diff
+def is_view_active(view):
+    if view:
+        active_window = sublime.active_window()
+        if active_window:
+            active_view = active_window.active_view()
+            if active_view:
+                return active_view.buffer_id() == view.buffer_id()
+    return False
+
+
+def handle_heartbeat(view, is_write=False):
     window = view.window()
     if window is not None:
         target_file = view.file_name()
-        project = window.project_file_name() if hasattr(window, 'project_file_name') else None
+        project = window.project_data() if hasattr(window, 'project_data') else None
         folders = window.folders()
-        thread = SendActionThread(target_file, view, is_write=is_write, project=project, folders=folders)
+        thread = SendHeartbeatThread(target_file, view, is_write=is_write, project=project, folders=folders)
         thread.start()
 
 
-class SendActionThread(threading.Thread):
+class SendHeartbeatThread(threading.Thread):
     """Non-blocking thread for sending heartbeats to api.
     """
 
     def __init__(self, target_file, view, is_write=False, project=None, folders=None, force=False):
         threading.Thread.__init__(self)
```

```diff
@@ -164,19 +323,20 @@ class SendActionThread(threading.Thread):
         self.debug = SETTINGS.get('debug')
         self.api_key = SETTINGS.get('api_key', '')
         self.ignore = SETTINGS.get('ignore', [])
-        self.last_action = LAST_ACTION.copy()
+        self.last_heartbeat = LAST_HEARTBEAT.copy()
+        self.cursorpos = view.sel()[0].begin() if view.sel() else None
         self.view = view
 
     def run(self):
         with self.lock:
             if self.target_file:
                 self.timestamp = time.time()
-                if self.force or (self.is_write and not self.last_action['is_write']) or self.target_file != self.last_action['file'] or enough_time_passed(self.timestamp, self.last_action['time']):
+                if self.force or self.target_file != self.last_heartbeat['file'] or enough_time_passed(self.timestamp, self.last_heartbeat, self.is_write):
                     self.send_heartbeat()
 
     def send_heartbeat(self):
         if not self.api_key:
-            print('[WakaTime] Error: missing api key.')
+            log(ERROR, 'missing api key.')
             return
         ua = 'sublime/%d sublime-wakatime/%s' % (ST_VERSION, __version__)
         cmd = [
```

```diff
@@ -188,22 +348,21 @@ class SendActionThread(threading.Thread):
         ]
         if self.is_write:
             cmd.append('--write')
-        if self.project:
-            self.project = os.path.basename(self.project).replace('.sublime-project', '', 1)
-        if self.project:
-            cmd.extend(['--project', self.project])
+        if self.project and self.project.get('name'):
+            cmd.extend(['--alternate-project', self.project.get('name')])
         elif self.folders:
-            project_name = find_project_name_from_folders(self.folders)
+            project_name = find_project_from_folders(self.folders, self.target_file)
             if project_name:
-                cmd.extend(['--project', project_name])
+                cmd.extend(['--alternate-project', project_name])
+        if self.cursorpos is not None:
+            cmd.extend(['--cursorpos', '{0}'.format(self.cursorpos)])
         for pattern in self.ignore:
             cmd.extend(['--ignore', pattern])
         if self.debug:
             cmd.append('--verbose')
         if python_binary():
             cmd.insert(0, python_binary())
             if self.debug:
-                print('[WakaTime] %s' % ' '.join(cmd))
+                log(DEBUG, ' '.join(obfuscate_apikey(cmd)))
             if platform.system() == 'Windows':
                 Popen(cmd, shell=False)
             else:
```

```diff
@@ -211,34 +370,64 @@ class SendActionThread(threading.Thread):
                     Popen(cmd, stderr=stderr)
             self.sent()
         else:
-            print('[WakaTime] Error: Unable to find python binary.')
+            log(ERROR, 'Unable to find python binary.')
 
     def sent(self):
         sublime.set_timeout(self.set_status_bar, 0)
-        sublime.set_timeout(self.set_last_action, 0)
+        sublime.set_timeout(self.set_last_heartbeat, 0)
 
     def set_status_bar(self):
         if SETTINGS.get('status_bar_message'):
-            self.view.set_status('wakatime', 'WakaTime active {0}'.format(datetime.now().strftime('%I:%M %p')))
+            self.view.set_status('wakatime', datetime.now().strftime(SETTINGS.get('status_bar_message_fmt')))
 
-    def set_last_action(self):
-        global LAST_ACTION
-        LAST_ACTION = {
+    def set_last_heartbeat(self):
+        global LAST_HEARTBEAT
+        LAST_HEARTBEAT = {
             'file': self.target_file,
             'time': self.timestamp,
             'is_write': self.is_write,
         }
 
 
+class InstallPython(threading.Thread):
+    """Non-blocking thread for installing Python on Windows machines.
+    """
+
+    def run(self):
+        log(INFO, 'Downloading and installing python...')
+        url = 'https://www.python.org/ftp/python/3.4.3/python-3.4.3.msi'
+        if platform.architecture()[0] == '64bit':
+            url = 'https://www.python.org/ftp/python/3.4.3/python-3.4.3.amd64.msi'
+        python_msi = os.path.join(os.path.expanduser('~'), 'python.msi')
+        try:
+            urllib.urlretrieve(url, python_msi)
+        except AttributeError:
+            urllib.request.urlretrieve(url, python_msi)
+        args = [
+            'msiexec',
+            '/i',
+            python_msi,
+            '/norestart',
+            '/qb!',
+        ]
+        Popen(args)
+
+
 def plugin_loaded():
     global SETTINGS
-    print('[WakaTime] Initializing WakaTime plugin v%s' % __version__)
-
-    if not python_binary():
-        sublime.error_message("Unable to find Python binary!\nWakaTime needs Python to work correctly.\n\nGo to https://www.python.org/downloads")
-        return
+    log(INFO, 'Initializing WakaTime plugin v%s' % __version__)
 
     SETTINGS = sublime.load_settings(SETTINGS_FILE)
 
+    if not python_binary():
+        log(WARNING, 'Python binary not found.')
+        if platform.system() == 'Windows':
+            thread = InstallPython()
+            thread.start()
+        else:
+            sublime.error_message("Unable to find Python binary!\nWakaTime needs Python to work correctly.\n\nGo to https://www.python.org/downloads")
+            return
+
     after_loaded()
 
 
```
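`set_status_bar()` now passes the `status_bar_message_fmt` setting straight to `datetime.strftime()`, so any strftime directives in the setting are expanded. Below is a sketch of that rendering with a fixed time. The function name is hypothetical. The fallback to the default format when the setting is empty is an added assumption: if the setting were ever missing, `strftime(None)` would raise `TypeError`.

```python
from datetime import datetime

DEFAULT_FMT = 'WakaTime active %I:%M %p'  # default value in the settings diff


def status_bar_text(fmt=None, now=None):
    """Render the status bar text the way set_status_bar() does."""
    now = now or datetime.now()
    # Assumption: fall back to the default instead of calling strftime(None).
    return now.strftime(fmt or DEFAULT_FMT)


print(status_bar_text(now=datetime(2015, 10, 6, 14, 5)))
```

`%p` follows the C library's locale, so it may not render as AM/PM on every system.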

```diff
@@ -255,13 +444,15 @@ if ST_VERSION < 3000:
 class WakatimeListener(sublime_plugin.EventListener):
 
     def on_post_save(self, view):
-        handle_action(view, is_write=True)
+        handle_heartbeat(view, is_write=True)
 
-    def on_activated(self, view):
-        handle_action(view)
+    def on_selection_modified(self, view):
+        if is_view_active(view):
+            handle_heartbeat(view)
 
     def on_modified(self, view):
-        handle_action(view)
+        if is_view_active(view):
+            handle_heartbeat(view)
 
 
 class WakatimeDashboardCommand(sublime_plugin.ApplicationCommand):
```

```diff
@@ -9,12 +9,15 @@
 
     // Ignore files; Files (including absolute paths) that match one of these
     // POSIX regular expressions will not be logged.
-    "ignore": ["^/tmp/", "^/etc/", "^/var/"],
+    "ignore": ["^/tmp/", "^/etc/", "^/var/", "COMMIT_EDITMSG$", "PULLREQ_EDITMSG$", "MERGE_MSG$", "TAG_EDITMSG$"],
 
     // Debug mode. Set to true for verbose logging. Defaults to false.
     "debug": false,
 
     // Status bar message. Set to false to hide status bar message.
     // Defaults to true.
-    "status_bar_message": true
+    "status_bar_message": true,
+
+    // Status bar message format.
+    "status_bar_message_fmt": "WakaTime active %I:%M %p"
 }
```
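The default `ignore` list now also covers Git's temporary message files, matching the 4.0.1 changelog entry. This diff does not show exactly how wakatime cli applies these patterns. The sketch below assumes a Python `re.search` against the full file path. These particular patterns behave the same under POSIX and Python regex syntax, which is enough to see which paths the defaults would catch. `is_ignored` is a hypothetical helper.

```python
import re

# Default patterns after this change (copied from the settings hunk).
IGNORE = ["^/tmp/", "^/etc/", "^/var/", "COMMIT_EDITMSG$",
          "PULLREQ_EDITMSG$", "MERGE_MSG$", "TAG_EDITMSG$"]


def is_ignored(path, patterns=IGNORE):
    """Return True if any pattern matches somewhere in the path (assumed semantics)."""
    return any(re.search(pattern, path) for pattern in patterns)


for example in ['/home/dev/site/.git/COMMIT_EDITMSG', '/home/dev/site/app.py']:
    print(example, is_ignored(example))
```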

```diff
@@ -1,7 +1,7 @@
 __title__ = 'wakatime'
 __description__ = 'Common interface to the WakaTime api.'
 __url__ = 'https://github.com/wakatime/wakatime'
-__version_info__ = ('4', '0', '6')
+__version_info__ = ('4', '1', '8')
 __version__ = '.'.join(__version_info__)
 __author__ = 'Alan Hamlett'
 __author_email__ = 'alan@wakatime.com'
```

```diff
@@ -14,4 +14,4 @@
 __all__ = ['main']
 
 
-from .base import main
+from .main import execute
```

```diff
@@ -11,8 +11,25 @@
 
 import os
 import sys
-sys.path.insert(0, os.path.dirname(os.path.dirname(os.path.abspath(__file__))))
-import wakatime
+
+# get path to local wakatime package
+package_folder = os.path.dirname(os.path.dirname(os.path.abspath(__file__)))
+
+# add local wakatime package to sys.path
+sys.path.insert(0, package_folder)
+
+# import local wakatime package
+try:
+    import wakatime
+except (TypeError, ImportError):
+    # on Windows, non-ASCII characters in import path can be fixed using
+    # the script path from sys.argv[0].
+    # More info at https://github.com/wakatime/wakatime/issues/32
+    package_folder = os.path.dirname(os.path.dirname(os.path.abspath(sys.argv[0])))
+    sys.path.insert(0, package_folder)
+    import wakatime
 
 
 if __name__ == '__main__':
-    sys.exit(wakatime.main(sys.argv))
+    sys.exit(wakatime.execute(sys.argv[1:]))
```

```diff
@@ -17,32 +17,49 @@ is_py2 = (sys.version_info[0] == 2)
 is_py3 = (sys.version_info[0] == 3)
 
 
-if is_py2:
+if is_py2:  # pragma: nocover
 
     def u(text):
+        if text is None:
+            return None
         try:
             return text.decode('utf-8')
         except:
             try:
-                return unicode(text)
+                return text.decode(sys.getdefaultencoding())
             except:
-                return text
+                try:
+                    return unicode(text)
+                except:
+                    return text
     open = codecs.open
     basestring = basestring
 
 
-elif is_py3:
+elif is_py3:  # pragma: nocover
 
     def u(text):
+        if text is None:
+            return None
         if isinstance(text, bytes):
-            return text.decode('utf-8')
-        return str(text)
+            try:
+                return text.decode('utf-8')
+            except:
+                try:
+                    return text.decode(sys.getdefaultencoding())
+                except:
+                    pass
+        try:
+            return str(text)
+        except:
+            return text
     open = open
     basestring = (str, bytes)
 
 
 try:
     from importlib import import_module
-except ImportError:
+except ImportError:  # pragma: nocover
     def _resolve_name(name, package, level):
         """Return the absolute name of the module to be imported."""
         if not hasattr(package, 'rindex'):
```
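On Python 3, `u()` now tries UTF-8, then `sys.getdefaultencoding()`, and finally falls back to `str()`. Below is a standalone sketch of that branch. It narrows the bare `except:` clauses to specific exceptions, which is a deliberate deviation. On Python 3, `sys.getdefaultencoding()` always returns `'utf-8'`. The second attempt therefore repeats the first, and undecodable bytes end up as their `str()` representation.

```python
import sys


def u(text):
    """Python 3 branch of compat.u() after this change (narrowed exceptions)."""
    if text is None:
        return None
    if isinstance(text, bytes):
        try:
            return text.decode('utf-8')
        except UnicodeDecodeError:
            try:
                return text.decode(sys.getdefaultencoding())
            except (UnicodeDecodeError, LookupError):
                pass
    try:
        # For undecodable bytes this gives their repr-style text.
        return str(text)
    except Exception:
        return text


print(u(b'caf\xc3\xa9'))
```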

```diff
@@ -1,7 +1,7 @@
 # -*- coding: utf-8 -*-
 """
-    wakatime.languages
-    ~~~~~~~~~~~~~~~~~~
+    wakatime.dependencies
+    ~~~~~~~~~~~~~~~~~~~~~
 
     Parse dependencies from a source code file.
 
```

```diff
@@ -10,9 +10,12 @@
 """
 
 import logging
+import re
+import sys
 import traceback
 
 from ..compat import u, open, import_module
+from ..exceptions import NotYetImplemented
 
 
 log = logging.getLogger('WakaTime')
```

```diff
@@ -23,26 +26,28 @@ class TokenParser(object):
     language, inherit from this class and implement the :meth:`parse` method
     to return a list of dependency strings.
     """
-    source_file = None
-    lexer = None
-    dependencies = []
-    tokens = []
+    exclude = []
 
     def __init__(self, source_file, lexer=None):
+        self._tokens = None
+        self.dependencies = []
         self.source_file = source_file
         self.lexer = lexer
+        self.exclude = [re.compile(x, re.IGNORECASE) for x in self.exclude]
+
+    @property
+    def tokens(self):
+        if self._tokens is None:
+            self._tokens = self._extract_tokens()
+        return self._tokens
 
     def parse(self, tokens=[]):
         """ Should return a list of dependencies.
         """
-        if not tokens and not self.tokens:
-            self.tokens = self._extract_tokens()
-        raise Exception('Not yet implemented.')
+        raise NotYetImplemented()
 
     def append(self, dep, truncate=False, separator=None, truncate_to=None,
                strip_whitespace=True):
-        if dep == 'as':
-            print('***************** as')
         self._save_dependency(
             dep,
             truncate=truncate,
```
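This hunk replaces the class-level `tokens` and `dependencies` attributes with per-instance state and a lazily computed `tokens` property. Per-instance state matters because a mutable list defined on the class is shared by every instance. The sketch below illustrates both points with hypothetical classes (`SharedDeps`, `LazyParser`) rather than the real `TokenParser`. Wrapping the extractor's result in `list()` is an addition. For Pygments' regex-based lexers, `get_tokens_unprocessed()` is a generator, so a cached generator could only be iterated once.

```python
class SharedDeps(object):
    dependencies = []  # one list object shared by all instances


class LazyParser(object):
    """Extract tokens on first access and cache them per instance."""

    def __init__(self, extract):
        self._tokens = None
        self.dependencies = []  # fresh list for each parser
        self._extract = extract  # hypothetical stand-in for _extract_tokens

    @property
    def tokens(self):
        if self._tokens is None:
            # list() materializes a generator so the cache can be re-read;
            # the plugin stores the extractor's return value as-is.
            self._tokens = list(self._extract())
        return self._tokens


parser = LazyParser(lambda: (t for t in ['import', 'os']))
print(parser.tokens, parser.tokens)
```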

```diff
@@ -51,10 +56,21 @@ class TokenParser(object):
             strip_whitespace=strip_whitespace,
         )
 
+    def partial(self, token):
+        return u(token).split('.')[-1]
+
     def _extract_tokens(self):
         if self.lexer:
-            with open(self.source_file, 'r', encoding='utf-8') as fh:
-                return self.lexer.get_tokens_unprocessed(fh.read(512000))
+            try:
+                with open(self.source_file, 'r', encoding='utf-8') as fh:
+                    return self.lexer.get_tokens_unprocessed(fh.read(512000))
+            except:
+                pass
+            try:
+                with open(self.source_file, 'r', encoding=sys.getfilesystemencoding()) as fh:
+                    return self.lexer.get_tokens_unprocessed(fh.read(512000))
+            except:
+                pass
         return []
 
     def _save_dependency(self, dep, truncate=False, separator=None,
```

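`_extract_tokens()` now tries UTF-8 first and falls back to `sys.getfilesystemencoding()`, returning an empty list if neither read succeeds. The sketch below isolates that fallback order as a hypothetical `read_head()` helper. Unlike the diff's bare `except:` clauses, it catches only decoding, unknown-encoding, and I/O errors; that narrowing is a choice of the sketch, not the original behavior. The default limit matches the `fh.read(512000)` calls and counts characters rather than bytes, because the file is opened in text mode.

```python
import io
import sys


def read_head(path, limit=512000):
    """Return up to `limit` characters of `path`, or None if it cannot be read.

    Hypothetical helper: tries UTF-8 first, then the filesystem encoding,
    mirroring the order used by _extract_tokens() in the diff.
    """
    for encoding in ('utf-8', sys.getfilesystemencoding()):
        try:
            with io.open(path, 'r', encoding=encoding) as fh:
                return fh.read(limit)
        except (UnicodeDecodeError, LookupError, IOError):
            # Narrower than a bare `except:`; move on to the next encoding.
            continue
    return None
```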
@ -64,13 +80,21 @@ class TokenParser(object):
                separator = u('.')
            separator = u(separator)
            dep = dep.split(separator)
            if truncate_to is None or truncate_to < 0 or truncate_to > len(dep) - 1:
                truncate_to = len(dep) - 1
            dep = dep[0] if len(dep) == 1 else separator.join(dep[0:truncate_to])
            if truncate_to is None or truncate_to < 1:
                truncate_to = 1
            if truncate_to > len(dep):
                truncate_to = len(dep)
            dep = dep[0] if len(dep) == 1 else separator.join(dep[:truncate_to])
        if strip_whitespace:
            dep = dep.strip()
        if dep:
            self.dependencies.append(dep)
        if dep and (not separator or not dep.startswith(separator)):
            should_exclude = False
            for compiled in self.exclude:
                if compiled.search(dep):
                    should_exclude = True
                    break
            if not should_exclude:
                self.dependencies.append(dep)


class DependencyParser(object):

@ -83,7 +107,7 @@ class DependencyParser(object):
        self.lexer = lexer

        if self.lexer:
            module_name = self.lexer.__module__.split('.')[-1]
            module_name = self.lexer.__module__.rsplit('.', 1)[-1]
            class_name = self.lexer.__class__.__name__.replace('Lexer', 'Parser', 1)
        else:
            module_name = 'unknown'

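The reworked `_save_dependency()` in this file's diff clamps `truncate_to` into the range from 1 to the number of components (instead of defaulting to dropping the last component), then drops any dependency matching one of the parser's `exclude` patterns, which `__init__` compiles with `re.IGNORECASE`. A standalone sketch of that logic follows. `save_dependency()` is a hypothetical function, it compiles the patterns on every call for brevity, and it assumes (the enclosing condition lies outside the hunk) that splitting only happens when `truncate` is true.

```python
import re


def save_dependency(dep, exclude=(), truncate=False, separator=None,
                    truncate_to=None, strip_whitespace=True):
    """Return the normalized dependency, or None if it would be dropped.

    Hypothetical standalone version of TokenParser._save_dependency().
    """
    patterns = [re.compile(x, re.IGNORECASE) for x in exclude]
    if truncate:  # assumption: the split only happens when truncating
        if separator is None:
            separator = '.'
        parts = dep.split(separator)
        # Keep at least one and at most len(parts) leading components.
        if truncate_to is None or truncate_to < 1:
            truncate_to = 1
        if truncate_to > len(parts):
            truncate_to = len(parts)
        dep = parts[0] if len(parts) == 1 else separator.join(parts[:truncate_to])
    if strip_whitespace:
        dep = dep.strip()
    # Skip empty results and names that start with the separator.
    if dep and (not separator or not dep.startswith(separator)):
        if not any(p.search(dep) for p in patterns):
            return dep
    return None
```

For example, `save_dependency('os.path', truncate=True, truncate_to=1)` returns `'os'`, and a `truncate_to` of 0 is clamped to 1 rather than producing an empty string.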
68

packages/wakatime/dependencies/c_cpp.py

Normal file

@ -0,0 +1,68 @@
# -*- coding: utf-8 -*-
"""
    wakatime.languages.c_cpp
    ~~~~~~~~~~~~~~~~~~~~~~~~

    Parse dependencies from C++ code.

    :copyright: (c) 2014 Alan Hamlett.
    :license: BSD, see LICENSE for more details.
"""

from . import TokenParser


class CppParser(TokenParser):
    exclude = [
        r'^stdio\.h$',
        r'^stdlib\.h$',
        r'^string\.h$',
        r'^time\.h$',
    ]

    def parse(self):
        for index, token, content in self.tokens:
            self._process_token(token, content)
        return self.dependencies

    def _process_token(self, token, content):
        if self.partial(token) == 'Preproc':
            self._process_preproc(token, content)
        else:
            self._process_other(token, content)

    def _process_preproc(self, token, content):
        if content.strip().startswith('include ') or content.strip().startswith("include\t"):
            content = content.replace('include', '', 1).strip().strip('"').strip('<').strip('>').strip()
            self.append(content)

    def _process_other(self, token, content):
        pass


class CParser(TokenParser):
    exclude = [
        r'^stdio\.h$',
        r'^stdlib\.h$',
        r'^string\.h$',
        r'^time\.h$',
    ]

    def parse(self):
        for index, token, content in self.tokens:
            self._process_token(token, content)
        return self.dependencies

    def _process_token(self, token, content):
        if self.partial(token) == 'Preproc':
            self._process_preproc(token, content)
        else:
            self._process_other(token, content)

    def _process_preproc(self, token, content):
        if content.strip().startswith('include ') or content.strip().startswith("include\t"):
            content = content.replace('include', '', 1).strip().strip('"').strip('<').strip('>').strip()
            self.append(content)

    def _process_other(self, token, content):
        pass

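`CppParser` and `CParser` look only at `Preproc` tokens: when the directive text starts with `include` followed by a space or tab, they remove the keyword and any surrounding quotes or angle brackets, then let the `exclude` list drop common standard headers. The sketch below reproduces that string handling on plain strings, assuming (as the parser code implies) that the lexer delivers the text after `#` as a single token such as `include <stdio.h>`. `parse_include()` and `c_dependencies()` are hypothetical names.

```python
import re

# Same patterns as the CppParser/CParser exclude lists, compiled the way
# TokenParser.__init__ does it.
EXCLUDE = [re.compile(p, re.IGNORECASE) for p in (
    r'^stdio\.h$', r'^stdlib\.h$', r'^string\.h$', r'^time\.h$')]


def parse_include(directive):
    """Return the header named by an include directive, or None."""
    text = directive.strip()
    if not (text.startswith('include ') or text.startswith('include\t')):
        return None
    # Drop the keyword, then surrounding quotes or angle brackets.
    return text.replace('include', '', 1).strip().strip('"').strip('<').strip('>').strip()


def c_dependencies(directives):
    """Collect non-excluded headers from a list of directive strings."""
    deps = []
    for directive in directives:
        header = parse_include(directive)
        if header and not any(p.search(header) for p in EXCLUDE):
            deps.append(header)
    return deps
```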
@ -26,10 +26,8 @@ class JsonParser(TokenParser):
    state = None
    level = 0

    def parse(self, tokens=[]):
    def parse(self):
        self._process_file_name(os.path.basename(self.source_file))
        if not tokens and not self.tokens:
            self.tokens = self._extract_tokens()
        for index, token, content in self.tokens:
            self._process_token(token, content)
        return self.dependencies

64

packages/wakatime/dependencies/dotnet.py

Normal file

@ -0,0 +1,64 @@
# -*- coding: utf-8 -*-
"""
    wakatime.languages.dotnet
    ~~~~~~~~~~~~~~~~~~~~~~~~~

    Parse dependencies from .NET code.

    :copyright: (c) 2014 Alan Hamlett.
    :license: BSD, see LICENSE for more details.
"""

from . import TokenParser
from ..compat import u


class CSharpParser(TokenParser):
    exclude = [
        r'^system$',
        r'^microsoft$',
    ]
    state = None
    buffer = u('')

    def parse(self):
        for index, token, content in self.tokens:
            self._process_token(token, content)
        return self.dependencies

    def _process_token(self, token, content):
        if self.partial(token) == 'Keyword':
            self._process_keyword(token, content)
        if self.partial(token) == 'Namespace' or self.partial(token) == 'Name':
            self._process_namespace(token, content)
        elif self.partial(token) == 'Punctuation':
            self._process_punctuation(token, content)
        else:
            self._process_other(token, content)

    def _process_keyword(self, token, content):
        if content == 'using':
            self.state = 'import'
            self.buffer = u('')

    def _process_namespace(self, token, content):
        if self.state == 'import':
            if u(content) != u('import') and u(content) != u('package') and u(content) != u('namespace') and u(content) != u('static'):
                if u(content) == u(';'):  # pragma: nocover
                    self._process_punctuation(token, content)
                else:
                    self.buffer += u(content)

    def _process_punctuation(self, token, content):
        if self.state == 'import':
            if u(content) == u(';'):
                self.append(self.buffer, truncate=True)
                self.buffer = u('')
                self.state = None
            elif u(content) == u('='):
                self.buffer = u('')
            else:
                self.buffer += u(content)

    def _process_other(self, token, content):
        pass

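`CSharpParser` is a small state machine: the `using` keyword switches it into an `import` state, `Namespace`/`Name` and punctuation tokens accumulate in `buffer`, `=` discards an alias name, and `;` saves the buffer with `truncate=True`, which keeps only the first dotted component before the `exclude` check. The hypothetical `UsingCollector` below re-implements that flow on `(token_type, text)` pairs. It is a simplified sketch: it omits the diff's filtering of `import`/`package`/`namespace`/`static` names, and the token stream in the test is hand-written rather than produced by a real C# lexer.

```python
import re


class UsingCollector(object):
    """Hypothetical, simplified re-implementation of CSharpParser's state machine."""

    exclude = [re.compile(p, re.IGNORECASE) for p in (r'^system$', r'^microsoft$')]

    def __init__(self):
        self.state = None
        self.buffer = ''
        self.dependencies = []

    def feed(self, token, content):
        kind = token.split('.')[-1]  # same idea as TokenParser.partial()
        if kind == 'Keyword' and content == 'using':
            self.state, self.buffer = 'import', ''
        elif self.state != 'import':
            return
        elif kind in ('Namespace', 'Name'):
            self.buffer += content
        elif kind == 'Punctuation':
            if content == ';':
                self._save(self.buffer)
                self.state, self.buffer = None, ''
            elif content == '=':
                # `using Alias = Some.Namespace;`: forget the alias name.
                self.buffer = ''
            else:
                self.buffer += content

    def _save(self, dep):
        # truncate=True with no truncate_to keeps only the first component.
        dep = dep.split('.')[0].strip()
        if dep and not any(p.search(dep) for p in self.exclude):
            self.dependencies.append(dep)
```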
96

packages/wakatime/dependencies/jvm.py

Normal file

@ -0,0 +1,96 @@
# -*- coding: utf-8 -*-
"""
    wakatime.languages.java
    ~~~~~~~~~~~~~~~~~~~~~~~

    Parse dependencies from Java code.

    :copyright: (c) 2014 Alan Hamlett.
    :license: BSD, see LICENSE for more details.
"""

from . import TokenParser
from ..compat import u


class JavaParser(TokenParser):
    exclude = [
        r'^java\.',
        r'^javax\.',
        r'^import$',
        r'^package$',
        r'^namespace$',
        r'^static$',
    ]
    state = None
    buffer = u('')

    def parse(self):
        for index, token, content in self.tokens:
            self._process_token(token, content)
        return self.dependencies

    def _process_token(self, token, content):
        if self.partial(token) == 'Namespace':
            self._process_namespace(token, content)
        if self.partial(token) == 'Name':
            self._process_name(token, content)
        elif self.partial(token) == 'Attribute':
            self._process_attribute(token, content)
        elif self.partial(token) == 'Operator':
            self._process_operator(token, content)
        else:
            self._process_other(token, content)

    def _process_namespace(self, token, content):
        if u(content) == u('import'):
            self.state = 'import'

        elif self.state == 'import':
            keywords = [
                u('package'),
                u('namespace'),
                u('static'),
            ]
            if u(content) in keywords:
                return
            self.buffer = u('{0}{1}').format(self.buffer, u(content))

        elif self.state == 'import-finished':
            content = content.split(u('.'))

            if len(content) == 1:
                self.append(content[0])

            elif len(content) > 1:
                if len(content[0]) == 3:
                    content = content[1:]
                if content[-1] == u('*'):
                    content = content[:len(content) - 1]

                if len(content) == 1:
                    self.append(content[0])
                elif len(content) > 1:
                    self.append(u('.').join(content[:2]))

            self.state = None

    def _process_name(self, token, content):
        if self.state == 'import':
            self.buffer = u('{0}{1}').format(self.buffer, u(content))

    def _process_attribute(self, token, content):
        if self.state == 'import':
            self.buffer = u('{0}{1}').format(self.buffer, u(content))

    def _process_operator(self, token, content):
        if u(content) == u(';'):
            self.state = 'import-finished'
            self._process_namespace(token, self.buffer)
            self.state = None
            self.buffer = u('')
        elif self.state == 'import':
            self.buffer = u('{0}{1}').format(self.buffer, u(content))

    def _process_other(self, token, content):
        pass

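When `JavaParser` reaches the `;` that ends an import, it re-enters `_process_namespace()` in the `import-finished` state with the whole dotted path: a leading three-character segment (typically a domain such as `com` or `org`) is dropped, a trailing `*` wildcard is removed, and at most two components are kept. The hypothetical `java_dependency()` below reproduces that reduction on a plain string and applies the `^java\.` and `^javax\.` exclude patterns from the diff; the remaining keyword patterns are left out of the sketch.

```python
import re

# Two of the JavaParser exclude patterns, compiled as TokenParser.__init__ does.
EXCLUDE = [re.compile(p, re.IGNORECASE) for p in (r'^java\.', r'^javax\.')]


def java_dependency(import_path, exclude=EXCLUDE):
    """Reduce a Java import path to a short dependency name, or None."""
    parts = import_path.split('.')
    if len(parts) > 1:
        # Heuristic from the diff: any three-character first segment is dropped.
        if len(parts[0]) == 3:
            parts = parts[1:]
        # `import foo.bar.*;` names a package, not a class.
        if parts[-1] == '*':
            parts = parts[:-1]
    if not parts:
        return None
    dep = parts[0] if len(parts) == 1 else '.'.join(parts[:2])
    if not dep or any(p.search(dep) for p in exclude):
        return None
    return dep
```

Because the three-character rule is a heuristic, a path such as `foo.bar.Baz` would also lose its first segment and become `bar.Baz`.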
@ -17,15 +17,13 @@ class PhpParser(TokenParser):
    state = None
    parens = 0

    def parse(self, tokens=[]):
        if not tokens and not self.tokens:
            self.tokens = self._extract_tokens()
    def parse(self):
        for index, token, content in self.tokens:
            self._process_token(token, content)
        return self.dependencies

    def _process_token(self, token, content):
        if u(token).split('.')[-1] == 'Keyword':
        if self.partial(token) == 'Keyword':
            self._process_keyword(token, content)
        elif u(token) == 'Token.Literal.String.Single' or u(token) == 'Token.Literal.String.Double':
            self._process_literal_string(token, content)

@ -33,9 +31,9 @@ class PhpParser(TokenParser):
            self._process_name(token, content)
        elif u(token) == 'Token.Name.Function':
            self._process_function(token, content)
        elif u(token).split('.')[-1] == 'Punctuation':
        elif self.partial(token) == 'Punctuation':
            self._process_punctuation(token, content)
        elif u(token).split('.')[-1] == 'Text':
        elif self.partial(token) == 'Text':
            self._process_text(token, content)
        else:
            self._process_other(token, content)

@ -63,10 +61,10 @@ class PhpParser(TokenParser):

    def _process_literal_string(self, token, content):
        if self.state == 'include':
            if content != '"':
            if content != '"' and content != "'":
                content = content.strip()
                if u(token) == 'Token.Literal.String.Double':
                    content = u('"{0}"').format(content)
                    content = u("'{0}'").format(content)
                self.append(content)
                self.state = None

@ -10,33 +10,30 @@
"""

from . import TokenParser
from ..compat import u


class PythonParser(TokenParser):
    state = None
    parens = 0
    nonpackage = False
    exclude = [
        r'^os$',
        r'^sys\.',
    ]

    def parse(self, tokens=[]):
        if not tokens and not self.tokens:
            self.tokens = self._extract_tokens()
    def parse(self):
        for index, token, content in self.tokens:
            self._process_token(token, content)
        return self.dependencies

    def _process_token(self, token, content):
        if u(token).split('.')[-1] == 'Namespace':
        if self.partial(token) == 'Namespace':
            self._process_namespace(token, content)
        elif u(token).split('.')[-1] == 'Name':
            self._process_name(token, content)
        elif u(token).split('.')[-1] == 'Word':
            self._process_word(token, content)
        elif u(token).split('.')[-1] == 'Operator':
        elif self.partial(token) == 'Operator':
            self._process_operator(token, content)
        elif u(token).split('.')[-1] == 'Punctuation':
        elif self.partial(token) == 'Punctuation':
            self._process_punctuation(token, content)
        elif u(token).split('.')[-1] == 'Text':
        elif self.partial(token) == 'Text':
            self._process_text(token, content)
        else:
            self._process_other(token, content)

@ -50,38 +47,6 @@ class PythonParser(TokenParser):
            else:
                self._process_import(token, content)

    def _process_name(self, token, content):
        if self.state is not None:
            if self.nonpackage:
                self.nonpackage = False
            else:
                if self.state == 'from':
                    self.append(content, truncate=True, truncate_to=0)
                if self.state == 'from-2' and content != 'import':
                    self.append(content, truncate=True, truncate_to=0)
                elif self.state == 'import':
                    self.append(content, truncate=True, truncate_to=0)
                elif self.state == 'import-2':
                    self.append(content, truncate=True, truncate_to=0)
                else:
                    self.state = None

    def _process_word(self, token, content):
        if self.state is not None:
            if self.nonpackage:
                self.nonpackage = False
            else:
                if self.state == 'from':
                    self.append(content, truncate=True, truncate_to=0)
                if self.state == 'from-2' and content != 'import':
                    self.append(content, truncate=True, truncate_to=0)
                elif self.state == 'import':
                    self.append(content, truncate=True, truncate_to=0)
                elif self.state == 'import-2':
                    self.append(content, truncate=True, truncate_to=0)
                else:
                    self.state = None

    def _process_operator(self, token, content):
        if self.state is not None:
            if content == '.':

@ -106,15 +71,15 @@ class PythonParser(TokenParser):
    def _process_import(self, token, content):
        if not self.nonpackage:
            if self.state == 'from':
                self.append(content, truncate=True, truncate_to=0)
                self.append(content, truncate=True, truncate_to=1)
                self.state = 'from-2'
            elif self.state == 'from-2' and content != 'import':
                self.append(content, truncate=True, truncate_to=0)
                self.append(content, truncate=True, truncate_to=1)
            elif self.state == 'import':
                self.append(content, truncate=True, truncate_to=0)
                self.append(content, truncate=True, truncate_to=1)
                self.state = 'import-2'
            elif self.state == 'import-2':
                self.append(content, truncate=True, truncate_to=0)
                self.append(content, truncate=True, truncate_to=1)
            else:
                self.state = None
        self.nonpackage = False

@ -71,9 +71,7 @@ KEYWORDS = [

class LassoJavascriptParser(TokenParser):

    def parse(self, tokens=[]):
        if not tokens and not self.tokens:
            self.tokens = self._extract_tokens()
    def parse(self):
        for index, token, content in self.tokens:
            self._process_token(token, content)
        return self.dependencies

@ -99,9 +97,7 @@ class HtmlDjangoParser(TokenParser):
    current_attr = None
    current_attr_value = None

    def parse(self, tokens=[]):
        if not tokens and not self.tokens:
            self.tokens = self._extract_tokens()
    def parse(self):
        for index, token, content in self.tokens:
            self._process_token(token, content)
        return self.dependencies

@ -22,7 +22,7 @@ FILES = {

class UnknownParser(TokenParser):

    def parse(self, tokens=[]):
    def parse(self):
        self._process_file_name(os.path.basename(self.source_file))
        return self.dependencies

14

packages/wakatime/exceptions.py

Normal file

@ -0,0 +1,14 @@
# -*- coding: utf-8 -*-
"""
    wakatime.exceptions
    ~~~~~~~~~~~~~~~~~~~

    Custom exceptions.

    :copyright: (c) 2015 Alan Hamlett.
    :license: BSD, see LICENSE for more details.
"""


class NotYetImplemented(Exception):
    """This method needs to be implemented."""

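The new `NotYetImplemented` exception replaces the generic `Exception('Not yet implemented.')` that `TokenParser.parse()` used to raise, so callers can catch the missing-parser case specifically. The sketch below shows the contract described in the `TokenParser` docstring (subclass it and implement `parse()` to return a list of dependency strings); `BaseParser` and `NamespaceParser` are hypothetical, simplified stand-ins rather than the wakatime classes. Python's built-in `NotImplementedError` would be the standard-library alternative to a custom exception.

```python
class NotYetImplemented(Exception):
    """This method needs to be implemented."""


class BaseParser(object):
    """Hypothetical simplified base class following TokenParser's contract."""

    def __init__(self, tokens):
        self.tokens = tokens  # iterable of (token_type, text) pairs
        self.dependencies = []

    def parse(self):
        # Subclasses must override this and return a list of dependency strings.
        raise NotYetImplemented()


class NamespaceParser(BaseParser):
    """Hypothetical subclass that records the text of every Namespace token."""

    def parse(self):
        for token, content in self.tokens:
            if token.split('.')[-1] == 'Namespace':
                self.dependencies.append(content)
        return self.dependencies


try:
    BaseParser([]).parse()
except NotYetImplemented:
    print('base parser has no parse() implementation')
```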
@ -1,37 +0,0 @@
# -*- coding: utf-8 -*-
"""
    wakatime.languages.c_cpp
    ~~~~~~~~~~~~~~~~~~~~~~~~

    Parse dependencies from C++ code.

    :copyright: (c) 2014 Alan Hamlett.
    :license: BSD, see LICENSE for more details.
"""

from . import TokenParser
from ..compat import u


class CppParser(TokenParser):

    def parse(self, tokens=[]):
        if not tokens and not self.tokens:
            self.tokens = self._extract_tokens()
        for index, token, content in self.tokens:
            self._process_token(token, content)
        return self.dependencies

    def _process_token(self, token, content):
        if u(token).split('.')[-1] == 'Preproc':
            self._process_preproc(token, content)
        else:
            self._process_other(token, content)

    def _process_preproc(self, token, content):
        if content.strip().startswith('include ') or content.strip().startswith("include\t"):
            content = content.replace('include', '', 1).strip()
            self.append(content)

    def _process_other(self, token, content):
        pass

@ -1,36 +0,0 @@
# -*- coding: utf-8 -*-
"""
    wakatime.languages.dotnet
    ~~~~~~~~~~~~~~~~~~~~~~~~~

    Parse dependencies from .NET code.

    :copyright: (c) 2014 Alan Hamlett.
    :license: BSD, see LICENSE for more details.
"""

from . import TokenParser
from ..compat import u


class CSharpParser(TokenParser):

    def parse(self, tokens=[]):
        if not tokens and not self.tokens:
            self.tokens = self._extract_tokens()
        for index, token, content in self.tokens:
            self._process_token(token, content)
        return self.dependencies

    def _process_token(self, token, content):
        if u(token).split('.')[-1] == 'Namespace':
            self._process_namespace(token, content)
        else:
            self._process_other(token, content)

    def _process_namespace(self, token, content):
        if content != 'import' and content != 'package' and content != 'namespace':
            self.append(content, truncate=True)

    def _process_other(self, token, content):
        pass

@ -1,36 +0,0 @@
# -*- coding: utf-8 -*-
"""
    wakatime.languages.java
    ~~~~~~~~~~~~~~~~~~~~~~~

    Parse dependencies from Java code.

    :copyright: (c) 2014 Alan Hamlett.
    :license: BSD, see LICENSE for more details.
"""

from . import TokenParser
from ..compat import u


class JavaParser(TokenParser):

    def parse(self, tokens=[]):
        if not tokens and not self.tokens:
            self.tokens = self._extract_tokens()
        for index, token, content in self.tokens:
            self._process_token(token, content)
        return self.dependencies

    def _process_token(self, token, content):
        if u(token).split('.')[-1] == 'Namespace':
            self._process_namespace(token, content)
        else:
            self._process_other(token, content)

    def _process_namespace(self, token, content):
        if content != 'import' and content != 'package' and content != 'namespace':
            self.append(content, truncate=True)

    def _process_other(self, token, content):
        pass

@ -1,103 +0,0 @@
# -*- coding: utf-8 -*-
"""
    wakatime.log
    ~~~~~~~~~~~~

    Provides the configured logger for writing JSON to the log file.

    :copyright: (c) 2013 Alan Hamlett.
    :license: BSD, see LICENSE for more details.
"""

import logging
import os
import sys

from .packages import simplejson as json
from .compat import u
try:
    from collections import OrderedDict
except ImportError:
    from .packages.ordereddict import OrderedDict


class CustomEncoder(json.JSONEncoder):

    def default(self, obj):
        if isinstance(obj, bytes):
            obj = bytes.decode(obj)
            return json.dumps(obj)
        try:
            encoded = super(CustomEncoder, self).default(obj)
        except UnicodeDecodeError:
            obj = u(obj)
            encoded = super(CustomEncoder, self).default(obj)
        return encoded