mirror of

https://github.com/wakatime/sublime-wakatime.git

synced 2023-08-10 21:13:02 +03:00

Compare commits

71 Commits

| Author | SHA1 | Date | |

|---|---|---|---|

| 21601f9688 | |||

| 4c3ec87341 | |||

| b149d7fc87 | |||

| 52e6107c6e | |||

| b340637331 | |||

| 044867449a | |||

| 9e3f438823 | |||

| 887d55c3f3 | |||

| 19d54f3310 | |||

| 514a8762eb | |||

| 957c74d226 | |||

| 7b0432d6ff | |||

| 09754849be | |||

| 25ad48a97a | |||

| 3b2520afa9 | |||

| 77c2041ad3 | |||

| 8af3b53937 | |||

| 5ef2e6954e | |||

| ca94272de5 | |||

| f19a448d95 | |||

| e178765412 | |||

| 6a7de84b9c | |||

| 48810f2977 | |||

| 260eedb31d | |||

| 02e2bfcad2 | |||

| f14ece63f3 | |||

| cb7f786ec8 | |||

| ab8711d0b1 | |||

| 2354be358c | |||

| 443215bd90 | |||

| c64f125dc4 | |||

| 050b14fb53 | |||

| c7efc33463 | |||

| d0ddbed006 | |||

| 3ce8f388ab | |||

| 90731146f9 | |||

| e1ab92be6d | |||

| 8b59e46c64 | |||

| 006341eb72 | |||

| b54e0e13f6 | |||

| 835c7db864 | |||

| 53e8bb04e9 | |||

| 4aa06e3829 | |||

| 297f65733f | |||

| 5ba5e6d21b | |||

| 32eadda81f | |||

| c537044801 | |||

| a97792c23c | |||

| 4223f3575f | |||

| 284cdf3ce4 | |||

| 27afc41bf4 | |||

| 1fdda0d64a | |||

| c90a4863e9 | |||

| 94343e5b07 | |||

| 03acea6e25 | |||

| 77594700bd | |||

| 6681409e98 | |||

| 8f7837269a | |||

| a523b3aa4d | |||

| 6985ce32bb | |||

| 4be40c7720 | |||

| eeb7fd8219 | |||

| 11fbd2d2a6 | |||

| 3cecd0de5d | |||

| c50100e675 | |||

| c1da94bc18 | |||

| 7f9d6ede9d | |||

| 192a5c7aa7 | |||

| 16bbe21be9 | |||

| 5ebaf12a99 | |||

| 1834e8978a |

205

HISTORY.rst

205

HISTORY.rst

@ -3,6 +3,211 @@ History

|

||||

-------

|

||||

|

||||

|

||||

7.0.8 (2016-07-21)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to master version to fix debug logging encoding bug.

|

||||

|

||||

|

||||

7.0.7 (2016-07-06)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v6.0.7.

|

||||

- Handle unknown exceptions from requests library by deleting cached session

|

||||

object because it could be from a previous conflicting version.

|

||||

- New hostname setting in config file to set machine hostname. Hostname

|

||||

argument takes priority over hostname from config file.

|

||||

- Prevent logging unrelated exception when logging tracebacks.

|

||||

- Use correct namespace for pygments.lexers.ClassNotFound exception so it is

|

||||

caught when dependency detection not available for a language.

|

||||

|

||||

|

||||

7.0.6 (2016-06-13)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v6.0.5.

|

||||

- Upgrade pygments to v2.1.3 for better language coverage.

|

||||

|

||||

|

||||

7.0.5 (2016-06-08)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to master version to fix bug in urllib3 package causing

|

||||

unhandled retry exceptions.

|

||||

- Prevent tracking git branch with detached head.

|

||||

|

||||

|

||||

7.0.4 (2016-05-21)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v6.0.3.

|

||||

- Upgrade requests dependency to v2.10.0.

|

||||

- Support for SOCKS proxies.

|

||||

|

||||

|

||||

7.0.3 (2016-05-16)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v6.0.2.

|

||||

- Prevent popup on Mac when xcode-tools is not installed.

|

||||

|

||||

|

||||

7.0.2 (2016-04-29)

|

||||

++++++++++++++++++

|

||||

|

||||

- Prevent implicit unicode decoding from string format when logging output

|

||||

from Python version check.

|

||||

|

||||

|

||||

7.0.1 (2016-04-28)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v6.0.1.

|

||||

- Fix bug which prevented plugin from being sent with extra heartbeats.

|

||||

|

||||

|

||||

7.0.0 (2016-04-28)

|

||||

++++++++++++++++++

|

||||

|

||||

- Queue heartbeats and send to wakatime-cli after 4 seconds.

|

||||

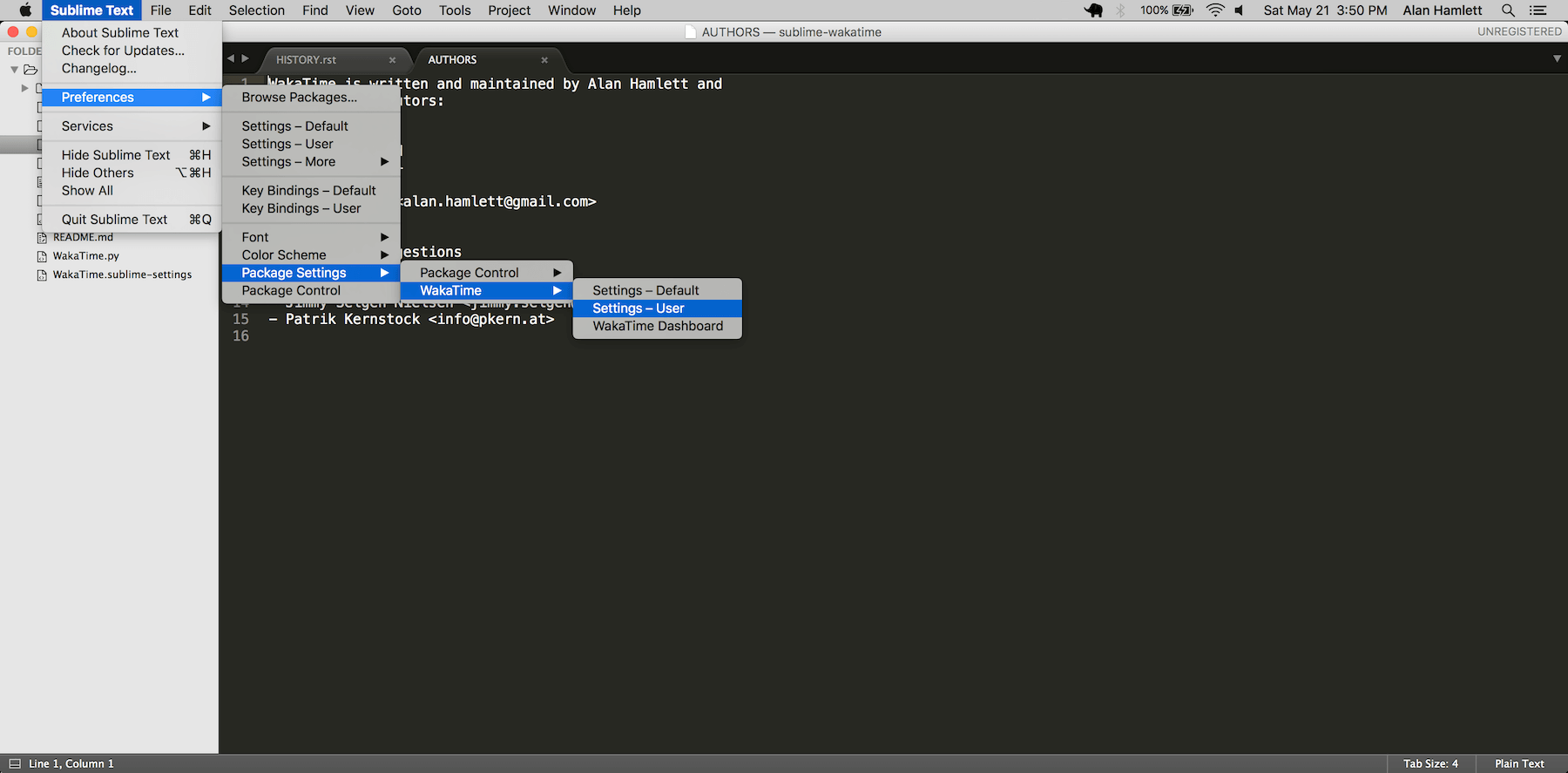

- Nest settings menu under Package Settings.

|

||||

- Upgrade wakatime-cli to v6.0.0.

|

||||

- Increase default network timeout to 60 seconds when sending heartbeats to

|

||||

the api.

|

||||

- New --extra-heartbeats command line argument for sending a JSON array of

|

||||

extra queued heartbeats to STDIN.

|

||||

- Change --entitytype command line argument to --entity-type.

|

||||

- No longer allowing --entity-type of url.

|

||||

- Support passing an alternate language to cli to be used when a language can

|

||||

not be guessed from the code file.

|

||||

|

||||

|

||||

6.0.8 (2016-04-18)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v5.0.0.

|

||||

- Support regex patterns in projectmap config section for renaming projects.

|

||||

- Upgrade pytz to v2016.3.

|

||||

- Upgrade tzlocal to v1.2.2.

|

||||

|

||||

|

||||

6.0.7 (2016-03-11)

|

||||

++++++++++++++++++

|

||||

|

||||

- Fix bug causing RuntimeError when finding Python location

|

||||

|

||||

|

||||

6.0.6 (2016-03-06)

|

||||

++++++++++++++++++

|

||||

|

||||

- upgrade wakatime-cli to v4.1.13

|

||||

- encode TimeZone as utf-8 before adding to headers

|

||||

- encode X-Machine-Name as utf-8 before adding to headers

|

||||

|

||||

|

||||

6.0.5 (2016-03-06)

|

||||

++++++++++++++++++

|

||||

|

||||

- upgrade wakatime-cli to v4.1.11

|

||||

- encode machine hostname as Unicode when adding to X-Machine-Name header

|

||||

|

||||

|

||||

6.0.4 (2016-01-15)

|

||||

++++++++++++++++++

|

||||

|

||||

- fix UnicodeDecodeError on ST2 with non-English locale

|

||||

|

||||

|

||||

6.0.3 (2016-01-11)

|

||||

++++++++++++++++++

|

||||

|

||||

- upgrade wakatime-cli core to v4.1.10

|

||||

- accept 201 or 202 response codes as success from api

|

||||

- upgrade requests package to v2.9.1

|

||||

|

||||

|

||||

6.0.2 (2016-01-06)

|

||||

++++++++++++++++++

|

||||

|

||||

- upgrade wakatime-cli core to v4.1.9

|

||||

- improve C# dependency detection

|

||||

- correctly log exception tracebacks

|

||||

- log all unknown exceptions to wakatime.log file

|

||||

- disable urllib3 SSL warning from every request

|

||||

- detect dependencies from golang files

|

||||

- use api.wakatime.com for sending heartbeats

|

||||

|

||||

|

||||

6.0.1 (2016-01-01)

|

||||

++++++++++++++++++

|

||||

|

||||

- use embedded python if system python is broken, or doesn't output a version number

|

||||

- log output from wakatime-cli in ST console when in debug mode

|

||||

|

||||

|

||||

6.0.0 (2015-12-01)

|

||||

++++++++++++++++++

|

||||

|

||||

- use embeddable Python instead of installing on Windows

|

||||

|

||||

|

||||

5.0.1 (2015-10-06)

|

||||

++++++++++++++++++

|

||||

|

||||

- look for python in system PATH again

|

||||

|

||||

|

||||

5.0.0 (2015-10-02)

|

||||

++++++++++++++++++

|

||||

|

||||

- improve logging with levels and log function

|

||||

- switch registry warnings to debug log level

|

||||

|

||||

|

||||

4.0.20 (2015-10-01)

|

||||

++++++++++++++++++

|

||||

|

||||

- correctly find python binary in non-Windows environments

|

||||

|

||||

|

||||

4.0.19 (2015-10-01)

|

||||

++++++++++++++++++

|

||||

|

||||

- handle case where ST builtin python does not have _winreg or winreg module

|

||||

|

||||

|

||||

4.0.18 (2015-10-01)

|

||||

++++++++++++++++++

|

||||

|

||||

- find python location from windows registry

|

||||

|

||||

|

||||

4.0.17 (2015-10-01)

|

||||

++++++++++++++++++

|

||||

|

||||

- download python in non blocking background thread for Windows machines

|

||||

|

||||

|

||||

4.0.16 (2015-09-29)

|

||||

++++++++++++++++++

|

||||

|

||||

- upgrade wakatime cli to v4.1.8

|

||||

- fix bug in guess_language function

|

||||

- improve dependency detection

|

||||

- default request timeout of 30 seconds

|

||||

- new --timeout command line argument to change request timeout in seconds

|

||||

- allow passing command line arguments using sys.argv

|

||||

- fix entry point for pypi distribution

|

||||

- new --entity and --entitytype command line arguments

|

||||

|

||||

|

||||

4.0.15 (2015-08-28)

|

||||

++++++++++++++++++

|

||||

|

||||

- upgrade wakatime cli to v4.1.3

|

||||

- fix local session caching

|

||||

|

||||

|

||||

4.0.14 (2015-08-25)

|

||||

++++++++++++++++++

|

||||

|

||||

|

||||

@ -6,24 +6,37 @@

|

||||

"children":

|

||||

[

|

||||

{

|

||||

"caption": "WakaTime",

|

||||

"mnemonic": "W",

|

||||

"id": "wakatime-settings",

|

||||

"caption": "Package Settings",

|

||||

"mnemonic": "P",

|

||||

"id": "package-settings",

|

||||

"children":

|

||||

[

|

||||

{

|

||||

"command": "open_file", "args":

|

||||

{

|

||||

"file": "${packages}/WakaTime/WakaTime.sublime-settings"

|

||||

},

|

||||

"caption": "Settings – Default"

|

||||

},

|

||||

{

|

||||

"command": "open_file", "args":

|

||||

{

|

||||

"file": "${packages}/User/WakaTime.sublime-settings"

|

||||

},

|

||||

"caption": "Settings – User"

|

||||

"caption": "WakaTime",

|

||||

"mnemonic": "W",

|

||||

"id": "wakatime-settings",

|

||||

"children":

|

||||

[

|

||||

{

|

||||

"command": "open_file", "args":

|

||||

{

|

||||

"file": "${packages}/WakaTime/WakaTime.sublime-settings"

|

||||

},

|

||||

"caption": "Settings – Default"

|

||||

},

|

||||

{

|

||||

"command": "open_file", "args":

|

||||

{

|

||||

"file": "${packages}/User/WakaTime.sublime-settings"

|

||||

},

|

||||

"caption": "Settings – User"

|

||||

},

|

||||

{

|

||||

"command": "wakatime_dashboard",

|

||||

"args": {},

|

||||

"caption": "WakaTime Dashboard"

|

||||

}

|

||||

]

|

||||

}

|

||||

]

|

||||

}

|

||||

|

||||

23

README.md

23

README.md

@ -1,13 +1,12 @@

|

||||

sublime-wakatime

|

||||

================

|

||||

|

||||

Fully automatic time tracking for Sublime Text 2 & 3.

|

||||

Metrics, insights, and time tracking automatically generated from your programming activity.

|

||||

|

||||

|

||||

Installation

|

||||

------------

|

||||

|

||||

Heads Up! For Sublime Text 2 on Windows & Linux, WakaTime depends on [Python](http://www.python.org/getit/) being installed to work correctly.

|

||||

|

||||

1. Install [Package Control](https://packagecontrol.io/installation).

|

||||

|

||||

2. Using [Package Control](https://packagecontrol.io/docs/usage):

|

||||

@ -24,17 +23,31 @@ Heads Up! For Sublime Text 2 on Windows & Linux, WakaTime depends on [Python](ht

|

||||

|

||||

5. Visit https://wakatime.com/dashboard to see your logged time.

|

||||

|

||||

|

||||

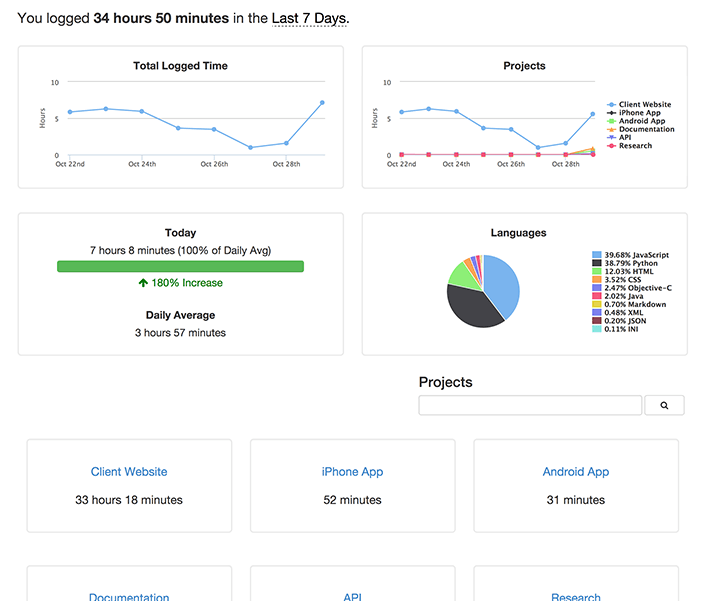

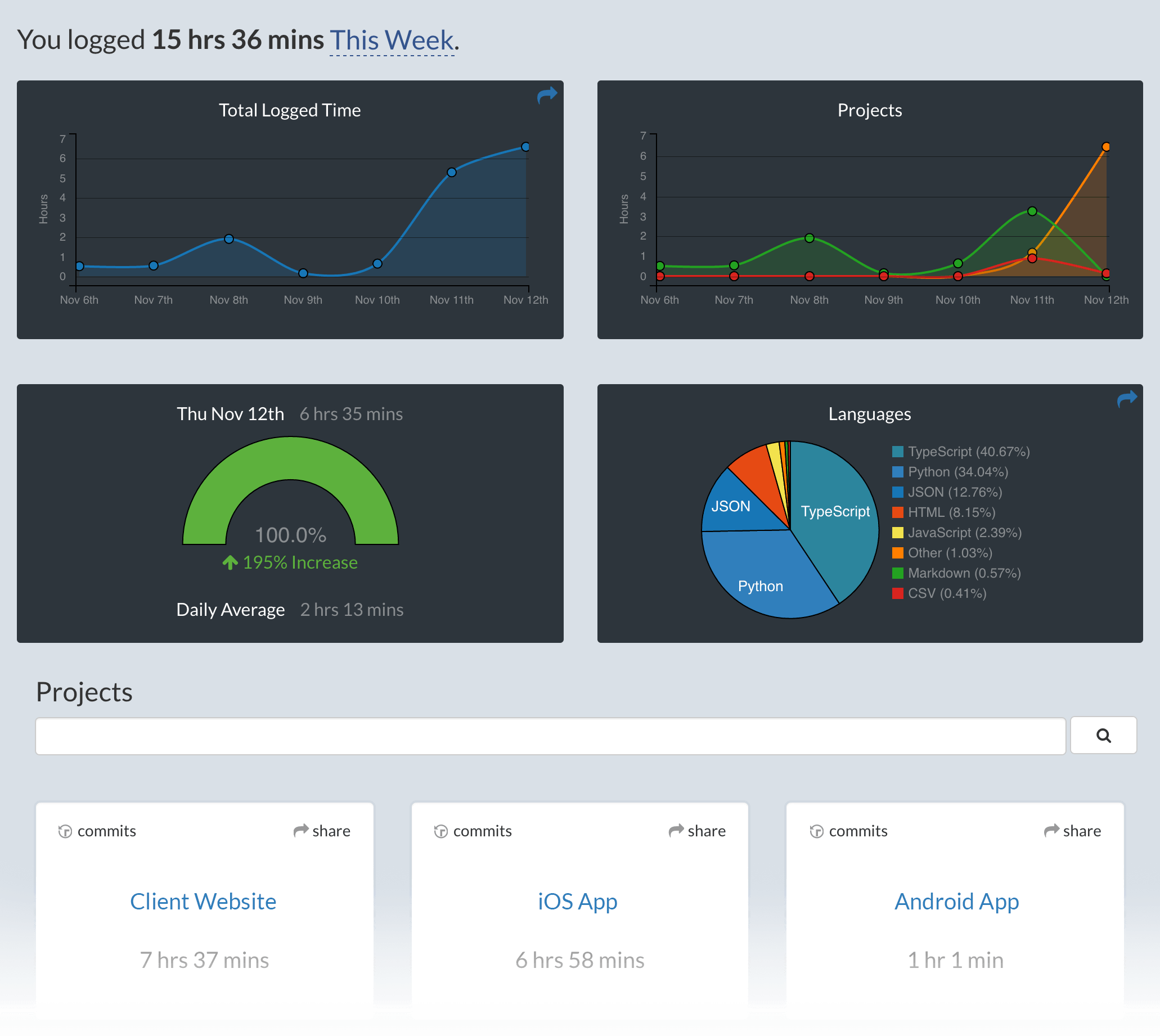

Screen Shots

|

||||

------------

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

Unresponsive Plugin Warning

|

||||

---------------------------

|

||||

|

||||

In Sublime Text 2, if you get a warning message:

|

||||

|

||||

A plugin (WakaTime) may be making Sublime Text unresponsive by taking too long (0.017332s) in its on_modified callback.

|

||||

|

||||

To fix this, go to `Preferences > Settings - User` then add the following setting:

|

||||

|

||||

`"detect_slow_plugins": false`

|

||||

|

||||

|

||||

Troubleshooting

|

||||

---------------

|

||||

|

||||

First, turn on debug mode in your `WakaTime.sublime-settings` file.

|

||||

|

||||

|

||||

|

||||

|

||||

Add the line: `"debug": true`

|

||||

|

||||

|

||||

555

WakaTime.py

555

WakaTime.py

@ -7,22 +7,78 @@ Website: https://wakatime.com/

|

||||

==========================================================="""

|

||||

|

||||

|

||||

__version__ = '4.0.14'

|

||||

__version__ = '7.0.8'

|

||||

|

||||

|

||||

import sublime

|

||||

import sublime_plugin

|

||||

|

||||

import glob

|

||||

import json

|

||||

import os

|

||||

import platform

|

||||

import re

|

||||

import sys

|

||||

import time

|

||||

import threading

|

||||

import urllib

|

||||

import webbrowser

|

||||

from datetime import datetime

|

||||

from subprocess import Popen

|

||||

from zipfile import ZipFile

|

||||

from subprocess import Popen, STDOUT, PIPE

|

||||

try:

|

||||

import _winreg as winreg # py2

|

||||

except ImportError:

|

||||

try:

|

||||

import winreg # py3

|

||||

except ImportError:

|

||||

winreg = None

|

||||

try:

|

||||

import Queue as queue # py2

|

||||

except ImportError:

|

||||

import queue # py3

|

||||

|

||||

|

||||

is_py2 = (sys.version_info[0] == 2)

|

||||

is_py3 = (sys.version_info[0] == 3)

|

||||

|

||||

if is_py2:

|

||||

def u(text):

|

||||

if text is None:

|

||||

return None

|

||||

try:

|

||||

return text.decode('utf-8')

|

||||

except:

|

||||

try:

|

||||

return text.decode(sys.getdefaultencoding())

|

||||

except:

|

||||

try:

|

||||

return unicode(text)

|

||||

except:

|

||||

return text

|

||||

|

||||

elif is_py3:

|

||||

def u(text):

|

||||

if text is None:

|

||||

return None

|

||||

if isinstance(text, bytes):

|

||||

try:

|

||||

return text.decode('utf-8')

|

||||

except:

|

||||

try:

|

||||

return text.decode(sys.getdefaultencoding())

|

||||

except:

|

||||

pass

|

||||

try:

|

||||

return str(text)

|

||||

except:

|

||||

return text

|

||||

|

||||

else:

|

||||

raise Exception('Unsupported Python version: {0}.{1}.{2}'.format(

|

||||

sys.version_info[0],

|

||||

sys.version_info[1],

|

||||

sys.version_info[2],

|

||||

))

|

||||

|

||||

|

||||

# globals

|

||||

@ -37,8 +93,15 @@ LAST_HEARTBEAT = {

|

||||

'file': None,

|

||||

'is_write': False,

|

||||

}

|

||||

LOCK = threading.RLock()

|

||||

PYTHON_LOCATION = None

|

||||

HEARTBEATS = queue.Queue()

|

||||

|

||||

|

||||

# Log Levels

|

||||

DEBUG = 'DEBUG'

|

||||

INFO = 'INFO'

|

||||

WARNING = 'WARNING'

|

||||

ERROR = 'ERROR'

|

||||

|

||||

|

||||

# add wakatime package to path

|

||||

@ -49,7 +112,59 @@ except ImportError:

|

||||

pass

|

||||

|

||||

|

||||

def createConfigFile():

|

||||

def set_timeout(callback, seconds):

|

||||

"""Runs the callback after the given seconds delay.

|

||||

|

||||

If this is Sublime Text 3, runs the callback on an alternate thread. If this

|

||||

is Sublime Text 2, runs the callback in the main thread.

|

||||

"""

|

||||

|

||||

milliseconds = int(seconds * 1000)

|

||||

try:

|

||||

sublime.set_timeout_async(callback, milliseconds)

|

||||

except AttributeError:

|

||||

sublime.set_timeout(callback, milliseconds)

|

||||

|

||||

|

||||

def log(lvl, message, *args, **kwargs):

|

||||

try:

|

||||

if lvl == DEBUG and not SETTINGS.get('debug'):

|

||||

return

|

||||

msg = message

|

||||

if len(args) > 0:

|

||||

msg = message.format(*args)

|

||||

elif len(kwargs) > 0:

|

||||

msg = message.format(**kwargs)

|

||||

print('[WakaTime] [{lvl}] {msg}'.format(lvl=lvl, msg=msg))

|

||||

except RuntimeError:

|

||||

set_timeout(lambda: log(lvl, message, *args, **kwargs), 0)

|

||||

|

||||

|

||||

def resources_folder():

|

||||

if platform.system() == 'Windows':

|

||||

return os.path.join(os.getenv('APPDATA'), 'WakaTime')

|

||||

else:

|

||||

return os.path.join(os.path.expanduser('~'), '.wakatime')

|

||||

|

||||

|

||||

def update_status_bar(status):

|

||||

"""Updates the status bar."""

|

||||

|

||||

try:

|

||||

if SETTINGS.get('status_bar_message'):

|

||||

msg = datetime.now().strftime(SETTINGS.get('status_bar_message_fmt'))

|

||||

if '{status}' in msg:

|

||||

msg = msg.format(status=status)

|

||||

|

||||

active_window = sublime.active_window()

|

||||

if active_window:

|

||||

for view in active_window.views():

|

||||

view.set_status('wakatime', msg)

|

||||

except RuntimeError:

|

||||

set_timeout(lambda: update_status_bar(status), 0)

|

||||

|

||||

|

||||

def create_config_file():

|

||||

"""Creates the .wakatime.cfg INI file in $HOME directory, if it does

|

||||

not already exist.

|

||||

"""

|

||||

@ -70,7 +185,7 @@ def createConfigFile():

|

||||

def prompt_api_key():

|

||||

global SETTINGS

|

||||

|

||||

createConfigFile()

|

||||

create_config_file()

|

||||

|

||||

default_key = ''

|

||||

try:

|

||||

@ -93,35 +208,129 @@ def prompt_api_key():

|

||||

window.show_input_panel('[WakaTime] Enter your wakatime.com api key:', default_key, got_key, None, None)

|

||||

return True

|

||||

else:

|

||||

print('[WakaTime] Error: Could not prompt for api key because no window found.')

|

||||

log(ERROR, 'Could not prompt for api key because no window found.')

|

||||

return False

|

||||

|

||||

|

||||

def python_binary():

|

||||

global PYTHON_LOCATION

|

||||

if PYTHON_LOCATION is not None:

|

||||

return PYTHON_LOCATION

|

||||

|

||||

# look for python in PATH and common install locations

|

||||

paths = [

|

||||

"pythonw",

|

||||

"python",

|

||||

"/usr/local/bin/python",

|

||||

"/usr/bin/python",

|

||||

os.path.join(resources_folder(), 'python'),

|

||||

None,

|

||||

'/',

|

||||

'/usr/local/bin/',

|

||||

'/usr/bin/',

|

||||

]

|

||||

for path in paths:

|

||||

try:

|

||||

Popen([path, '--version'])

|

||||

PYTHON_LOCATION = path

|

||||

path = find_python_in_folder(path)

|

||||

if path is not None:

|

||||

set_python_binary_location(path)

|

||||

return path

|

||||

except:

|

||||

pass

|

||||

for path in glob.iglob('/python*'):

|

||||

path = os.path.realpath(os.path.join(path, 'pythonw'))

|

||||

try:

|

||||

Popen([path, '--version'])

|

||||

PYTHON_LOCATION = path

|

||||

|

||||

# look for python in windows registry

|

||||

path = find_python_from_registry(r'SOFTWARE\Python\PythonCore')

|

||||

if path is not None:

|

||||

set_python_binary_location(path)

|

||||

return path

|

||||

path = find_python_from_registry(r'SOFTWARE\Wow6432Node\Python\PythonCore')

|

||||

if path is not None:

|

||||

set_python_binary_location(path)

|

||||

return path

|

||||

|

||||

return None

|

||||

|

||||

|

||||

def set_python_binary_location(path):

|

||||

global PYTHON_LOCATION

|

||||

PYTHON_LOCATION = path

|

||||

log(DEBUG, 'Found Python at: {0}'.format(path))

|

||||

|

||||

|

||||

def find_python_from_registry(location, reg=None):

|

||||

if platform.system() != 'Windows' or winreg is None:

|

||||

return None

|

||||

|

||||

if reg is None:

|

||||

path = find_python_from_registry(location, reg=winreg.HKEY_CURRENT_USER)

|

||||

if path is None:

|

||||

path = find_python_from_registry(location, reg=winreg.HKEY_LOCAL_MACHINE)

|

||||

return path

|

||||

|

||||

val = None

|

||||

sub_key = 'InstallPath'

|

||||

compiled = re.compile(r'^\d+\.\d+$')

|

||||

|

||||

try:

|

||||

with winreg.OpenKey(reg, location) as handle:

|

||||

versions = []

|

||||

try:

|

||||

for index in range(1024):

|

||||

version = winreg.EnumKey(handle, index)

|

||||

try:

|

||||

if compiled.search(version):

|

||||

versions.append(version)

|

||||

except re.error:

|

||||

pass

|

||||

except EnvironmentError:

|

||||

pass

|

||||

versions.sort(reverse=True)

|

||||

for version in versions:

|

||||

try:

|

||||

path = winreg.QueryValue(handle, version + '\\' + sub_key)

|

||||

if path is not None:

|

||||

path = find_python_in_folder(path)

|

||||

if path is not None:

|

||||

log(DEBUG, 'Found python from {reg}\\{key}\\{version}\\{sub_key}.'.format(

|

||||

reg=reg,

|

||||

key=location,

|

||||

version=version,

|

||||

sub_key=sub_key,

|

||||

))

|

||||

return path

|

||||

except WindowsError:

|

||||

log(DEBUG, 'Could not read registry value "{reg}\\{key}\\{version}\\{sub_key}".'.format(

|

||||

reg=reg,

|

||||

key=location,

|

||||

version=version,

|

||||

sub_key=sub_key,

|

||||

))

|

||||

except WindowsError:

|

||||

log(DEBUG, 'Could not read registry value "{reg}\\{key}".'.format(

|

||||

reg=reg,

|

||||

key=location,

|

||||

))

|

||||

|

||||

return val

|

||||

|

||||

|

||||

def find_python_in_folder(folder, headless=True):

|

||||

pattern = re.compile(r'\d+\.\d+')

|

||||

|

||||

path = 'python'

|

||||

if folder is not None:

|

||||

path = os.path.realpath(os.path.join(folder, 'python'))

|

||||

if headless:

|

||||

path = u(path) + u('w')

|

||||

log(DEBUG, u('Looking for Python at: {0}').format(path))

|

||||

try:

|

||||

process = Popen([path, '--version'], stdout=PIPE, stderr=STDOUT)

|

||||

output, err = process.communicate()

|

||||

output = u(output).strip()

|

||||

retcode = process.poll()

|

||||

log(DEBUG, u('Python Version Output: {0}').format(output))

|

||||

if not retcode and pattern.search(output):

|

||||

return path

|

||||

except:

|

||||

pass

|

||||

except:

|

||||

log(DEBUG, u(sys.exc_info()[1]))

|

||||

|

||||

if headless:

|

||||

path = find_python_in_folder(folder, headless=False)

|

||||

if path is not None:

|

||||

return path

|

||||

|

||||

return None

|

||||

|

||||

|

||||

@ -133,14 +342,14 @@ def obfuscate_apikey(command_list):

|

||||

apikey_index = num + 1

|

||||

break

|

||||

if apikey_index is not None and apikey_index < len(cmd):

|

||||

cmd[apikey_index] = '********-****-****-****-********' + cmd[apikey_index][-4:]

|

||||

cmd[apikey_index] = 'XXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXX' + cmd[apikey_index][-4:]

|

||||

return cmd

|

||||

|

||||

|

||||

def enough_time_passed(now, last_heartbeat, is_write):

|

||||

if now - last_heartbeat['time'] > HEARTBEAT_FREQUENCY * 60:

|

||||

def enough_time_passed(now, is_write):

|

||||

if now - LAST_HEARTBEAT['time'] > HEARTBEAT_FREQUENCY * 60:

|

||||

return True

|

||||

if is_write and now - last_heartbeat['time'] > 2:

|

||||

if is_write and now - LAST_HEARTBEAT['time'] > 2:

|

||||

return True

|

||||

return False

|

||||

|

||||

@ -184,135 +393,235 @@ def is_view_active(view):

|

||||

return False

|

||||

|

||||

|

||||

def handle_heartbeat(view, is_write=False):

|

||||

def handle_activity(view, is_write=False):

|

||||

window = view.window()

|

||||

if window is not None:

|

||||

target_file = view.file_name()

|

||||

project = window.project_data() if hasattr(window, 'project_data') else None

|

||||

folders = window.folders()

|

||||

thread = SendHeartbeatThread(target_file, view, is_write=is_write, project=project, folders=folders)

|

||||

thread.start()

|

||||

entity = view.file_name()

|

||||

if entity:

|

||||

timestamp = time.time()

|

||||

last_file = LAST_HEARTBEAT['file']

|

||||

if entity != last_file or enough_time_passed(timestamp, is_write):

|

||||

project = window.project_data() if hasattr(window, 'project_data') else None

|

||||

folders = window.folders()

|

||||

append_heartbeat(entity, timestamp, is_write, view, project, folders)

|

||||

|

||||

|

||||

class SendHeartbeatThread(threading.Thread):

|

||||

def append_heartbeat(entity, timestamp, is_write, view, project, folders):

|

||||

global LAST_HEARTBEAT

|

||||

|

||||

def __init__(self, target_file, view, is_write=False, project=None, folders=None, force=False):

|

||||

# add this heartbeat to queue

|

||||

heartbeat = {

|

||||

'entity': entity,

|

||||

'timestamp': timestamp,

|

||||

'is_write': is_write,

|

||||

'cursorpos': view.sel()[0].begin() if view.sel() else None,

|

||||

'project': project,

|

||||

'folders': folders,

|

||||

}

|

||||

HEARTBEATS.put_nowait(heartbeat)

|

||||

|

||||

# make this heartbeat the LAST_HEARTBEAT

|

||||

LAST_HEARTBEAT = {

|

||||

'file': entity,

|

||||

'time': timestamp,

|

||||

'is_write': is_write,

|

||||

}

|

||||

|

||||

# process the queue of heartbeats in the future

|

||||

seconds = 4

|

||||

set_timeout(process_queue, seconds)

|

||||

|

||||

|

||||

def process_queue():

|

||||

try:

|

||||

heartbeat = HEARTBEATS.get_nowait()

|

||||

except queue.Empty:

|

||||

return

|

||||

|

||||

has_extra_heartbeats = False

|

||||

extra_heartbeats = []

|

||||

try:

|

||||

while True:

|

||||

extra_heartbeats.append(HEARTBEATS.get_nowait())

|

||||

has_extra_heartbeats = True

|

||||

except queue.Empty:

|

||||

pass

|

||||

|

||||

thread = SendHeartbeatsThread(heartbeat)

|

||||

if has_extra_heartbeats:

|

||||

thread.add_extra_heartbeats(extra_heartbeats)

|

||||

thread.start()

|

||||

|

||||

|

||||

class SendHeartbeatsThread(threading.Thread):

|

||||

"""Non-blocking thread for sending heartbeats to api.

|

||||

"""

|

||||

|

||||

def __init__(self, heartbeat):

|

||||

threading.Thread.__init__(self)

|

||||

self.lock = LOCK

|

||||

self.target_file = target_file

|

||||

self.is_write = is_write

|

||||

self.project = project

|

||||

self.folders = folders

|

||||

self.force = force

|

||||

|

||||

self.debug = SETTINGS.get('debug')

|

||||

self.api_key = SETTINGS.get('api_key', '')

|

||||

self.ignore = SETTINGS.get('ignore', [])

|

||||

self.last_heartbeat = LAST_HEARTBEAT.copy()

|

||||

self.cursorpos = view.sel()[0].begin() if view.sel() else None

|

||||

self.view = view

|

||||

|

||||

self.heartbeat = heartbeat

|

||||

self.has_extra_heartbeats = False

|

||||

|

||||

def add_extra_heartbeats(self, extra_heartbeats):

|

||||

self.has_extra_heartbeats = True

|

||||

self.extra_heartbeats = extra_heartbeats

|

||||

|

||||

def run(self):

|

||||

with self.lock:

|

||||

if self.target_file:

|

||||

self.timestamp = time.time()

|

||||

if self.force or self.target_file != self.last_heartbeat['file'] or enough_time_passed(self.timestamp, self.last_heartbeat, self.is_write):

|

||||

self.send_heartbeat()

|

||||

"""Running in background thread."""

|

||||

|

||||

def send_heartbeat(self):

|

||||

if not self.api_key:

|

||||

print('[WakaTime] Error: missing api key.')

|

||||

return

|

||||

ua = 'sublime/%d sublime-wakatime/%s' % (ST_VERSION, __version__)

|

||||

cmd = [

|

||||

API_CLIENT,

|

||||

'--file', self.target_file,

|

||||

'--time', str('%f' % self.timestamp),

|

||||

'--plugin', ua,

|

||||

'--key', str(bytes.decode(self.api_key.encode('utf8'))),

|

||||

]

|

||||

if self.is_write:

|

||||

cmd.append('--write')

|

||||

if self.project and self.project.get('name'):

|

||||

cmd.extend(['--alternate-project', self.project.get('name')])

|

||||

elif self.folders:

|

||||

project_name = find_project_from_folders(self.folders, self.target_file)

|

||||

self.send_heartbeats()

|

||||

|

||||

def build_heartbeat(self, entity=None, timestamp=None, is_write=None,

|

||||

cursorpos=None, project=None, folders=None):

|

||||

"""Returns a dict for passing to wakatime-cli as arguments."""

|

||||

|

||||

heartbeat = {

|

||||

'entity': entity,

|

||||

'timestamp': timestamp,

|

||||

'is_write': is_write,

|

||||

}

|

||||

|

||||

if project and project.get('name'):

|

||||

heartbeat['alternate_project'] = project.get('name')

|

||||

elif folders:

|

||||

project_name = find_project_from_folders(folders, entity)

|

||||

if project_name:

|

||||

cmd.extend(['--alternate-project', project_name])

|

||||

if self.cursorpos is not None:

|

||||

cmd.extend(['--cursorpos', '{0}'.format(self.cursorpos)])

|

||||

for pattern in self.ignore:

|

||||

cmd.extend(['--ignore', pattern])

|

||||

if self.debug:

|

||||

cmd.append('--verbose')

|

||||

heartbeat['alternate_project'] = project_name

|

||||

|

||||

if cursorpos is not None:

|

||||

heartbeat['cursorpos'] = '{0}'.format(cursorpos)

|

||||

|

||||

return heartbeat

|

||||

|

||||

def send_heartbeats(self):

|

||||

if python_binary():

|

||||

cmd.insert(0, python_binary())

|

||||

heartbeat = self.build_heartbeat(**self.heartbeat)

|

||||

ua = 'sublime/%d sublime-wakatime/%s' % (ST_VERSION, __version__)

|

||||

cmd = [

|

||||

python_binary(),

|

||||

API_CLIENT,

|

||||

'--entity', heartbeat['entity'],

|

||||

'--time', str('%f' % heartbeat['timestamp']),

|

||||

'--plugin', ua,

|

||||

]

|

||||

if self.api_key:

|

||||

cmd.extend(['--key', str(bytes.decode(self.api_key.encode('utf8')))])

|

||||

if heartbeat['is_write']:

|

||||

cmd.append('--write')

|

||||

if heartbeat.get('alternate_project'):

|

||||

cmd.extend(['--alternate-project', heartbeat['alternate_project']])

|

||||

if heartbeat.get('cursorpos') is not None:

|

||||

cmd.extend(['--cursorpos', heartbeat['cursorpos']])

|

||||

for pattern in self.ignore:

|

||||

cmd.extend(['--ignore', pattern])

|

||||

if self.debug:

|

||||

print('[WakaTime] %s' % ' '.join(obfuscate_apikey(cmd)))

|

||||

if platform.system() == 'Windows':

|

||||

Popen(cmd, shell=False)

|

||||

cmd.append('--verbose')

|

||||

if self.has_extra_heartbeats:

|

||||

cmd.append('--extra-heartbeats')

|

||||

stdin = PIPE

|

||||

extra_heartbeats = [self.build_heartbeat(**x) for x in self.extra_heartbeats]

|

||||

extra_heartbeats = json.dumps(extra_heartbeats)

|

||||

else:

|

||||

with open(os.path.join(os.path.expanduser('~'), '.wakatime.log'), 'a') as stderr:

|

||||

Popen(cmd, stderr=stderr)

|

||||

self.sent()

|

||||

extra_heartbeats = None

|

||||

stdin = None

|

||||

|

||||

log(DEBUG, ' '.join(obfuscate_apikey(cmd)))

|

||||

try:

|

||||

process = Popen(cmd, stdin=stdin, stdout=PIPE, stderr=STDOUT)

|

||||

inp = None

|

||||

if self.has_extra_heartbeats:

|

||||

inp = "{0}\n".format(extra_heartbeats)

|

||||

inp = inp.encode('utf-8')

|

||||

output, err = process.communicate(input=inp)

|

||||

output = u(output)

|

||||

retcode = process.poll()

|

||||

if (not retcode or retcode == 102) and not output:

|

||||

self.sent()

|

||||

else:

|

||||

update_status_bar('Error')

|

||||

if retcode:

|

||||

log(DEBUG if retcode == 102 else ERROR, 'wakatime-core exited with status: {0}'.format(retcode))

|

||||

if output:

|

||||

log(ERROR, u('wakatime-core output: {0}').format(output))

|

||||

except:

|

||||

log(ERROR, u(sys.exc_info()[1]))

|

||||

update_status_bar('Error')

|

||||

|

||||

else:

|

||||

print('[WakaTime] Error: Unable to find python binary.')

|

||||

log(ERROR, 'Unable to find python binary.')

|

||||

update_status_bar('Error')

|

||||

|

||||

def sent(self):

|

||||

sublime.set_timeout(self.set_status_bar, 0)

|

||||

sublime.set_timeout(self.set_last_heartbeat, 0)

|

||||

update_status_bar('OK')

|

||||

|

||||

def set_status_bar(self):

|

||||

if SETTINGS.get('status_bar_message'):

|

||||

self.view.set_status('wakatime', datetime.now().strftime(SETTINGS.get('status_bar_message_fmt')))

|

||||

|

||||

def set_last_heartbeat(self):

|

||||

global LAST_HEARTBEAT

|

||||

LAST_HEARTBEAT = {

|

||||

'file': self.target_file,

|

||||

'time': self.timestamp,

|

||||

'is_write': self.is_write,

|

||||

}

|

||||

def download_python():

|

||||

thread = DownloadPython()

|

||||

thread.start()

|

||||

|

||||

|

||||

class DownloadPython(threading.Thread):

|

||||

"""Non-blocking thread for extracting embeddable Python on Windows machines.

|

||||

"""

|

||||

|

||||

def run(self):

|

||||

log(INFO, 'Downloading embeddable Python...')

|

||||

|

||||

ver = '3.5.0'

|

||||

arch = 'amd64' if platform.architecture()[0] == '64bit' else 'win32'

|

||||

url = 'https://www.python.org/ftp/python/{ver}/python-{ver}-embed-{arch}.zip'.format(

|

||||

ver=ver,

|

||||

arch=arch,

|

||||

)

|

||||

|

||||

if not os.path.exists(resources_folder()):

|

||||

os.makedirs(resources_folder())

|

||||

|

||||

zip_file = os.path.join(resources_folder(), 'python.zip')

|

||||

try:

|

||||

urllib.urlretrieve(url, zip_file)

|

||||

except AttributeError:

|

||||

urllib.request.urlretrieve(url, zip_file)

|

||||

|

||||

log(INFO, 'Extracting Python...')

|

||||

with ZipFile(zip_file) as zf:

|

||||

path = os.path.join(resources_folder(), 'python')

|

||||

zf.extractall(path)

|

||||

|

||||

try:

|

||||

os.remove(zip_file)

|

||||

except:

|

||||

pass

|

||||

|

||||

log(INFO, 'Finished extracting Python.')

|

||||

|

||||

|

||||

def plugin_loaded():

|

||||

global SETTINGS

|

||||

print('[WakaTime] Initializing WakaTime plugin v%s' % __version__)

|

||||

SETTINGS = sublime.load_settings(SETTINGS_FILE)

|

||||

|

||||

log(INFO, 'Initializing WakaTime plugin v%s' % __version__)

|

||||

update_status_bar('Initializing')

|

||||

|

||||

if not python_binary():

|

||||

print('[WakaTime] Warning: Python binary not found.')

|

||||

log(WARNING, 'Python binary not found.')

|

||||

if platform.system() == 'Windows':

|

||||

install_python()

|

||||

set_timeout(download_python, 0)

|

||||

else:

|

||||

sublime.error_message("Unable to find Python binary!\nWakaTime needs Python to work correctly.\n\nGo to https://www.python.org/downloads")

|

||||

return

|

||||

|

||||

SETTINGS = sublime.load_settings(SETTINGS_FILE)

|

||||

after_loaded()

|

||||

|

||||

|

||||

def after_loaded():

|

||||

if not prompt_api_key():

|

||||

sublime.set_timeout(after_loaded, 500)

|

||||

|

||||

|

||||

def install_python():

|

||||

print('[WakaTime] Downloading and installing python...')

|

||||

url = 'https://www.python.org/ftp/python/3.4.3/python-3.4.3.msi'

|

||||

if platform.architecture()[0] == '64bit':

|

||||

url = 'https://www.python.org/ftp/python/3.4.3/python-3.4.3.amd64.msi'

|

||||

python_msi = os.path.join(os.path.expanduser('~'), 'python.msi')

|

||||

try:

|

||||

urllib.urlretrieve(url, python_msi)

|

||||

except AttributeError:

|

||||

urllib.request.urlretrieve(url, python_msi)

|

||||

args = [

|

||||

'msiexec',

|

||||

'/i',

|

||||

python_msi,

|

||||

'/norestart',

|

||||

'/qb!',

|

||||

]

|

||||

Popen(args)

|

||||

set_timeout(after_loaded, 0.5)

|

||||

|

||||

|

||||

# need to call plugin_loaded because only ST3 will auto-call it

|

||||

@ -323,15 +632,15 @@ if ST_VERSION < 3000:

|

||||

class WakatimeListener(sublime_plugin.EventListener):

|

||||

|

||||

def on_post_save(self, view):

|

||||

handle_heartbeat(view, is_write=True)

|

||||

handle_activity(view, is_write=True)

|

||||

|

||||

def on_selection_modified(self, view):

|

||||

if is_view_active(view):

|

||||

handle_heartbeat(view)

|

||||

handle_activity(view)

|

||||

|

||||

def on_modified(self, view):

|

||||

if is_view_active(view):

|

||||

handle_heartbeat(view)

|

||||

handle_activity(view)

|

||||

|

||||

|

||||

class WakatimeDashboardCommand(sublime_plugin.ApplicationCommand):

|

||||

|

||||

@ -19,5 +19,5 @@

|

||||

"status_bar_message": true,

|

||||

|

||||

// Status bar message format.

|

||||

"status_bar_message_fmt": "WakaTime active %I:%M %p"

|

||||

"status_bar_message_fmt": "WakaTime {status} %I:%M %p"

|

||||

}

|

||||

|

||||

@ -1,9 +1,9 @@

|

||||

__title__ = 'wakatime'

|

||||

__description__ = 'Common interface to the WakaTime api.'

|

||||

__url__ = 'https://github.com/wakatime/wakatime'

|

||||

__version_info__ = ('4', '1', '2')

|

||||

__version_info__ = ('6', '0', '7')

|

||||

__version__ = '.'.join(__version_info__)

|

||||

__author__ = 'Alan Hamlett'

|

||||

__author_email__ = 'alan@wakatime.com'

|

||||

__license__ = 'BSD'

|

||||

__copyright__ = 'Copyright 2014 Alan Hamlett'

|

||||

__copyright__ = 'Copyright 2016 Alan Hamlett'

|

||||

|

||||

@ -14,4 +14,4 @@

|

||||

__all__ = ['main']

|

||||

|

||||

|

||||

from .base import main

|

||||

from .main import execute

|

||||

|

||||

@ -1,443 +0,0 @@

|

||||

# -*- coding: utf-8 -*-

|

||||

"""

|

||||

wakatime.base

|

||||

~~~~~~~~~~~~~

|

||||

|

||||

wakatime module entry point.

|

||||

|

||||

:copyright: (c) 2013 Alan Hamlett.

|

||||

:license: BSD, see LICENSE for more details.

|

||||

"""

|

||||

|

||||

from __future__ import print_function

|

||||

|

||||

import base64

|

||||

import logging

|

||||

import os

|

||||

import platform

|

||||

import re

|

||||

import sys

|

||||

import time

|

||||

import traceback

|

||||

import socket

|

||||

try:

|

||||

import ConfigParser as configparser

|

||||

except ImportError: # pragma: nocover

|

||||

import configparser

|

||||

|

||||

sys.path.insert(0, os.path.dirname(os.path.dirname(os.path.abspath(__file__))))

|

||||

sys.path.insert(0, os.path.join(os.path.dirname(os.path.abspath(__file__)), 'packages'))

|

||||

|

||||

from .__about__ import __version__

|

||||

from .compat import u, open, is_py3

|

||||

from .logger import setup_logging

|

||||

from .offlinequeue import Queue

|

||||

from .packages import argparse

|

||||

from .packages.requests.exceptions import RequestException

|

||||

from .project import get_project_info

|

||||

from .session_cache import SessionCache

|

||||

from .stats import get_file_stats

|

||||

try:

|

||||

from .packages import simplejson as json # pragma: nocover

|

||||

except (ImportError, SyntaxError):

|

||||

import json # pragma: nocover

|

||||

try:

|

||||

from .packages import tzlocal # pragma: nocover

|

||||

except: # pragma: nocover

|

||||

from .packages import tzlocal3 as tzlocal # pragma: nocover

|

||||

|

||||

|

||||

log = logging.getLogger('WakaTime')

|

||||

|

||||

|

||||

class FileAction(argparse.Action):

|

||||

|

||||

def __call__(self, parser, namespace, values, option_string=None):

|

||||

values = os.path.realpath(values)

|

||||

setattr(namespace, self.dest, values)

|

||||

|

||||

|

||||

def parseConfigFile(configFile=None):

|

||||

"""Returns a configparser.SafeConfigParser instance with configs

|

||||

read from the config file. Default location of the config file is

|

||||

at ~/.wakatime.cfg.

|

||||

"""

|

||||

|

||||

if not configFile:

|

||||

configFile = os.path.join(os.path.expanduser('~'), '.wakatime.cfg')

|

||||

|

||||

configs = configparser.SafeConfigParser()

|

||||

try:

|

||||

with open(configFile, 'r', encoding='utf-8') as fh:

|

||||

try:

|

||||

configs.readfp(fh)

|

||||

except configparser.Error:

|

||||

print(traceback.format_exc())

|

||||

return None

|

||||

except IOError:

|

||||

print(u('Error: Could not read from config file {0}').format(u(configFile)))

|

||||

return configs

|

||||

|

||||

|

||||

def parseArguments():

|

||||

"""Parse command line arguments and configs from ~/.wakatime.cfg.

|

||||

Command line arguments take precedence over config file settings.

|

||||

Returns instances of ArgumentParser and SafeConfigParser.

|

||||

"""

|

||||

|

||||

# define supported command line arguments

|

||||

parser = argparse.ArgumentParser(

|

||||

description='Common interface for the WakaTime api.')

|

||||

parser.add_argument('--file', dest='targetFile', metavar='file',

|

||||

action=FileAction, required=True,

|

||||

help='absolute path to file for current heartbeat')

|

||||

parser.add_argument('--key', dest='key',

|

||||

help='your wakatime api key; uses api_key from '+

|

||||

'~/.wakatime.conf by default')

|

||||

parser.add_argument('--write', dest='isWrite',

|

||||

action='store_true',

|

||||

help='when set, tells api this heartbeat was triggered from '+

|

||||

'writing to a file')

|

||||

parser.add_argument('--plugin', dest='plugin',

|

||||

help='optional text editor plugin name and version '+

|

||||

'for User-Agent header')

|

||||

parser.add_argument('--time', dest='timestamp', metavar='time',

|

||||

type=float,

|

||||

help='optional floating-point unix epoch timestamp; '+

|

||||

'uses current time by default')

|

||||

parser.add_argument('--lineno', dest='lineno',

|

||||

help='optional line number; current line being edited')

|

||||

parser.add_argument('--cursorpos', dest='cursorpos',

|

||||

help='optional cursor position in the current file')

|

||||

parser.add_argument('--notfile', dest='notfile', action='store_true',

|

||||

help='when set, will accept any value for the file. for example, '+

|

||||

'a domain name or other item you want to log time towards.')

|

||||

parser.add_argument('--proxy', dest='proxy',

|

||||

help='optional https proxy url; for example: '+

|

||||

'https://user:pass@localhost:8080')

|

||||

parser.add_argument('--project', dest='project',

|

||||

help='optional project name')

|

||||

parser.add_argument('--alternate-project', dest='alternate_project',

|

||||

help='optional alternate project name; auto-discovered project takes priority')

|

||||

parser.add_argument('--hostname', dest='hostname', help='hostname of current machine.')

|

||||

parser.add_argument('--disableoffline', dest='offline',

|

||||

action='store_false',

|

||||

help='disables offline time logging instead of queuing logged time')

|

||||

parser.add_argument('--hidefilenames', dest='hidefilenames',

|

||||

action='store_true',

|

||||

help='obfuscate file names; will not send file names to api')

|

||||

parser.add_argument('--exclude', dest='exclude', action='append',

|

||||

help='filename patterns to exclude from logging; POSIX regex '+

|

||||

'syntax; can be used more than once')

|

||||

parser.add_argument('--include', dest='include', action='append',

|

||||

help='filename patterns to log; when used in combination with '+

|

||||

'--exclude, files matching include will still be logged; '+

|

||||

'POSIX regex syntax; can be used more than once')

|

||||

parser.add_argument('--ignore', dest='ignore', action='append',

|

||||

help=argparse.SUPPRESS)

|

||||

parser.add_argument('--logfile', dest='logfile',

|

||||

help='defaults to ~/.wakatime.log')

|

||||

parser.add_argument('--apiurl', dest='api_url',

|

||||

help='heartbeats api url; for debugging with a local server')

|

||||

parser.add_argument('--config', dest='config',

|

||||

help='defaults to ~/.wakatime.conf')

|

||||

parser.add_argument('--verbose', dest='verbose', action='store_true',

|

||||

help='turns on debug messages in log file')

|

||||

parser.add_argument('--version', action='version', version=__version__)

|

||||

|

||||

# parse command line arguments

|

||||

args = parser.parse_args()

|

||||

|

||||

# use current unix epoch timestamp by default

|

||||

if not args.timestamp:

|

||||

args.timestamp = time.time()

|

||||

|

||||

# parse ~/.wakatime.cfg file

|

||||

configs = parseConfigFile(args.config)

|

||||

if configs is None:

|

||||

return args, configs

|

||||

|

||||

# update args from configs

|

||||

if not args.key:

|

||||

default_key = None

|

||||

if configs.has_option('settings', 'api_key'):

|

||||

default_key = configs.get('settings', 'api_key')

|

||||

elif configs.has_option('settings', 'apikey'):

|

||||

default_key = configs.get('settings', 'apikey')

|

||||

if default_key:

|

||||

args.key = default_key

|

||||

else:

|

||||

parser.error('Missing api key')

|

||||

if not args.exclude:

|

||||

args.exclude = []

|

||||

if configs.has_option('settings', 'ignore'):

|

||||

try:

|

||||

for pattern in configs.get('settings', 'ignore').split("\n"):

|

||||

if pattern.strip() != '':

|

||||

args.exclude.append(pattern)

|

||||

except TypeError:

|

||||

pass

|

||||

if configs.has_option('settings', 'exclude'):

|

||||

try:

|

||||

for pattern in configs.get('settings', 'exclude').split("\n"):

|

||||

if pattern.strip() != '':

|

||||

args.exclude.append(pattern)

|

||||

except TypeError:

|

||||

pass

|

||||

if not args.include:

|

||||

args.include = []

|

||||

if configs.has_option('settings', 'include'):

|

||||

try:

|

||||

for pattern in configs.get('settings', 'include').split("\n"):

|

||||

if pattern.strip() != '':

|

||||

args.include.append(pattern)

|

||||

except TypeError:

|

||||

pass

|

||||

if args.offline and configs.has_option('settings', 'offline'):

|

||||

args.offline = configs.getboolean('settings', 'offline')

|

||||

if not args.hidefilenames and configs.has_option('settings', 'hidefilenames'):

|

||||

args.hidefilenames = configs.getboolean('settings', 'hidefilenames')

|

||||

if not args.proxy and configs.has_option('settings', 'proxy'):

|

||||

args.proxy = configs.get('settings', 'proxy')

|

||||

if not args.verbose and configs.has_option('settings', 'verbose'):

|

||||

args.verbose = configs.getboolean('settings', 'verbose')

|

||||

if not args.verbose and configs.has_option('settings', 'debug'):

|

||||

args.verbose = configs.getboolean('settings', 'debug')

|

||||

if not args.logfile and configs.has_option('settings', 'logfile'):

|

||||

args.logfile = configs.get('settings', 'logfile')

|

||||

if not args.api_url and configs.has_option('settings', 'api_url'):

|

||||

args.api_url = configs.get('settings', 'api_url')

|

||||

|

||||

return args, configs

|

||||

|

||||

|

||||

def should_exclude(fileName, include, exclude):

|

||||

if fileName is not None and fileName.strip() != '':

|

||||

try:

|

||||

for pattern in include:

|

||||

try:

|

||||

compiled = re.compile(pattern, re.IGNORECASE)

|

||||

if compiled.search(fileName):

|

||||

return False

|

||||

except re.error as ex:

|

||||

log.warning(u('Regex error ({msg}) for include pattern: {pattern}').format(

|

||||

msg=u(ex),

|

||||

pattern=u(pattern),

|

||||

))

|

||||

except TypeError: # pragma: nocover

|

||||

pass

|

||||

try:

|

||||

for pattern in exclude:

|

||||

try:

|

||||

compiled = re.compile(pattern, re.IGNORECASE)

|

||||

if compiled.search(fileName):

|

||||

return pattern

|

||||

except re.error as ex:

|

||||

log.warning(u('Regex error ({msg}) for exclude pattern: {pattern}').format(

|

||||

msg=u(ex),

|

||||

pattern=u(pattern),

|

||||

))

|

||||

except TypeError: # pragma: nocover

|

||||

pass

|

||||

return False

|

||||

|

||||

|

||||

def get_user_agent(plugin):

|

||||

ver = sys.version_info

|

||||

python_version = '%d.%d.%d.%s.%d' % (ver[0], ver[1], ver[2], ver[3], ver[4])

|

||||

user_agent = u('wakatime/{ver} ({platform}) Python{py_ver}').format(

|

||||

ver=u(__version__),

|

||||

platform=u(platform.platform()),

|

||||

py_ver=python_version,

|

||||

)

|

||||

if plugin:

|

||||

user_agent = u('{user_agent} {plugin}').format(

|

||||

user_agent=user_agent,

|

||||

plugin=u(plugin),

|

||||

)

|

||||

else:

|

||||

user_agent = u('{user_agent} Unknown/0').format(

|

||||

user_agent=user_agent,

|

||||

)

|

||||

return user_agent

|

||||

|

||||

|

||||

def send_heartbeat(project=None, branch=None, hostname=None, stats={}, key=None, targetFile=None,

|

||||

timestamp=None, isWrite=None, plugin=None, offline=None, notfile=False,

|

||||

hidefilenames=None, proxy=None, api_url=None, **kwargs):

|

||||

"""Sends heartbeat as POST request to WakaTime api server.

|

||||

"""

|

||||

|

||||

if not api_url:

|

||||

api_url = 'https://wakatime.com/api/v1/heartbeats'

|

||||

log.debug('Sending heartbeat to api at %s' % api_url)

|

||||

data = {

|

||||

'time': timestamp,

|

||||

'entity': targetFile,

|

||||

'type': 'file',

|

||||

}

|

||||

if hidefilenames and targetFile is not None and not notfile:

|

||||

extension = u(os.path.splitext(data['entity'])[1])

|

||||

data['entity'] = u('HIDDEN{0}').format(extension)

|

||||

if stats.get('lines'):

|

||||

data['lines'] = stats['lines']

|

||||

if stats.get('language'):

|

||||

data['language'] = stats['language']

|

||||

if stats.get('dependencies'):

|

||||

data['dependencies'] = stats['dependencies']

|

||||

if stats.get('lineno'):

|

||||

data['lineno'] = stats['lineno']

|

||||

if stats.get('cursorpos'):

|

||||

data['cursorpos'] = stats['cursorpos']

|

||||

if isWrite:

|

||||

data['is_write'] = isWrite

|

||||

if project:

|

||||

data['project'] = project

|

||||

if branch:

|

||||

data['branch'] = branch

|

||||

log.debug(data)

|

||||

|

||||

# setup api request

|

||||

request_body = json.dumps(data)

|

||||

api_key = u(base64.b64encode(str.encode(key) if is_py3 else key))

|

||||

auth = u('Basic {api_key}').format(api_key=api_key)

|

||||

headers = {

|

||||

'User-Agent': get_user_agent(plugin),

|

||||

'Content-Type': 'application/json',

|

||||

'Accept': 'application/json',

|

||||

'Authorization': auth,

|

||||

}

|

||||

if hostname:

|

||||

headers['X-Machine-Name'] = hostname

|

||||

proxies = {}

|

||||

if proxy:

|

||||

proxies['https'] = proxy

|

||||

|

||||

# add Olson timezone to request

|

||||

try:

|

||||

tz = tzlocal.get_localzone()

|

||||

except:

|

||||

tz = None

|

||||

if tz:

|

||||

headers['TimeZone'] = u(tz.zone)

|

||||

|

||||

session_cache = SessionCache()

|

||||

session = session_cache.get()

|

||||

|

||||

# log time to api

|

||||

response = None

|

||||

try:

|

||||

response = session.post(api_url, data=request_body, headers=headers,

|

||||

proxies=proxies)

|

||||

except RequestException:

|

||||

exception_data = {

|

||||

sys.exc_info()[0].__name__: u(sys.exc_info()[1]),

|

||||

}

|

||||

if log.isEnabledFor(logging.DEBUG):

|

||||

exception_data['traceback'] = traceback.format_exc()

|

||||

if offline:

|

||||

queue = Queue()

|

||||

queue.push(data, json.dumps(stats), plugin)

|

||||

if log.isEnabledFor(logging.DEBUG):

|

||||

log.warn(exception_data)

|

||||

else:

|

||||

log.error(exception_data)

|

||||

else:

|

||||

response_code = response.status_code if response is not None else None

|

||||

response_content = response.text if response is not None else None

|

||||

if response_code == 201:

|

||||

log.debug({

|

||||

'response_code': response_code,

|

||||

})

|

||||

session_cache.save(session)

|

||||

return True

|

||||

if offline:

|

||||

if response_code != 400:

|

||||

queue = Queue()

|

||||

queue.push(data, json.dumps(stats), plugin)

|

||||

if response_code == 401:

|

||||

log.error({

|

||||

'response_code': response_code,

|

||||

'response_content': response_content,

|

||||

})

|

||||

elif log.isEnabledFor(logging.DEBUG):

|

||||

log.warn({

|

||||

'response_code': response_code,

|

||||

'response_content': response_content,

|

||||

})

|

||||

else:

|

||||

log.error({

|

||||

'response_code': response_code,

|

||||

'response_content': response_content,

|

||||

})

|

||||

else:

|

||||

log.error({

|

||||

'response_code': response_code,

|

||||

'response_content': response_content,

|

||||

})

|

||||

session_cache.delete()

|

||||

return False

|

||||

|

||||

|

||||

def main(argv):

|

||||

sys.argv = ['wakatime'] + argv

|

||||

|

||||

args, configs = parseArguments()

|

||||

if configs is None:

|

||||

return 103 # config file parsing error

|

||||

|

||||

setup_logging(args, __version__)

|

||||

|

||||

exclude = should_exclude(args.targetFile, args.include, args.exclude)

|

||||

if exclude is not False:

|

||||

log.debug(u('File not logged because matches exclude pattern: {pattern}').format(

|

||||

pattern=u(exclude),

|

||||

))

|

||||

return 0

|

||||

|

||||

if os.path.isfile(args.targetFile) or args.notfile:

|

||||

|

||||

stats = get_file_stats(args.targetFile, notfile=args.notfile,

|

||||

lineno=args.lineno, cursorpos=args.cursorpos)

|

||||

|

||||

project, branch = None, None

|

||||

if not args.notfile:

|

||||

project, branch = get_project_info(configs=configs, args=args)

|

||||

|

||||

kwargs = vars(args)

|

||||

kwargs['project'] = project

|

||||

kwargs['branch'] = branch

|

||||

kwargs['stats'] = stats

|

||||

kwargs['hostname'] = args.hostname or socket.gethostname()

|

||||

|

||||

if send_heartbeat(**kwargs):

|

||||

queue = Queue()

|

||||

while True:

|

||||

heartbeat = queue.pop()

|

||||

if heartbeat is None:

|

||||

break

|

||||

sent = send_heartbeat(

|

||||

project=heartbeat['project'],

|

||||

targetFile=heartbeat['file'],

|

||||

timestamp=heartbeat['time'],

|

||||

branch=heartbeat['branch'],

|

||||

hostname=kwargs['hostname'],

|

||||

stats=json.loads(heartbeat['stats']),

|

||||

key=args.key,

|

||||

isWrite=heartbeat['is_write'],

|

||||

plugin=heartbeat['plugin'],

|

||||

offline=args.offline,

|

||||

hidefilenames=args.hidefilenames,

|

||||

notfile=args.notfile,

|

||||

proxy=args.proxy,

|

||||

api_url=args.api_url,

|

||||

)

|

||||

if not sent:

|

||||

break

|

||||

return 0 # success

|

||||

|

||||

return 102 # api error

|

||||

|

||||

else:

|

||||

log.debug('File does not exist; ignoring this heartbeat.')

|

||||

return 0

|

||||

@ -32,4 +32,4 @@ except (TypeError, ImportError):

|

||||

|

||||

|

||||

if __name__ == '__main__':

|

||||

sys.exit(wakatime.main(sys.argv[1:]))

|

||||

sys.exit(wakatime.execute(sys.argv[1:]))

|

||||

|

||||

@ -26,9 +26,12 @@ if is_py2: # pragma: nocover

|

||||

return text.decode('utf-8')

|

||||

except:

|

||||

try:

|

||||

return unicode(text)

|

||||

return text.decode(sys.getdefaultencoding())

|

||||

except:

|

||||

return text

|

||||

try:

|

||||

return unicode(text)

|

||||

except:

|

||||

return text

|

||||

open = codecs.open

|

||||

basestring = basestring

|

||||

|

||||

@ -39,8 +42,17 @@ elif is_py3: # pragma: nocover

|

||||

if text is None:

|

||||

return None

|

||||

if isinstance(text, bytes):

|

||||

return text.decode('utf-8')

|

||||

return str(text)

|

||||

try:

|

||||

return text.decode('utf-8')

|

||||

except:

|

||||

try:

|

||||

return text.decode(sys.getdefaultencoding())

|

||||

except:

|

||||

pass

|

||||

try:

|

||||

return str(text)

|

||||

except:

|

||||

return text

|

||||

open = open

|

||||

basestring = (str, bytes)

|

||||

|

||||

|

||||

18

packages/wakatime/constants.py

Normal file

18

packages/wakatime/constants.py

Normal file

@ -0,0 +1,18 @@

|

||||

# -*- coding: utf-8 -*-

|

||||

"""

|

||||

wakatime.constants

|

||||

~~~~~~~~~~~~~~~~~~

|

||||

|

||||