Mirror of https://github.com/wakatime/sublime-wakatime.git (synced 2023-08-10 21:13:02 +03:00)

Compare commits: 109 commits

| SHA1 |
|---|
| aba72b0f1e |
| 5b9d86a57d |
| fa40874635 |
| 6d4a4cf9eb |
| f628b8dd11 |
| f932ee9fc6 |
| 2f14009279 |
| 453d96bf9c |
| 9de153f156 |
| dcc782338d |
| 9d0dba988a |
| e76f2e514e |
| 224f7cd82a |
| 3cce525a84 |
| ce885501ad |
| c9448a9a19 |
| 04f8c61ebc |
| 04a4630024 |
| 02138220fd |
| d0b162bdd8 |
| 1b8895cd38 |
| 938bbb73d1 |
| 008fdc6b49 |
| a788625dd0 |
| bcbce681c3 |
| 35299db832 |
| eb7814624c |
| 1c092b2fd8 |
| 507ef95f71 |
| 9777bc7788 |
| 20b78defa6 |
| 8cb1c557d9 |
| 20a1965f13 |
| 0b802a554e |
| 30186c9b2c |
| 311a0b5309 |
| b7602d89fb |
| 305de46e32 |
| c574234927 |
| a69c50f470 |
| f4b40089f3 |
| 08394357b7 |
| 205d4eb163 |
| c4c27e4e9e |
| 9167eb2558 |
| eaa3bb5180 |
| 7755971d11 |
| 7634be5446 |
| 5e17ad88f6 |
| 24d0f65116 |
| a326046733 |
| 9bab00fd8b |
| b4a13a48b9 |
| 21601f9688 |
| 4c3ec87341 |
| b149d7fc87 |
| 52e6107c6e |
| b340637331 |
| 044867449a |
| 9e3f438823 |
| 887d55c3f3 |
| 19d54f3310 |
| 514a8762eb |
| 957c74d226 |
| 7b0432d6ff |
| 09754849be |
| 25ad48a97a |
| 3b2520afa9 |
| 77c2041ad3 |
| 8af3b53937 |
| 5ef2e6954e |
| ca94272de5 |
| f19a448d95 |
| e178765412 |
| 6a7de84b9c |
| 48810f2977 |
| 260eedb31d |
| 02e2bfcad2 |
| f14ece63f3 |
| cb7f786ec8 |
| ab8711d0b1 |
| 2354be358c |
| 443215bd90 |
| c64f125dc4 |
| 050b14fb53 |
| c7efc33463 |
| d0ddbed006 |
| 3ce8f388ab |
| 90731146f9 |
| e1ab92be6d |
| 8b59e46c64 |
| 006341eb72 |
| b54e0e13f6 |
| 835c7db864 |
| 53e8bb04e9 |
| 4aa06e3829 |
| 297f65733f |
| 5ba5e6d21b |
| 32eadda81f |
| c537044801 |
| a97792c23c |
| 4223f3575f |
| 284cdf3ce4 |
| 27afc41bf4 |
| 1fdda0d64a |
| c90a4863e9 |
| 94343e5b07 |
| 03acea6e25 |
| 77594700bd |

HISTORY.rst (261 changes)

@@ -3,6 +3,267 @@ History

-------

7.0.22 (2017-06-08)
++++++++++++++++++

- Upgrade wakatime-cli to v8.0.3.
- Improve Matlab language detection.

7.0.21 (2017-05-24)
++++++++++++++++++

- Upgrade wakatime-cli to v8.0.2.
- Only treat proxy string as NTLM proxy after being unable to connect with HTTPS and SOCKS proxies.
- Support running automated tests on Linux, OS X, and Windows.
- Ability to disable SSL cert verification. wakatime/wakatime#90
- Disable line count stats for files larger than 2MB to improve performance.
- Print error saying Python needs upgrading when requests can't be imported.

7.0.20 (2017-04-10)
++++++++++++++++++

- Fix install instructions formatting.

7.0.19 (2017-04-10)
++++++++++++++++++

- Remove /var/www/ from default ignored folders.

7.0.18 (2017-03-16)
++++++++++++++++++

- Upgrade wakatime-cli to v8.0.0.
- No longer create the ~/.wakatime.cfg file, since only Sublime settings are used.

7.0.17 (2017-03-01)
++++++++++++++++++

- Upgrade wakatime-cli to v7.0.4.

7.0.16 (2017-02-20)
++++++++++++++++++

- Upgrade wakatime-cli to v7.0.2.

7.0.15 (2017-02-13)
++++++++++++++++++

- Upgrade wakatime-cli to v6.2.2.
- Upgrade pygments library to v2.2.0 for improved language detection.

7.0.14 (2017-02-08)
++++++++++++++++++

- Upgrade wakatime-cli to v6.2.1.
- Allow boolean or list of regex patterns for hidefilenames config setting.

7.0.13 (2016-11-11)
++++++++++++++++++

- Support old Sublime Text with Python 2.6.
- Fix bug that prevented reading default api key from existing config file.

7.0.12 (2016-10-24)
++++++++++++++++++

- Upgrade wakatime-cli to v6.2.0.
- Exit with status code 104 when api key is missing or invalid. Exit with status code 103 when config file is missing or invalid.
- New WAKATIME_HOME env variable for setting path to config and log files.
- Improve debug warning message from unsupported dependency parsers.

7.0.11 (2016-09-23)
++++++++++++++++++

- Handle UnicodeDecodeError when logging. Related to #68.

7.0.10 (2016-09-22)
++++++++++++++++++

- Handle UnicodeDecodeError when looking for python. Fixes #68.
- Upgrade wakatime-cli to v6.0.9.

7.0.9 (2016-09-02)
++++++++++++++++++

- Upgrade wakatime-cli to v6.0.8.

7.0.8 (2016-07-21)
++++++++++++++++++

- Upgrade wakatime-cli to master version to fix debug logging encoding bug.

7.0.7 (2016-07-06)
++++++++++++++++++

- Upgrade wakatime-cli to v6.0.7.
- Handle unknown exceptions from requests library by deleting cached session object because it could be from a previous conflicting version.
- New hostname setting in config file to set machine hostname. Hostname argument takes priority over hostname from config file.
- Prevent logging unrelated exception when logging tracebacks.
- Use correct namespace for pygments.lexers.ClassNotFound exception so it is caught when dependency detection is not available for a language.

7.0.6 (2016-06-13)
++++++++++++++++++

- Upgrade wakatime-cli to v6.0.5.
- Upgrade pygments to v2.1.3 for better language coverage.

7.0.5 (2016-06-08)
++++++++++++++++++

- Upgrade wakatime-cli to master version to fix bug in urllib3 package causing unhandled retry exceptions.
- Prevent tracking git branch with detached head.

7.0.4 (2016-05-21)
++++++++++++++++++

- Upgrade wakatime-cli to v6.0.3.
- Upgrade requests dependency to v2.10.0.
- Support for SOCKS proxies.

7.0.3 (2016-05-16)
++++++++++++++++++

- Upgrade wakatime-cli to v6.0.2.
- Prevent popup on Mac when xcode-tools is not installed.

7.0.2 (2016-04-29)
++++++++++++++++++

- Prevent implicit unicode decoding from string format when logging output from Python version check.

7.0.1 (2016-04-28)
++++++++++++++++++

- Upgrade wakatime-cli to v6.0.1.
- Fix bug which prevented plugin from being sent with extra heartbeats.

7.0.0 (2016-04-28)

|

||||

++++++++++++++++++

|

||||

|

||||

- Queue heartbeats and send to wakatime-cli after 4 seconds.

|

||||

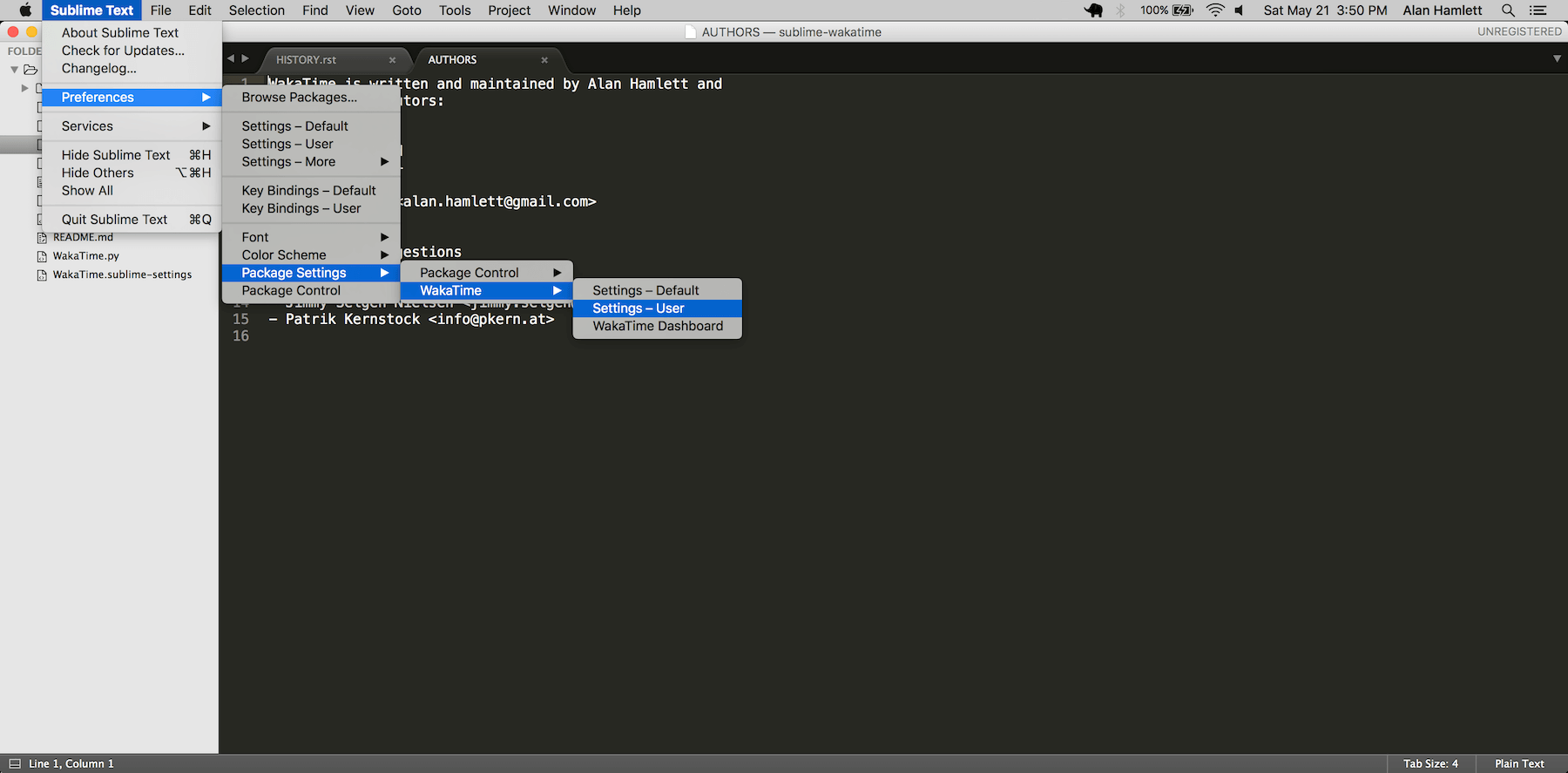

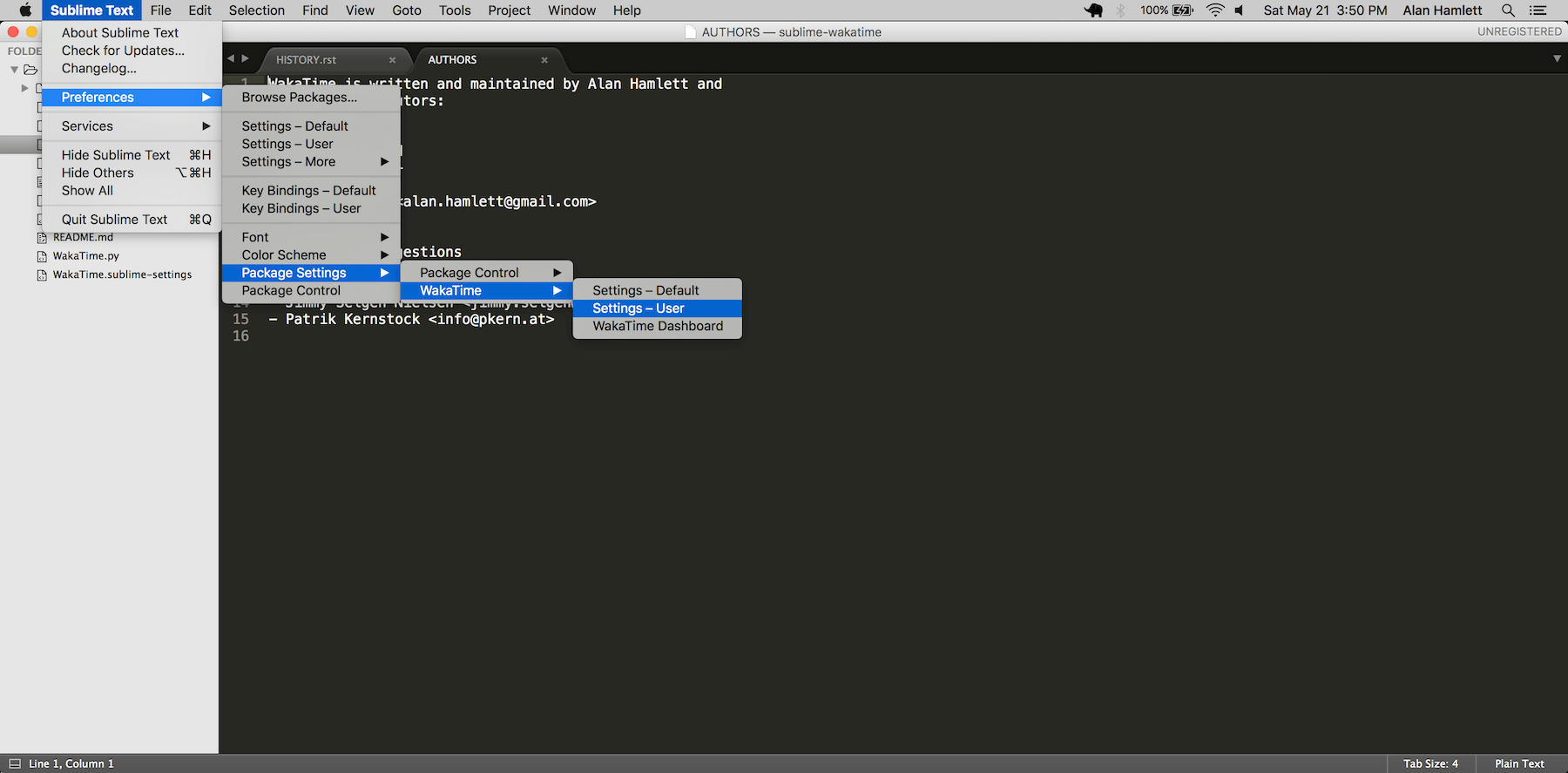

- Nest settings menu under Package Settings.

|

||||

- Upgrade wakatime-cli to v6.0.0.

|

||||

- Increase default network timeout to 60 seconds when sending heartbeats to

|

||||

the api.

|

||||

- New --extra-heartbeats command line argument for sending a JSON array of

|

||||

extra queued heartbeats to STDIN.

|

||||

- Change --entitytype command line argument to --entity-type.

|

||||

- No longer allowing --entity-type of url.

|

||||

- Support passing an alternate language to cli to be used when a language can

|

||||

not be guessed from the code file.

|

||||

|

||||

|

||||

6.0.8 (2016-04-18)
++++++++++++++++++

- Upgrade wakatime-cli to v5.0.0.
- Support regex patterns in projectmap config section for renaming projects.
- Upgrade pytz to v2016.3.
- Upgrade tzlocal to v1.2.2.

6.0.7 (2016-03-11)
++++++++++++++++++

- Fix bug causing RuntimeError when finding Python location.

6.0.6 (2016-03-06)
++++++++++++++++++

- upgrade wakatime-cli to v4.1.13
- encode TimeZone as utf-8 before adding to headers
- encode X-Machine-Name as utf-8 before adding to headers

6.0.5 (2016-03-06)
++++++++++++++++++

- upgrade wakatime-cli to v4.1.11
- encode machine hostname as Unicode when adding to X-Machine-Name header

6.0.4 (2016-01-15)
++++++++++++++++++

- fix UnicodeDecodeError on ST2 with non-English locale

6.0.3 (2016-01-11)
++++++++++++++++++

- upgrade wakatime-cli core to v4.1.10
- accept 201 or 202 response codes as success from api
- upgrade requests package to v2.9.1

6.0.2 (2016-01-06)
++++++++++++++++++

- upgrade wakatime-cli core to v4.1.9
- improve C# dependency detection
- correctly log exception tracebacks
- log all unknown exceptions to wakatime.log file
- disable urllib3 SSL warning from every request
- detect dependencies from golang files
- use api.wakatime.com for sending heartbeats

6.0.1 (2016-01-01)
++++++++++++++++++

- use embedded python if system python is broken, or doesn't output a version number
- log output from wakatime-cli in ST console when in debug mode

6.0.0 (2015-12-01)
++++++++++++++++++

- use embeddable Python instead of installing on Windows

5.0.1 (2015-10-06)
++++++++++++++++++

- look for python in system PATH again

5.0.0 (2015-10-02)
++++++++++++++++++

- improve logging with levels and log function
- switch registry warnings to debug log level

4.0.20 (2015-10-01)
++++++++++++++++++
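Entry 7.0.14 above says the `hidefilenames` setting accepts either a boolean or a list of regex patterns. The sketch below shows one way such a setting could be interpreted. The helper name `should_hide_filename`, matching each pattern with `re.search` against the full path, and skipping invalid patterns are all assumptions for illustration, not wakatime-cli's actual implementation.

```python
import re
from typing import List, Union


def should_hide_filename(setting: Union[bool, List[str]], path: str) -> bool:
    """Hypothetical helper: decide whether the file name at `path` is hidden.

    A boolean applies to every file. A list is treated as regex patterns,
    and the name is hidden if any pattern matches somewhere in `path`.
    """
    if isinstance(setting, bool):
        return setting
    for pattern in setting:
        try:
            if re.search(pattern, path):
                return True
        except re.error:
            # Assumption: an invalid pattern is skipped rather than fatal.
            continue
    return False


if __name__ == "__main__":
    print(should_hide_filename(True, "/home/me/project/app.py"))
    print(should_hide_filename([r"/secret/"], "/home/me/secret/keys.py"))
```

Treating a bare boolean as "all or nothing" keeps the old `true`/`false` configs working, while a list lets users hide only sensitive paths.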

@@ -6,24 +6,37 @@

"children":
[
{
"caption": "WakaTime",
"mnemonic": "W",
"id": "wakatime-settings",
"caption": "Package Settings",
"mnemonic": "P",
"id": "package-settings",
"children":
[
{
"command": "open_file", "args":
{
"file": "${packages}/WakaTime/WakaTime.sublime-settings"
},
"caption": "Settings – Default"
},
{
"command": "open_file", "args":
{
"file": "${packages}/User/WakaTime.sublime-settings"
},
"caption": "Settings – User"
"caption": "WakaTime",
"mnemonic": "W",
"id": "wakatime-settings",
"children":
[
{
"command": "open_file", "args":
{
"file": "${packages}/WakaTime/WakaTime.sublime-settings"
},
"caption": "Settings – Default"
},
{
"command": "open_file", "args":
{
"file": "${packages}/User/WakaTime.sublime-settings"
},
"caption": "Settings – User"
},
{
"command": "wakatime_dashboard",
"args": {},
"caption": "WakaTime Dashboard"
}
]
}
]
}

46

README.md

46

README.md

@ -1,43 +1,59 @@

|

||||

sublime-wakatime

|

||||

================

|

||||

|

||||

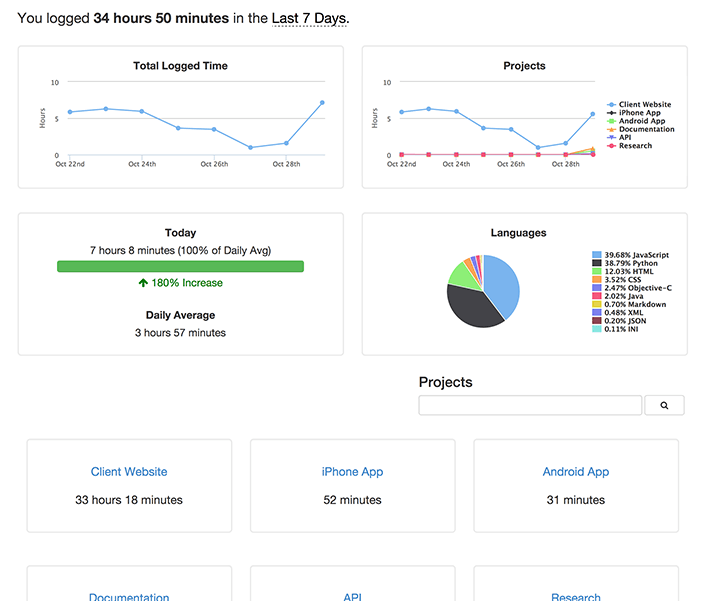

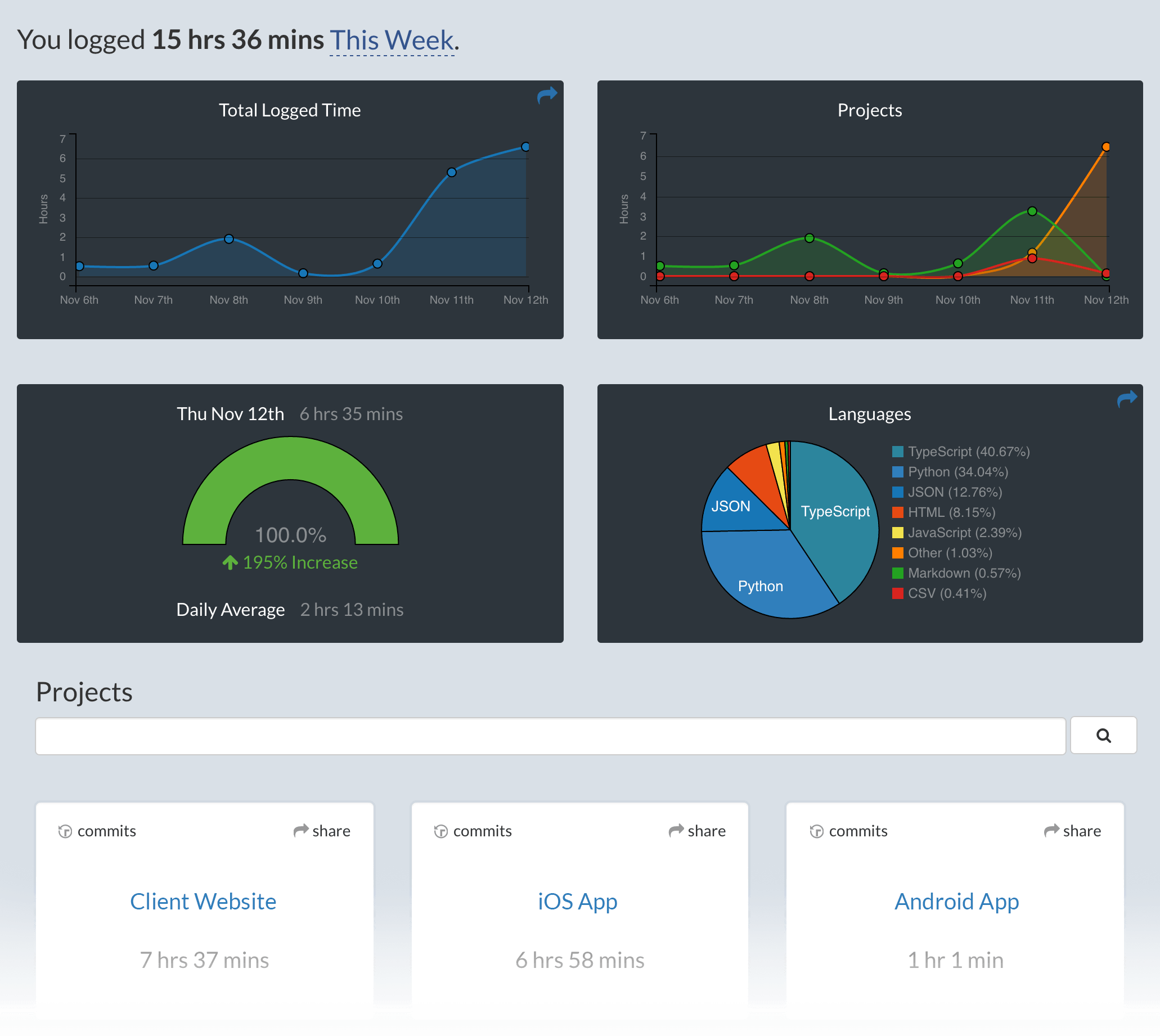

Sublime Text 2 & 3 plugin to quantify your coding using https://wakatime.com/.

|

||||

Metrics, insights, and time tracking automatically generated from your programming activity.

|

||||

|

||||

|

||||

Installation

|

||||

------------

|

||||

|

||||

Heads Up! For Sublime Text 2 on Windows & Linux, WakaTime depends on [Python](http://www.python.org/getit/) being installed to work correctly.

|

||||

|

||||

1. Install [Package Control](https://packagecontrol.io/installation).

|

||||

|

||||

2. Using [Package Control](https://packagecontrol.io/docs/usage):

|

||||

2. In Sublime, press `ctrl+shift+p`(Windows, Linux) or `cmd+shift+p`(OS X).

|

||||

|

||||

a) Inside Sublime, press `ctrl+shift+p`(Windows, Linux) or `cmd+shift+p`(OS X).

|

||||

3. Type `install`, then press `enter` with `Package Control: Install Package` selected.

|

||||

|

||||

b) Type `install`, then press `enter` with `Package Control: Install Package` selected.

|

||||

4. Type `wakatime`, then press `enter` with the `WakaTime` plugin selected.

|

||||

|

||||

c) Type `wakatime`, then press `enter` with the `WakaTime` plugin selected.

|

||||

5. Enter your [api key](https://wakatime.com/settings#apikey), then press `enter`.

|

||||

|

||||

3. Enter your [api key](https://wakatime.com/settings#apikey), then press `enter`.

|

||||

6. Use Sublime and your coding activity will be displayed on your [WakaTime dashboard](https://wakatime.com).

|

||||

|

||||

4. Use Sublime and your time will be tracked for you automatically.

|

||||

|

||||

5. Visit https://wakatime.com/dashboard to see your logged time.

|

||||

|

||||

Screen Shots

|

||||

------------

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

Unresponsive Plugin Warning

|

||||

---------------------------

|

||||

|

||||

In Sublime Text 2, if you get a warning message:

|

||||

|

||||

A plugin (WakaTime) may be making Sublime Text unresponsive by taking too long (0.017332s) in its on_modified callback.

|

||||

|

||||

To fix this, go to `Preferences → Settings - User` then add the following setting:

|

||||

|

||||

`"detect_slow_plugins": false`

|

||||

|

||||

|

||||

Troubleshooting

|

||||

---------------

|

||||

|

||||

First, turn on debug mode in your `WakaTime.sublime-settings` file.

|

||||

|

||||

|

||||

|

||||

|

||||

Add the line: `"debug": true`

|

||||

|

||||

Then, open your Sublime Console with `View -> Show Console` to see the plugin executing the wakatime cli process when sending a heartbeat. Also, tail your `$HOME/.wakatime.log` file to debug wakatime cli problems.

|

||||

Then, open your Sublime Console with `View → Show Console` ( CTRL + \` ) to see the plugin executing the wakatime cli process when sending a heartbeat.

|

||||

Also, tail your `$HOME/.wakatime.log` file to debug wakatime cli problems.

|

||||

|

||||

For more general troubleshooting information, see [wakatime/wakatime#troubleshooting](https://github.com/wakatime/wakatime#troubleshooting).

|

||||

The [How to Debug Plugins][how to debug] guide shows how to check when coding activity was last received from your editor using the [User Agents API][user agents api].

|

||||

For more general troubleshooting info, see the [wakatime-cli Troubleshooting Section][wakatime-cli-help].

|

||||

|

||||

|

||||

[wakatime-cli-help]: https://github.com/wakatime/wakatime#troubleshooting

|

||||

[how to debug]: https://wakatime.com/faq#debug-plugins

|

||||

[user agents api]: https://wakatime.com/developers#user_agents

|

||||

|

||||
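The Troubleshooting text above suggests tailing `$HOME/.wakatime.log`. Where a `tail` command is not available (for example on Windows), a minimal Python sketch like this one prints the last lines of that log. The `tail` helper and its default of 20 lines are illustrative choices; only the log location comes from the README.

```python
import os
from collections import deque


def tail(path, n=20):
    """Return the last `n` lines of a text file, similar to `tail -n`."""
    # A deque with maxlen keeps only the most recent n lines in memory.
    with open(path, encoding="utf-8", errors="replace") as fh:
        return list(deque(fh, maxlen=n))


if __name__ == "__main__":
    log_path = os.path.join(os.path.expanduser("~"), ".wakatime.log")
    if os.path.exists(log_path):
        for line in tail(log_path):
            print(line, end="")
    else:
        print("No log file at", log_path)
```

Reading with `errors="replace"` avoids a crash if the log contains bytes that are not valid UTF-8.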

WakaTime.py (529 changes)

@@ -7,22 +7,26 @@ Website: https://wakatime.com/

==========================================================="""

__version__ = '4.0.20'
__version__ = '7.0.22'

import sublime
import sublime_plugin

import contextlib
import json
import os
import platform
import re
import sys
import time
import threading
import traceback
import urllib
import webbrowser
from datetime import datetime
from subprocess import Popen
from subprocess import Popen, STDOUT, PIPE
from zipfile import ZipFile
try:
import _winreg as winreg # py2
except ImportError:

@@ -30,6 +34,61 @@ except ImportError:

import winreg # py3
except ImportError:
winreg = None
try:
import Queue as queue # py2
except ImportError:
import queue # py3

is_py2 = (sys.version_info[0] == 2)
is_py3 = (sys.version_info[0] == 3)

if is_py2:
def u(text):
if text is None:
return None
if isinstance(text, unicode):
return text
try:
return text.decode('utf-8')
except:
try:
return text.decode(sys.getdefaultencoding())
except:
try:
return unicode(text)
except:
try:
return text.decode('utf-8', 'replace')
except:
try:
return unicode(str(text))
except:
return unicode('')

elif is_py3:
def u(text):
if text is None:
return None
if isinstance(text, bytes):
try:
return text.decode('utf-8')
except:
try:
return text.decode(sys.getdefaultencoding())
except:
pass
try:
return str(text)
except:
return text.decode('utf-8', 'replace')

else:
raise Exception('Unsupported Python version: {0}.{1}.{2}'.format(
sys.version_info[0],
sys.version_info[1],
sys.version_info[2],
))

# globals
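The hunk above adds a `u()` helper that coerces values to text by trying progressively more lenient decodings. Below is a minimal Python 3-only sketch of the same fallback idea, not the plugin's code. It catches `UnicodeDecodeError` instead of using bare `except:` clauses, and for undecodable bytes it falls back to `'replace'` decoding rather than `str(text)`, since in Python 3 `str()` on a bytes object typically returns its `b'...'` representation instead of raising.

```python
import sys


def u(text):
    """Coerce `text` to str, trying progressively more lenient decodings."""
    if text is None:
        return None
    if isinstance(text, bytes):
        for encoding in ("utf-8", sys.getdefaultencoding()):
            try:
                return text.decode(encoding)
            except UnicodeDecodeError:
                continue
        # Last resort: substitute U+FFFD for bytes that cannot be decoded.
        return text.decode("utf-8", "replace")
    return str(text)


if __name__ == "__main__":
    print(u(b"caf\xc3\xa9"))
    print(u(b"\xff\xfe"))
```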

@@ -44,40 +103,85 @@ LAST_HEARTBEAT = {

'file': None,
'is_write': False,
}
LOCK = threading.RLock()
PYTHON_LOCATION = None
HEARTBEATS = queue.Queue()

# Log Levels
DEBUG = 'DEBUG'
INFO = 'INFO'
WARNING = 'WARNING'
ERROR = 'ERROR'

# add wakatime package to path
sys.path.insert(0, os.path.join(PLUGIN_DIR, 'packages'))
try:
from wakatime.base import parseConfigFile
from wakatime.main import parseConfigFile
except ImportError:
pass

def createConfigFile():
"""Creates the .wakatime.cfg INI file in $HOME directory, if it does
not already exist.
def set_timeout(callback, seconds):
"""Runs the callback after the given seconds delay.

If this is Sublime Text 3, runs the callback on an alternate thread. If this
is Sublime Text 2, runs the callback in the main thread.
"""
configFile = os.path.join(os.path.expanduser('~'), '.wakatime.cfg')

milliseconds = int(seconds * 1000)
try:
with open(configFile) as fh:
pass
except IOError:
sublime.set_timeout_async(callback, milliseconds)
except AttributeError:
sublime.set_timeout(callback, milliseconds)

def log(lvl, message, *args, **kwargs):
try:
if lvl == DEBUG and not SETTINGS.get('debug'):
return
msg = message
if len(args) > 0:
msg = message.format(*args)
elif len(kwargs) > 0:
msg = message.format(**kwargs)
try:
with open(configFile, 'w') as fh:
fh.write("[settings]\n")
fh.write("debug = false\n")
fh.write("hidefilenames = false\n")
except IOError:
pass
print('[WakaTime] [{lvl}] {msg}'.format(lvl=lvl, msg=msg))
except UnicodeDecodeError:
print(u('[WakaTime] [{lvl}] {msg}').format(lvl=lvl, msg=u(msg)))
except RuntimeError:
set_timeout(lambda: log(lvl, message, *args, **kwargs), 0)

def resources_folder():
if platform.system() == 'Windows':
return os.path.join(os.getenv('APPDATA'), 'WakaTime')
else:
return os.path.join(os.path.expanduser('~'), '.wakatime')

def update_status_bar(status):
"""Updates the status bar."""

try:
if SETTINGS.get('status_bar_message'):
msg = datetime.now().strftime(SETTINGS.get('status_bar_message_fmt'))
if '{status}' in msg:
msg = msg.format(status=status)

active_window = sublime.active_window()
if active_window:
for view in active_window.views():
view.set_status('wakatime', msg)
except RuntimeError:
set_timeout(lambda: update_status_bar(status), 0)

def prompt_api_key():
global SETTINGS

createConfigFile()
if SETTINGS.get('api_key'):
return True

default_key = ''
try:
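The new `log()` function above drops DEBUG messages unless the `debug` setting is enabled, and fills in the message with `str.format` using either positional or keyword arguments. The standalone sketch below mirrors that logic. Passing settings as a plain dict instead of Sublime's `SETTINGS` object, and returning the line instead of printing it, are assumptions made so it can run outside Sublime Text; `format_log` is a hypothetical name.

```python
DEBUG, INFO, WARNING, ERROR = "DEBUG", "INFO", "WARNING", "ERROR"


def format_log(settings, lvl, message, *args, **kwargs):
    """Return the console line for a log call, or None if it is filtered out."""
    # DEBUG output is suppressed unless the user set "debug": true.
    if lvl == DEBUG and not settings.get("debug"):
        return None
    msg = message
    if args:
        msg = message.format(*args)
    elif kwargs:
        msg = message.format(**kwargs)
    return "[WakaTime] [{lvl}] {msg}".format(lvl=lvl, msg=msg)


if __name__ == "__main__":
    print(format_log({"debug": True}, DEBUG, "Found Python at: {0}", "/usr/bin/python"))
    print(format_log({}, DEBUG, "this line is filtered out"))
```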

@@ -88,29 +192,27 @@ def prompt_api_key():

except:
pass

if SETTINGS.get('api_key'):
return True
else:
window = sublime.active_window()
if window:
def got_key(text):
if text:
SETTINGS.set('api_key', str(text))
sublime.save_settings(SETTINGS_FILE)
window = sublime.active_window()
if window:
window.show_input_panel('[WakaTime] Enter your wakatime.com api key:', default_key, got_key, None, None)
return True
else:
print('[WakaTime] Error: Could not prompt for api key because no window found.')
return False
window.show_input_panel('[WakaTime] Enter your wakatime.com api key:', default_key, got_key, None, None)
return True
else:
log(ERROR, 'Could not prompt for api key because no window found.')
return False

def python_binary():
global PYTHON_LOCATION
if PYTHON_LOCATION is not None:
return PYTHON_LOCATION

# look for python in PATH and common install locations
paths = [
os.path.join(resources_folder(), 'python'),
None,
'/',
'/usr/local/bin/',
'/usr/bin/',

@@ -118,22 +220,28 @@ def python_binary():

for path in paths:
path = find_python_in_folder(path)
if path is not None:
PYTHON_LOCATION = path
set_python_binary_location(path)
return path

# look for python in windows registry
path = find_python_from_registry(r'SOFTWARE\Python\PythonCore')
if path is not None:
PYTHON_LOCATION = path
set_python_binary_location(path)
return path
path = find_python_from_registry(r'SOFTWARE\Wow6432Node\Python\PythonCore')
if path is not None:
PYTHON_LOCATION = path
set_python_binary_location(path)
return path

return None

def set_python_binary_location(path):
global PYTHON_LOCATION
PYTHON_LOCATION = path
log(DEBUG, 'Found Python at: {0}'.format(path))

def find_python_from_registry(location, reg=None):
if platform.system() != 'Windows' or winreg is None:
return None
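`python_binary()` above tries a list of candidate folders in order (with `None` meaning a bare `python` resolved through `PATH`), stops at the first one that works, and caches the hit in `PYTHON_LOCATION`. The sketch below isolates that search-and-cache pattern. `find_python`, `_cached_location`, and the injected `probe` callable are hypothetical names introduced so the search order can be exercised without launching interpreters, and the Windows registry fallback is omitted.

```python
import os

_cached_location = None  # plays the role of PYTHON_LOCATION


def find_python(candidates, probe):
    """Return the first interpreter path accepted by `probe`, caching it.

    `candidates` are folders; None means "bare name, resolved via PATH".
    `probe(path)` should return True if `path` runs and reports a version.
    """
    global _cached_location
    if _cached_location is not None:
        return _cached_location
    for folder in candidates:
        path = "python" if folder is None else os.path.join(folder, "python")
        if probe(path):
            _cached_location = path
            return path
    return None
```

Caching matters because each probe spawns a process; once a working interpreter is found, later heartbeats reuse it without searching again.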

@@ -168,36 +276,60 @@ def find_python_from_registry(location, reg=None):

if path is not None:
path = find_python_in_folder(path)
if path is not None:
log(DEBUG, 'Found python from {reg}\\{key}\\{version}\\{sub_key}.'.format(
reg=reg,
key=location,
version=version,
sub_key=sub_key,
))
return path
except WindowsError:
print('[WakaTime] Warning: Could not read registry value "{reg}\\{key}\\{version}\\{sub_key}".'.format(
reg='HKEY_CURRENT_USER',
log(DEBUG, 'Could not read registry value "{reg}\\{key}\\{version}\\{sub_key}".'.format(
reg=reg,
key=location,
version=version,
sub_key=sub_key,
))
except WindowsError:
print('[WakaTime] Warning: Could not read registry value "{reg}\\{key}".'.format(
reg='HKEY_CURRENT_USER',
log(DEBUG, 'Could not read registry value "{reg}\\{key}".'.format(
reg=reg,
key=location,
))
except:
log(ERROR, 'Could not read registry value "{reg}\\{key}":\n{exc}'.format(
reg=reg,
key=location,
exc=traceback.format_exc(),
))

return val

def find_python_in_folder(folder):
path = os.path.realpath(os.path.join(folder, 'pythonw'))
def find_python_in_folder(folder, headless=True):
pattern = re.compile(r'\d+\.\d+')

path = 'python'
if folder is not None:
path = os.path.realpath(os.path.join(folder, 'python'))
if headless:
path = u(path) + u('w')
log(DEBUG, u('Looking for Python at: {0}').format(u(path)))
try:
Popen([path, '--version'])
return path
process = Popen([path, '--version'], stdout=PIPE, stderr=STDOUT)
output, err = process.communicate()
output = u(output).strip()
retcode = process.poll()
log(DEBUG, u('Python Version Output: {0}').format(output))
if not retcode and pattern.search(output):
return path
except:
pass
path = os.path.realpath(os.path.join(folder, 'python'))
try:
Popen([path, '--version'])
return path
except:
pass
log(DEBUG, u(sys.exc_info()[1]))

if headless:
path = find_python_in_folder(folder, headless=False)
if path is not None:
return path

return None
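The rewritten `find_python_in_folder()` above no longer accepts a path just because `Popen` succeeds. It captures the output of `--version` (with stderr merged into stdout, since Python 2 prints its version to stderr), checks the exit code, and requires a `\d+\.\d+` match. A minimal standalone sketch of that probe follows. `probe_python` is a hypothetical name, it catches only `OSError` (a missing or non-executable file) instead of everything, and the `pythonw`-then-`python` fallback is left out.

```python
import re
import subprocess
import sys

VERSION_PATTERN = re.compile(r"\d+\.\d+")


def probe_python(path):
    """Return `path` if it runs and prints a version number, else None."""
    try:
        # Python 2 prints its version to stderr, so merge it into stdout.
        proc = subprocess.Popen([path, "--version"],
                                stdout=subprocess.PIPE,
                                stderr=subprocess.STDOUT)
        output, _ = proc.communicate()
    except OSError:
        # The file does not exist or cannot be executed.
        return None
    text = output.decode("utf-8", "replace").strip()
    if proc.returncode == 0 and VERSION_PATTERN.search(text):
        return path
    return None


if __name__ == "__main__":
    print(probe_python(sys.executable))
    print(probe_python("/definitely/not/a/python"))
```

Requiring both a zero exit code and a version string is what lets the 6.0.1 behavior ("use embedded python if system python is broken, or doesn't output a version number") work.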

@@ -213,10 +345,10 @@ def obfuscate_apikey(command_list):

return cmd

def enough_time_passed(now, last_heartbeat, is_write):
if now - last_heartbeat['time'] > HEARTBEAT_FREQUENCY * 60:
def enough_time_passed(now, is_write):
if now - LAST_HEARTBEAT['time'] > HEARTBEAT_FREQUENCY * 60:
return True
if is_write and now - last_heartbeat['time'] > 2:
if is_write and now - LAST_HEARTBEAT['time'] > 2:
return True
return False
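`enough_time_passed()` above decides whether a repeated heartbeat for the same file should be sent: after `HEARTBEAT_FREQUENCY` minutes in general, or after 2 seconds when the file was written. The diff changes it to read the global `LAST_HEARTBEAT`; the sketch below instead passes the last timestamp explicitly so it can be tested in isolation. The value `HEARTBEAT_FREQUENCY = 2` is an assumption, because the constant's definition is not shown in this diff.

```python
HEARTBEAT_FREQUENCY = 2  # minutes; assumed value, not shown in this diff


def enough_time_passed(now, last_time, is_write):
    """Decide whether a heartbeat for the same file should be sent again."""
    # Re-send for the same file once HEARTBEAT_FREQUENCY minutes have passed...
    if now - last_time > HEARTBEAT_FREQUENCY * 60:
        return True
    # ...or sooner on save, with a 2-second guard against rapid repeat writes.
    if is_write and now - last_time > 2:
        return True
    return False
```

In `handle_activity()` this check only matters when the file is unchanged: switching to a different file (`entity != last_file`) queues a heartbeat immediately.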

@ -260,142 +392,241 @@ def is_view_active(view):

|

||||

return False

|

||||

|

||||

|

||||

def handle_heartbeat(view, is_write=False):

|

||||

def handle_activity(view, is_write=False):

|

||||

window = view.window()

|

||||

if window is not None:

|

||||

target_file = view.file_name()

|

||||

project = window.project_data() if hasattr(window, 'project_data') else None

|

||||

folders = window.folders()

|

||||

thread = SendHeartbeatThread(target_file, view, is_write=is_write, project=project, folders=folders)

|

||||

thread.start()

|

||||

entity = view.file_name()

|

||||

if entity:

|

||||

timestamp = time.time()

|

||||

last_file = LAST_HEARTBEAT['file']

|

||||

if entity != last_file or enough_time_passed(timestamp, is_write):

|

||||

project = window.project_data() if hasattr(window, 'project_data') else None

|

||||

folders = window.folders()

|

||||

append_heartbeat(entity, timestamp, is_write, view, project, folders)

|

||||

|

||||

|

||||

class SendHeartbeatThread(threading.Thread):

|

||||

def append_heartbeat(entity, timestamp, is_write, view, project, folders):

|

||||

global LAST_HEARTBEAT

|

||||

|

||||

# add this heartbeat to queue

|

||||

heartbeat = {

|

||||

'entity': entity,

|

||||

'timestamp': timestamp,

|

||||

'is_write': is_write,

|

||||

'cursorpos': view.sel()[0].begin() if view.sel() else None,

|

||||

'project': project,

|

||||

'folders': folders,

|

||||

}

|

||||

HEARTBEATS.put_nowait(heartbeat)

|

||||

|

||||

# make this heartbeat the LAST_HEARTBEAT

|

||||

LAST_HEARTBEAT = {

|

||||

'file': entity,

|

||||

'time': timestamp,

|

||||

'is_write': is_write,

|

||||

}

|

||||

|

||||

# process the queue of heartbeats in the future

|

||||

seconds = 4

|

||||

set_timeout(process_queue, seconds)

|

||||

|

||||

|

||||

def process_queue():

|

||||

try:

|

||||

heartbeat = HEARTBEATS.get_nowait()

|

||||

except queue.Empty:

|

||||

return

|

||||

|

||||

has_extra_heartbeats = False

|

||||

extra_heartbeats = []

|

||||

try:

|

||||

while True:

|

||||

extra_heartbeats.append(HEARTBEATS.get_nowait())

|

||||

has_extra_heartbeats = True

|

||||

except queue.Empty:

|

||||

pass

|

||||

|

thread = SendHeartbeatsThread(heartbeat)
if has_extra_heartbeats:
thread.add_extra_heartbeats(extra_heartbeats)
thread.start()


class SendHeartbeatsThread(threading.Thread):
"""Non-blocking thread for sending heartbeats to api.
"""

def __init__(self, target_file, view, is_write=False, project=None, folders=None, force=False):
def __init__(self, heartbeat):
threading.Thread.__init__(self)
self.lock = LOCK
self.target_file = target_file
self.is_write = is_write
self.project = project
self.folders = folders
self.force = force

self.debug = SETTINGS.get('debug')
self.api_key = SETTINGS.get('api_key', '')
self.ignore = SETTINGS.get('ignore', [])
self.last_heartbeat = LAST_HEARTBEAT.copy()
self.cursorpos = view.sel()[0].begin() if view.sel() else None
self.view = view
self.hidefilenames = SETTINGS.get('hidefilenames')
self.proxy = SETTINGS.get('proxy')

self.heartbeat = heartbeat
self.has_extra_heartbeats = False

def add_extra_heartbeats(self, extra_heartbeats):
self.has_extra_heartbeats = True
self.extra_heartbeats = extra_heartbeats

def run(self):
with self.lock:
if self.target_file:
self.timestamp = time.time()
if self.force or self.target_file != self.last_heartbeat['file'] or enough_time_passed(self.timestamp, self.last_heartbeat, self.is_write):
self.send_heartbeat()
"""Running in background thread."""

def send_heartbeat(self):
if not self.api_key:
print('[WakaTime] Error: missing api key.')
return
ua = 'sublime/%d sublime-wakatime/%s' % (ST_VERSION, __version__)
cmd = [
API_CLIENT,
'--file', self.target_file,
'--time', str('%f' % self.timestamp),
'--plugin', ua,
'--key', str(bytes.decode(self.api_key.encode('utf8'))),
]
if self.is_write:
cmd.append('--write')
if self.project and self.project.get('name'):
cmd.extend(['--alternate-project', self.project.get('name')])
elif self.folders:
project_name = find_project_from_folders(self.folders, self.target_file)
if project_name:
cmd.extend(['--alternate-project', project_name])
if self.cursorpos is not None:
cmd.extend(['--cursorpos', '{0}'.format(self.cursorpos)])
for pattern in self.ignore:
cmd.extend(['--ignore', pattern])
if self.debug:
cmd.append('--verbose')
if python_binary():
cmd.insert(0, python_binary())
if self.debug:
print('[WakaTime] %s' % ' '.join(obfuscate_apikey(cmd)))
if platform.system() == 'Windows':
Popen(cmd, shell=False)
else:
with open(os.path.join(os.path.expanduser('~'), '.wakatime.log'), 'a') as stderr:
Popen(cmd, stderr=stderr)
self.sent()
else:
print('[WakaTime] Error: Unable to find python binary.')
self.send_heartbeats()
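The `run()` condition above sends a heartbeat when it is forced, when the file differs from the last heartbeat, or when `enough_time_passed(...)` returns true. `enough_time_passed` is not part of this excerpt, so the sketch below is a hypothetical stand-in: it assumes a fixed two-minute interval for ordinary activity and a one-second debounce for saves. Both constants and the `should_send` name are illustrative, not values or names taken from the plugin.

```python
# Hypothetical constants; the plugin's real values are not shown in this diff.
HEARTBEAT_INTERVAL_SECONDS = 120.0
WRITE_DEBOUNCE_SECONDS = 1.0


def enough_time_passed(now, last_heartbeat, is_write):
    """Assumed policy: resend after the interval, or sooner for file saves."""
    elapsed = now - last_heartbeat['time']
    if elapsed > HEARTBEAT_INTERVAL_SECONDS:
        return True
    # Saves are worth recording sooner than plain cursor movement.
    return bool(is_write) and elapsed > WRITE_DEBOUNCE_SECONDS


def should_send(target_file, now, last_heartbeat, is_write=False, force=False):
    """Same shape as the condition in run(): forced, new file, or enough time passed."""
    return (force
            or target_file != last_heartbeat['file']
            or enough_time_passed(now, last_heartbeat, is_write))
```

With a last heartbeat on `/a.py` at t=1000, cursor activity in the same file at t=1030 is skipped, while switching files, saving, or waiting past the interval triggers a send.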

def sent(self):
sublime.set_timeout(self.set_status_bar, 0)
sublime.set_timeout(self.set_last_heartbeat, 0)
def build_heartbeat(self, entity=None, timestamp=None, is_write=None,
cursorpos=None, project=None, folders=None):
"""Returns a dict for passing to wakatime-cli as arguments."""

def set_status_bar(self):
if SETTINGS.get('status_bar_message'):
self.view.set_status('wakatime', datetime.now().strftime(SETTINGS.get('status_bar_message_fmt')))

def set_last_heartbeat(self):
global LAST_HEARTBEAT
LAST_HEARTBEAT = {
'file': self.target_file,
'time': self.timestamp,
'is_write': self.is_write,
heartbeat = {
'entity': entity,
'timestamp': timestamp,
'is_write': is_write,
}

if project and project.get('name'):
heartbeat['alternate_project'] = project.get('name')
elif folders:
project_name = find_project_from_folders(folders, entity)
if project_name:
heartbeat['alternate_project'] = project_name

class InstallPython(threading.Thread):
"""Non-blocking thread for installing Python on Windows machines.
if cursorpos is not None:
heartbeat['cursorpos'] = '{0}'.format(cursorpos)

return heartbeat
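`build_heartbeat()` prefers an explicit project name and only falls back to `find_project_from_folders(folders, entity)` when none is set. That helper is not shown in this diff; the sketch assumes it returns the base name of the first open folder that contains the entity, which is a guess about its behavior rather than the plugin's code. The standalone `build_heartbeat` function mirrors the method's fields.

```python
import os


def find_project_from_folders(folders, entity):
    """Hypothetical helper: name the project after the open folder containing entity."""
    entity_path = os.path.abspath(entity)
    for folder in folders:
        folder_path = os.path.abspath(folder)
        try:
            # commonpath keeps /src/app2 from counting as inside /src/app.
            inside = os.path.commonpath([folder_path, entity_path]) == folder_path
        except ValueError:
            # Windows raises this for paths on different drives.
            continue
        if inside:
            return os.path.basename(folder_path)
    return None


def build_heartbeat(entity=None, timestamp=None, is_write=None,
                    cursorpos=None, project=None, folders=None):
    """Standalone version of the method above, returning a plain dict."""
    heartbeat = {'entity': entity, 'timestamp': timestamp, 'is_write': is_write}
    if project and project.get('name'):
        # An explicit project name always wins over folder-based discovery.
        heartbeat['alternate_project'] = project.get('name')
    elif folders:
        project_name = find_project_from_folders(folders, entity)
        if project_name:
            heartbeat['alternate_project'] = project_name
    if cursorpos is not None:
        heartbeat['cursorpos'] = '{0}'.format(cursorpos)
    return heartbeat
```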

def send_heartbeats(self):
if python_binary():
heartbeat = self.build_heartbeat(**self.heartbeat)
ua = 'sublime/%d sublime-wakatime/%s' % (ST_VERSION, __version__)
cmd = [
python_binary(),
API_CLIENT,
'--entity', heartbeat['entity'],
'--time', str('%f' % heartbeat['timestamp']),
'--plugin', ua,
]
if self.api_key:
cmd.extend(['--key', str(bytes.decode(self.api_key.encode('utf8')))])
if heartbeat['is_write']:
cmd.append('--write')
if heartbeat.get('alternate_project'):
cmd.extend(['--alternate-project', heartbeat['alternate_project']])
if heartbeat.get('cursorpos') is not None:
cmd.extend(['--cursorpos', heartbeat['cursorpos']])
for pattern in self.ignore:
cmd.extend(['--exclude', pattern])
if self.debug:
cmd.append('--verbose')
if self.hidefilenames:
cmd.append('--hidefilenames')
if self.proxy:
cmd.extend(['--proxy', self.proxy])
if self.has_extra_heartbeats:
cmd.append('--extra-heartbeats')
stdin = PIPE
extra_heartbeats = [self.build_heartbeat(**x) for x in self.extra_heartbeats]
extra_heartbeats = json.dumps(extra_heartbeats)
else:
extra_heartbeats = None
stdin = None

log(DEBUG, ' '.join(obfuscate_apikey(cmd)))
try:
process = Popen(cmd, stdin=stdin, stdout=PIPE, stderr=STDOUT)
inp = None
if self.has_extra_heartbeats:
inp = "{0}\n".format(extra_heartbeats)
inp = inp.encode('utf-8')
output, err = process.communicate(input=inp)
output = u(output)
retcode = process.poll()
if (not retcode or retcode == 102) and not output:
self.sent()
else:
update_status_bar('Error')
if retcode:
log(DEBUG if retcode == 102 else ERROR, 'wakatime-core exited with status: {0}'.format(retcode))
if output:
log(ERROR, u('wakatime-core output: {0}').format(output))
except:
log(ERROR, u(sys.exc_info()[1]))
update_status_bar('Error')

else:
log(ERROR, 'Unable to find python binary.')
update_status_bar('Error')
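`send_heartbeats()` turns one heartbeat dict plus a few settings into a wakatime-cli argument list. The sketch below isolates that step as a pure function so it can be checked without Sublime Text. `build_command` and its parameter names are hypothetical; the flags are the ones used in the listing above, including passing the settings file's `ignore` patterns as `--exclude`.

```python
def build_command(python, api_client, heartbeat, plugin, api_key=None,
                  ignore=(), debug=False, hidefilenames=False, proxy=None,
                  has_extra_heartbeats=False):
    """Assemble the wakatime-cli argv in the same order as send_heartbeats()."""
    cmd = [
        python,
        api_client,
        '--entity', heartbeat['entity'],
        '--time', '%f' % heartbeat['timestamp'],
        '--plugin', plugin,
    ]
    if api_key:
        cmd.extend(['--key', api_key])
    if heartbeat.get('is_write'):
        cmd.append('--write')
    if heartbeat.get('alternate_project'):
        cmd.extend(['--alternate-project', heartbeat['alternate_project']])
    if heartbeat.get('cursorpos') is not None:
        cmd.extend(['--cursorpos', heartbeat['cursorpos']])
    for pattern in ignore:
        # Settings-file "ignore" patterns are forwarded as --exclude.
        cmd.extend(['--exclude', pattern])
    if debug:
        cmd.append('--verbose')
    if hidefilenames:
        cmd.append('--hidefilenames')
    if proxy:
        cmd.extend(['--proxy', proxy])
    if has_extra_heartbeats:
        cmd.append('--extra-heartbeats')
    return cmd
```

Keeping argument assembly separate from `Popen` makes the ordering and optional flags easy to unit-test.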

def sent(self):
update_status_bar('OK')
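When extra heartbeats are queued, `send_heartbeats()` adds `--extra-heartbeats`, serializes them as one JSON array, writes that line to the child's stdin through `communicate()`, and counts the run as successful only when the exit status is 0 or 102 and nothing was printed. The sketch reproduces that pattern with the standard library. A throwaway Python child stands in for wakatime-cli purely so the example runs anywhere; `run_with_extra_heartbeats` and `CHILD` are hypothetical names.

```python
import json
import subprocess
import sys

# Stand-in child process: reads one JSON line from stdin and prints its length.
CHILD = 'import json, sys; print(len(json.loads(sys.stdin.readline())))'


def run_with_extra_heartbeats(cmd, extra_heartbeats):
    """Pipe extra heartbeats as JSON on stdin; return (ok, retcode, output)."""
    stdin = None
    inp = None
    if extra_heartbeats:
        stdin = subprocess.PIPE
        inp = '{0}\n'.format(json.dumps(extra_heartbeats)).encode('utf-8')
    process = subprocess.Popen(cmd, stdin=stdin, stdout=subprocess.PIPE,
                               stderr=subprocess.STDOUT)
    output, _ = process.communicate(input=inp)
    retcode = process.poll()
    output = output.decode('utf-8', 'replace')
    # Same rule as the plugin: status 0 or 102, and no output at all.
    ok = (not retcode or retcode == 102) and not output
    return ok, retcode, output
```

Note that any output, even from a zero exit status, is treated as an error under this rule.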

def download_python():
thread = DownloadPython()
thread.start()


class DownloadPython(threading.Thread):
"""Non-blocking thread for extracting embeddable Python on Windows machines.
"""

def run(self):
print('[WakaTime] Downloading and installing python...')
url = 'https://www.python.org/ftp/python/3.4.3/python-3.4.3.msi'
if platform.architecture()[0] == '64bit':
url = 'https://www.python.org/ftp/python/3.4.3/python-3.4.3.amd64.msi'
python_msi = os.path.join(os.path.expanduser('~'), 'python.msi')
log(INFO, 'Downloading embeddable Python...')

ver = '3.5.2'
arch = 'amd64' if platform.architecture()[0] == '64bit' else 'win32'
url = 'https://www.python.org/ftp/python/{ver}/python-{ver}-embed-{arch}.zip'.format(
ver=ver,
arch=arch,
)

if not os.path.exists(resources_folder()):
os.makedirs(resources_folder())

zip_file = os.path.join(resources_folder(), 'python.zip')
try:
urllib.urlretrieve(url, python_msi)
urllib.urlretrieve(url, zip_file)
except AttributeError:
urllib.request.urlretrieve(url, python_msi)
args = [
'msiexec',
'/i',
python_msi,
'/norestart',
'/qb!',
]
Popen(args)
urllib.request.urlretrieve(url, zip_file)

log(INFO, 'Extracting Python...')
with contextlib.closing(ZipFile(zip_file)) as zf:
path = os.path.join(resources_folder(), 'python')
zf.extractall(path)

try:
os.remove(zip_file)
except:
pass

log(INFO, 'Finished extracting Python.')
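`DownloadPython.run()` builds the embeddable-Python download URL from a version string and the interpreter's architecture, then unpacks the archive and deletes it. The sketch separates URL construction from extraction so both can be exercised offline; `embed_url` and `extract_zip` are hypothetical helper names, and the test builds a small local zip instead of downloading anything.

```python
import os
import platform
import zipfile


def embed_url(ver, arch=None):
    """Build the python.org embeddable-zip URL in the format used above."""
    if arch is None:
        arch = 'amd64' if platform.architecture()[0] == '64bit' else 'win32'
    return 'https://www.python.org/ftp/python/{ver}/python-{ver}-embed-{arch}.zip'.format(
        ver=ver, arch=arch)


def extract_zip(zip_file, dest):
    """Extract zip_file into dest, then remove the archive (best effort)."""
    # ZipFile is a context manager on Python 3; the plugin wraps it in
    # contextlib.closing, which also works on older interpreters.
    with zipfile.ZipFile(zip_file) as zf:
        zf.extractall(dest)
    try:
        os.remove(zip_file)
    except OSError:
        pass
```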

def plugin_loaded():
global SETTINGS
print('[WakaTime] Initializing WakaTime plugin v%s' % __version__)
SETTINGS = sublime.load_settings(SETTINGS_FILE)

log(INFO, 'Initializing WakaTime plugin v%s' % __version__)
update_status_bar('Initializing')

if not python_binary():
print('[WakaTime] Warning: Python binary not found.')
log(WARNING, 'Python binary not found.')
if platform.system() == 'Windows':
thread = InstallPython()
thread.start()
set_timeout(download_python, 0)
else:
sublime.error_message("Unable to find Python binary!\nWakaTime needs Python to work correctly.\n\nGo to https://www.python.org/downloads")
return

SETTINGS = sublime.load_settings(SETTINGS_FILE)
after_loaded()


def after_loaded():
if not prompt_api_key():
sublime.set_timeout(after_loaded, 500)
set_timeout(after_loaded, 0.5)


# need to call plugin_loaded because only ST3 will auto-call it

@@ -406,15 +637,15 @@ if ST_VERSION < 3000:
class WakatimeListener(sublime_plugin.EventListener):

def on_post_save(self, view):
handle_heartbeat(view, is_write=True)
handle_activity(view, is_write=True)

def on_selection_modified(self, view):
if is_view_active(view):
handle_heartbeat(view)
handle_activity(view)

def on_modified(self, view):
if is_view_active(view):
handle_heartbeat(view)
handle_activity(view)


class WakatimeDashboardCommand(sublime_plugin.ApplicationCommand):

@@ -6,18 +6,24 @@
// Your api key from https://wakatime.com/#apikey
// Set this in your User specific WakaTime.sublime-settings file.
"api_key": "",

// Ignore files; Files (including absolute paths) that match one of these
// POSIX regular expressions will not be logged.
"ignore": ["^/tmp/", "^/etc/", "^/var/", "COMMIT_EDITMSG$", "PULLREQ_EDITMSG$", "MERGE_MSG$", "TAG_EDITMSG$"],


// Debug mode. Set to true for verbose logging. Defaults to false.
"debug": false,


// Proxy with format https://user:pass@host:port or socks5://user:pass@host:port or domain\\user:pass.
"proxy": "",

// Ignore files; Files (including absolute paths) that match one of these
// POSIX regular expressions will not be logged.
"ignore": ["^/tmp/", "^/etc/", "^/var/(?!www/).*", "COMMIT_EDITMSG$", "PULLREQ_EDITMSG$", "MERGE_MSG$", "TAG_EDITMSG$"],

// Status bar message. Set to false to hide status bar message.
// Defaults to true.
"status_bar_message": true,


// Status bar message format.
"status_bar_message_fmt": "WakaTime active %I:%M %p"
"status_bar_message_fmt": "WakaTime {status} %I:%M %p",

// Obfuscate file paths when sending to API. Your dashboard will no longer display coding activity per file.
"hidefilenames": false
}
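The new `ignore` default `^/var/(?!www/).*` uses a negative lookahead so files under `/var/www/` stay tracked while the rest of `/var/` is skipped; a lookahead is Python `re` syntax rather than strict POSIX. Per the `--include` help text, include patterns win over exclude patterns. The sketch assumes each pattern is tried with `re.search` and case-insensitively; that matching mode, and the `should_log` name, are assumptions rather than details shown in this diff.

```python
import re

# New default "ignore" list from the settings hunk above.
IGNORE = ["^/tmp/", "^/etc/", "^/var/(?!www/).*", "COMMIT_EDITMSG$",
          "PULLREQ_EDITMSG$", "MERGE_MSG$", "TAG_EDITMSG$"]


def should_log(entity, exclude, include=()):
    """Return False only if entity matches an exclude and no include pattern."""
    for pattern in include:
        if re.search(pattern, entity, re.IGNORECASE):
            return True  # include overrides exclude
    for pattern in exclude:
        if re.search(pattern, entity, re.IGNORECASE):
            return False
    return True
```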

@@ -1,9 +1,9 @@
__title__ = 'wakatime'
__description__ = 'Common interface to the WakaTime api.'
__url__ = 'https://github.com/wakatime/wakatime'
__version_info__ = ('4', '1', '8')
__version_info__ = ('8', '0', '3')
__version__ = '.'.join(__version_info__)
__author__ = 'Alan Hamlett'
__author_email__ = 'alan@wakatime.com'
__license__ = 'BSD'
__copyright__ = 'Copyright 2014 Alan Hamlett'
__copyright__ = 'Copyright 2017 Alan Hamlett'

240
packages/wakatime/arguments.py
Normal file
@@ -0,0 +1,240 @@
# -*- coding: utf-8 -*-
"""
wakatime.arguments
~~~~~~~~~~~~~~~~~~

Command-line arguments.

:copyright: (c) 2016 Alan Hamlett.
:license: BSD, see LICENSE for more details.
"""


from __future__ import print_function


import os
import re
import time
import traceback
from .__about__ import __version__
from .configs import parseConfigFile
from .constants import AUTH_ERROR
from .packages import argparse


class FileAction(argparse.Action):

def __call__(self, parser, namespace, values, option_string=None):
try:
if os.path.isfile(values):
values = os.path.realpath(values)
except: # pragma: nocover
pass
setattr(namespace, self.dest, values)
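`FileAction` is a custom `argparse.Action` that replaces an existing file path with its `os.path.realpath` before storing it, so relative or symlinked paths are canonicalized. The sketch uses the standard-library `argparse` (the original imports a vendored copy from `.packages`) and keeps the same fall-through: values that are not files, such as URLs or app names, are stored unchanged. `make_parser` is a hypothetical demo helper.

```python
import argparse
import os


class FileAction(argparse.Action):
    """Store the real path when the value names an existing file."""

    def __call__(self, parser, namespace, values, option_string=None):
        try:
            if os.path.isfile(values):
                values = os.path.realpath(values)
        except (TypeError, ValueError):
            # Leave unusual values alone, as the original bare except does.
            pass
        setattr(namespace, self.dest, values)


def make_parser():
    parser = argparse.ArgumentParser(description='FileAction demo')
    parser.add_argument('--entity', dest='entity', action=FileAction)
    return parser
```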

def parseArguments():
"""Parse command line arguments and configs from ~/.wakatime.cfg.
Command line arguments take precedence over config file settings.
Returns instances of ArgumentParser and SafeConfigParser.
"""

# define supported command line arguments
parser = argparse.ArgumentParser(
description='Common interface for the WakaTime api.')
parser.add_argument('--entity', dest='entity', metavar='FILE',
action=FileAction,
help='absolute path to file for the heartbeat; can also be a '+
'url, domain, or app when --entity-type is not file')
parser.add_argument('--file', dest='file', action=FileAction,
help=argparse.SUPPRESS)
parser.add_argument('--key', dest='key',
help='your wakatime api key; uses api_key from '+
'~/.wakatime.cfg by default')
parser.add_argument('--write', dest='is_write',
action='store_true',
help='when set, tells api this heartbeat was triggered from '+
'writing to a file')
parser.add_argument('--plugin', dest='plugin',
help='optional text editor plugin name and version '+
'for User-Agent header')
parser.add_argument('--time', dest='timestamp', metavar='time',
type=float,
help='optional floating-point unix epoch timestamp; '+
'uses current time by default')
parser.add_argument('--lineno', dest='lineno',
help='optional line number; current line being edited')
parser.add_argument('--cursorpos', dest='cursorpos',
help='optional cursor position in the current file')
parser.add_argument('--entity-type', dest='entity_type',
help='entity type for this heartbeat. can be one of "file", '+
'"domain", or "app"; defaults to file.')
parser.add_argument('--proxy', dest='proxy',
help='optional proxy configuration. Supports HTTPS '+
'and SOCKS proxies. For example: '+
'https://user:pass@host:port or '+
'socks5://user:pass@host:port or ' +
'domain\\user:pass')
parser.add_argument('--no-ssl-verify', dest='nosslverify',
action='store_true',
help='disables SSL certificate verification for HTTPS '+
'requests. By default, SSL certificates are verified.')
parser.add_argument('--project', dest='project',
help='optional project name')
parser.add_argument('--alternate-project', dest='alternate_project',
help='optional alternate project name; auto-discovered project '+
'takes priority')
parser.add_argument('--alternate-language', dest='alternate_language',
help=argparse.SUPPRESS)
parser.add_argument('--language', dest='language',
help='optional language name; if valid, takes priority over '+
'auto-detected language')
parser.add_argument('--hostname', dest='hostname', help='hostname of '+
'current machine.')
parser.add_argument('--disableoffline', dest='offline',
action='store_false',
help='disables offline time logging instead of queuing logged time')
parser.add_argument('--hidefilenames', dest='hidefilenames',
action='store_true',
help='obfuscate file names; will not send file names to api')
parser.add_argument('--exclude', dest='exclude', action='append',
help='filename patterns to exclude from logging; POSIX regex '+
'syntax; can be used more than once')
parser.add_argument('--include', dest='include', action='append',
help='filename patterns to log; when used in combination with '+
'--exclude, files matching include will still be logged; '+
'POSIX regex syntax; can be used more than once')
parser.add_argument('--ignore', dest='ignore', action='append',
help=argparse.SUPPRESS)
parser.add_argument('--extra-heartbeats', dest='extra_heartbeats',
action='store_true',
help='reads extra heartbeats from STDIN as a JSON array until EOF')
parser.add_argument('--logfile', dest='logfile',
help='defaults to ~/.wakatime.log')
parser.add_argument('--apiurl', dest='api_url',
help='heartbeats api url; for debugging with a local server')
parser.add_argument('--timeout', dest='timeout', type=int,
help='number of seconds to wait when sending heartbeats to api; '+
'defaults to 60 seconds')
parser.add_argument('--config', dest='config',
help='defaults to ~/.wakatime.cfg')
parser.add_argument('--verbose', dest='verbose', action='store_true',
help='turns on debug messages in log file')
parser.add_argument('--version', action='version', version=__version__)

# parse command line arguments
args = parser.parse_args()

# use current unix epoch timestamp by default
if not args.timestamp:
args.timestamp = time.time()

# parse ~/.wakatime.cfg file
configs = parseConfigFile(args.config)

# update args from configs
if not args.hostname:
if configs.has_option('settings', 'hostname'):
args.hostname = configs.get('settings', 'hostname')
if not args.key:
default_key = None
if configs.has_option('settings', 'api_key'):
default_key = configs.get('settings', 'api_key')
elif configs.has_option('settings', 'apikey'):
default_key = configs.get('settings', 'apikey')
if default_key:
args.key = default_key
else:
try:
parser.error('Missing api key. Find your api key from wakatime.com/settings.')
except SystemExit:
raise SystemExit(AUTH_ERROR)

is_valid = not not re.match(r'^[a-f0-9]{8}-[a-f0-9]{4}-4[a-f0-9]{3}-[89ab][a-f0-9]{3}-[a-f0-9]{12}$', args.key, re.I)
if not is_valid:
try:
parser.error('Invalid api key. Find your api key from wakatime.com/settings.')
except SystemExit:
raise SystemExit(AUTH_ERROR)
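The api key check accepts only a version-4 UUID: the third group must start with `4` and the fourth with `8`, `9`, `a` or `b`, matched case-insensitively. A standalone version of that check, written with `bool()` instead of the `not not` idiom, might look like this; `is_valid_api_key` is a hypothetical name.

```python
import re

API_KEY_RE = re.compile(
    r'^[a-f0-9]{8}-[a-f0-9]{4}-4[a-f0-9]{3}-[89ab][a-f0-9]{3}-[a-f0-9]{12}$',
    re.IGNORECASE)


def is_valid_api_key(key):
    """True if key looks like a random (version 4) UUID; None counts as invalid."""
    return bool(API_KEY_RE.match(key or ''))
```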

if not args.entity:
if args.file:
args.entity = args.file
else:
parser.error('argument --entity is required')

if not args.language and args.alternate_language:
args.language = args.alternate_language

if not args.exclude:
args.exclude = []
if configs.has_option('settings', 'ignore'):
try:
for pattern in configs.get('settings', 'ignore').split("\n"):
if pattern.strip() != '':
args.exclude.append(pattern)
except TypeError: # pragma: nocover
pass
if configs.has_option('settings', 'exclude'):
try:
for pattern in configs.get('settings', 'exclude').split("\n"):
if pattern.strip() != '':
args.exclude.append(pattern)
except TypeError: # pragma: nocover
pass
if not args.include:
args.include = []
if configs.has_option('settings', 'include'):
try:
for pattern in configs.get('settings', 'include').split("\n"):
if pattern.strip() != '':
args.include.append(pattern)
except TypeError: # pragma: nocover
pass
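The `ignore`, `exclude` and `include` options in `~/.wakatime.cfg` can each hold several patterns, one per line, using INI continuation lines; the code splits the value on newlines and drops blank entries. The sketch assumes the standard-library `configparser` with interpolation turned off so `%` in a pattern is not special (the original falls back to a vendored copy), and `read_patterns` is a hypothetical helper.

```python
import configparser


def read_patterns(configs, section, option):
    """Return the non-blank lines of a multi-line option, or [] if it is absent."""
    if not configs.has_option(section, option):
        return []
    return [p for p in configs.get(section, option).split('\n') if p.strip() != '']


# Example config: "exclude" spans two continuation lines.
SAMPLE = r"""
[settings]
exclude =
    ^/tmp/
    \.log$
include = ^/tmp/keep/
"""
```

Parsing `SAMPLE` with `configparser.ConfigParser(interpolation=None)` yields `['^/tmp/', '\\.log$']` for `exclude`, because the empty first line of the value is filtered out.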

if args.hidefilenames:
args.hidefilenames = ['.*']
else:
args.hidefilenames = []
if configs.has_option('settings', 'hidefilenames'):
option = configs.get('settings', 'hidefilenames')
if option.strip().lower() == 'true':
args.hidefilenames = ['.*']
elif option.strip().lower() != 'false':
for pattern in option.split("\n"):
if pattern.strip() != '':
args.hidefilenames.append(pattern)
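The `hidefilenames` handling above is effectively tri-state: the command-line flag or a config value of `true` hides every file via the catch-all pattern `.*`, `false` hides none, and any other value is read as newline-separated patterns. A hypothetical standalone helper with the same rules:

```python
def parse_hidefilenames(cli_flag, option=None):
    """Translate the CLI flag and config value into a list of regex patterns."""
    if cli_flag:
        return ['.*']
    if option is None:
        return []
    value = option.strip().lower()
    if value == 'true':
        return ['.*']
    if value == 'false':
        return []
    # Anything else is a list of patterns, one per line.
    return [p for p in option.split('\n') if p.strip() != '']
```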

if args.offline and configs.has_option('settings', 'offline'):
args.offline = configs.getboolean('settings', 'offline')
if not args.proxy and configs.has_option('settings', 'proxy'):
args.proxy = configs.get('settings', 'proxy')
if args.proxy:
pattern = r'^((https?|socks5)://)?([^:@]+(:([^:@])+)?@)?[^:]+(:\d+)?$'
if '\\' in args.proxy:
pattern = r'^.*\\.+$'
is_valid = not not re.match(pattern, args.proxy, re.I)
if not is_valid:
parser.error('Invalid proxy. Must be in format ' +
'https://user:pass@host:port or ' +
'socks5://user:pass@host:port or ' +
'domain\\user:pass.')
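Proxy values are checked against one of two regexes. A value containing a backslash is taken to be the `domain\user:pass` form and only needs text on both sides of the backslash. Anything else must look like an optional `http://`, `https://` or `socks5://` scheme, optional `user:pass@` credentials, a host, and an optional numeric port. Pulled out into a hypothetical helper:

```python
import re

URL_PROXY = r'^((https?|socks5)://)?([^:@]+(:([^:@])+)?@)?[^:]+(:\d+)?$'
DOMAIN_PROXY = r'^.*\\.+$'


def is_valid_proxy(proxy):
    """Apply the same two patterns that parseArguments() uses."""
    pattern = DOMAIN_PROXY if '\\' in proxy else URL_PROXY
    return bool(re.match(pattern, proxy, re.IGNORECASE))
```

A non-numeric port such as `proxy.example.com:port` is rejected because the host part excludes `:` and the optional port group accepts only digits.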

if configs.has_option('settings', 'no_ssl_verify'):
args.nosslverify = configs.getboolean('settings', 'no_ssl_verify')
if not args.verbose and configs.has_option('settings', 'verbose'):
args.verbose = configs.getboolean('settings', 'verbose')
if not args.verbose and configs.has_option('settings', 'debug'):
args.verbose = configs.getboolean('settings', 'debug')
if not args.logfile and configs.has_option('settings', 'logfile'):
args.logfile = configs.get('settings', 'logfile')
if not args.logfile and os.environ.get('WAKATIME_HOME'):
home = os.environ.get('WAKATIME_HOME')
args.logfile = os.path.join(os.path.expanduser(home), '.wakatime.log')
if not args.api_url and configs.has_option('settings', 'api_url'):
args.api_url = configs.get('settings', 'api_url')
if not args.timeout and configs.has_option('settings', 'timeout'):
try:
args.timeout = int(configs.get('settings', 'timeout'))
except ValueError:
print(traceback.format_exc())

return args, configs
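When neither `--logfile` nor a `logfile` config option is given, `parseArguments()` places the log under `$WAKATIME_HOME` if that variable is set, and the `getConfigFile()` docstring in `configs.py` describes the same rule for `.wakatime.cfg`. The sketch assumes the home-directory fallback stated in the `--logfile` and `--config` help texts; `wakatime_path` is a hypothetical helper that takes the environment as a parameter so it can be tested without touching `os.environ`.

```python
import os


def wakatime_path(file_name, environ=None):
    """Return $WAKATIME_HOME/file_name if the variable is set, else ~/file_name."""
    if environ is None:
        environ = os.environ
    home = environ.get('WAKATIME_HOME')
    if home:
        return os.path.join(os.path.expanduser(home), file_name)
    return os.path.join(os.path.expanduser('~'), file_name)
```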

@@ -31,7 +31,7 @@ if is_py2: # pragma: nocover
try:
return unicode(text)
except:
return text
return text.decode('utf-8', 'replace')
open = codecs.open
basestring = basestring

@@ -52,7 +52,7 @@ elif is_py3: # pragma: nocover
try:
return str(text)
except:
return text
return text.decode('utf-8', 'replace')
open = open
basestring = (str, bytes)
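The `compat` hunks appear to change the fallback in `u()` from returning the raw value to `text.decode('utf-8', 'replace')`, so undecodable bytes become U+FFFD replacement characters instead of passing through as bytes. Only part of `u()` is visible here, so the Python 3 sketch below is a hypothetical equivalent that decodes bytes explicitly before falling back to `str()`.

```python
def u(text):
    """Return text as str, replacing invalid UTF-8 byte sequences with U+FFFD."""
    if text is None:
        return None
    if isinstance(text, bytes):
        return text.decode('utf-8', 'replace')
    return str(text)
```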

64
packages/wakatime/configs.py
Normal file
@@ -0,0 +1,64 @@
# -*- coding: utf-8 -*-
"""
wakatime.configs
~~~~~~~~~~~~~~~~

Config file parser.

:copyright: (c) 2016 Alan Hamlett.
:license: BSD, see LICENSE for more details.
"""


from __future__ import print_function

import os
import traceback

from .compat import open
from .constants import CONFIG_FILE_PARSE_ERROR


try:
import configparser
except ImportError:
from .packages import configparser


def getConfigFile():
"""Returns the config file location.

If $WAKATIME_HOME env variable is defined, returns
$WAKATIME_HOME/.wakatime.cfg, otherwise ~/.wakatime.cfg.
"""

fileName = '.wakatime.cfg'

home = os.environ.get('WAKATIME_HOME')
if home: