mirror of

https://github.com/wakatime/sublime-wakatime.git

synced 2023-08-10 21:13:02 +03:00

Compare commits

256 Commits

| Author | SHA1 | Date | |

|---|---|---|---|

| c100213351 | |||

| 43237a05c5 | |||

| c34eccad4a | |||

| 5077c5e290 | |||

| a387c08b44 | |||

| 3c2947cf79 | |||

| 0744d7209b | |||

| 54e6772a80 | |||

| b576dfafe6 | |||

| 5299efd6fa | |||

| 74583a6845 | |||

| 31f1f8ecdc | |||

| c1f58fd05d | |||

| 28063e3ac4 | |||

| 4d56aca1a1 | |||

| e15c514ef3 | |||

| fe582c84b9 | |||

| d7ee8675d8 | |||

| da17125b97 | |||

| 847223cdce | |||

| b405f99cff | |||

| b5210b77ce | |||

| fac1192228 | |||

| 4d6533b2ee | |||

| 7d2bd0b7c5 | |||

| 66f5f48f33 | |||

| e337afcc53 | |||

| 033c07f070 | |||

| c09767b58d | |||

| ac80c4268b | |||

| e5331d3086 | |||

| bebb46dda6 | |||

| 9767790063 | |||

| 75c219055d | |||

| ef77e1f178 | |||

| 4025decc12 | |||

| 086c700151 | |||

| 650bb6fa26 | |||

| 389c84673e | |||

| 6fa1321a95 | |||

| f1a8fcab44 | |||

| 3937b083c5 | |||

| 711aab0d18 | |||

| cb8ce3a54e | |||

| 7db5fe0a5d | |||

| cebcfaa0e9 | |||

| f8faed6e47 | |||

| fb303e048f | |||

| 4f11222c2b | |||

| 72b72dc9f0 | |||

| 1b07d0442b | |||

| fbd8e84ea1 | |||

| 01c0e7758e | |||

| 28556de3b6 | |||

| 809e43cfe5 | |||

| 22ddbe27b0 | |||

| ddaf60b8b0 | |||

| 2e6a87c67e | |||

| 01503b1c20 | |||

| 5a4ac9c11d | |||

| fa0a3aacb5 | |||

| d588451468 | |||

| 483d8f596e | |||

| 03f2d6d580 | |||

| e1390d7647 | |||

| c87bdd041c | |||

| 3a65395636 | |||

| 0b2f3aa9a4 | |||

| 58ef2cd794 | |||

| 04173d3bcc | |||

| 3206a07476 | |||

| 9330236816 | |||

| 885c11f01a | |||

| 8acda0157a | |||

| 935ddbd5f6 | |||

| b57b1eb696 | |||

| 6ec097b9d1 | |||

| b3ed36d3b2 | |||

| 3669e4df6a | |||

| 3504096082 | |||

| 5990947706 | |||

| 2246e31244 | |||

| b55fe702d3 | |||

| e0fbbb50bb | |||

| 32c0cb5a97 | |||

| 67d8b0d24f | |||

| b8b2f4944b | |||

| a20161164c | |||

| 405211bb07 | |||

| ffc879c4eb | |||

| 1e23919694 | |||

| b2086a3cd2 | |||

| 005b07520c | |||

| 60608bd322 | |||

| cde8f8f1de | |||

| 4adfca154c | |||

| f7b3924a30 | |||

| db00024455 | |||

| 9a6be7ca4e | |||

| 1ea9b2a761 | |||

| bd5e87e030 | |||

| 0256ff4a6a | |||

| 9d170b3276 | |||

| c54e575210 | |||

| 07513d8f10 | |||

| 30902cc050 | |||

| aa7962d49a | |||

| d8c662f3db | |||

| 10d88ebf2d | |||

| 2f28c561b1 | |||

| 24968507df | |||

| 641cd539ed | |||

| 0c65d7e5b2 | |||

| f0532f5b8e | |||

| 8094db9680 | |||

| bf20551849 | |||

| 2b6e32b578 | |||

| 363c3d38e2 | |||

| 88466d7db2 | |||

| 122fcbbee5 | |||

| c41fcec5d8 | |||

| be09b34d44 | |||

| e1ee1c1216 | |||

| a37061924b | |||

| da01fa268b | |||

| c279418651 | |||

| 5cf2c8f7ac | |||

| d1455e77a8 | |||

| 8499e7bafe | |||

| abc26a0864 | |||

| 71ad97ffe9 | |||

| 3ec5995c99 | |||

| 195cf4de36 | |||

| b39eefb4f5 | |||

| bbf5761e26 | |||

| c4df1dc633 | |||

| 360a491cda | |||

| f61a34eda7 | |||

| 48123d7409 | |||

| c8a15d7ac0 | |||

| 202df81e04 | |||

| 5e34f3f6a7 | |||

| d4441e5575 | |||

| 9eac8e2bd3 | |||

| 11d8fc3a09 | |||

| d1f1f51f23 | |||

| b10bb36c09 | |||

| dc9474befa | |||

| b910807e98 | |||

| bc770515f0 | |||

| 9e102d7c5c | |||

| 5c1770fb48 | |||

| 683397534c | |||

| 1c92017543 | |||

| fda1307668 | |||

| 1c84d457c5 | |||

| 1e680ce739 | |||

| 376adbb7d7 | |||

| e0040e185b | |||

| c4a88541d0 | |||

| 0cf621d177 | |||

| db9d6cec97 | |||

| 2c17f49a6b | |||

| 95116d6007 | |||

| 8c52596f8f | |||

| 3109817dc7 | |||

| 0c0f965763 | |||

| 1573e9c825 | |||

| a0b8f349c2 | |||

| 2fb60b1589 | |||

| 02786a744e | |||

| 729a4360ba | |||

| 8f45de85ec | |||

| 4672f70c87 | |||

| 46a9aae942 | |||

| 9e77ce2697 | |||

| 385ba818cc | |||

| 7492c3ce12 | |||

| 03eed88917 | |||

| 60a7ad96b5 | |||

| 2d1d5d336a | |||

| e659759b2d | |||

| a290e5d86d | |||

| d5b922bb10 | |||

| ec7b5e3530 | |||

| aa3f2e8af6 | |||

| f4e53cd682 | |||

| aba72b0f1e | |||

| 5b9d86a57d | |||

| fa40874635 | |||

| 6d4a4cf9eb | |||

| f628b8dd11 | |||

| f932ee9fc6 | |||

| 2f14009279 | |||

| 453d96bf9c | |||

| 9de153f156 | |||

| dcc782338d | |||

| 9d0dba988a | |||

| e76f2e514e | |||

| 224f7cd82a | |||

| 3cce525a84 | |||

| ce885501ad | |||

| c9448a9a19 | |||

| 04f8c61ebc | |||

| 04a4630024 | |||

| 02138220fd | |||

| d0b162bdd8 | |||

| 1b8895cd38 | |||

| 938bbb73d1 | |||

| 008fdc6b49 | |||

| a788625dd0 | |||

| bcbce681c3 | |||

| 35299db832 | |||

| eb7814624c | |||

| 1c092b2fd8 | |||

| 507ef95f71 | |||

| 9777bc7788 | |||

| 20b78defa6 | |||

| 8cb1c557d9 | |||

| 20a1965f13 | |||

| 0b802a554e | |||

| 30186c9b2c | |||

| 311a0b5309 | |||

| b7602d89fb | |||

| 305de46e32 | |||

| c574234927 | |||

| a69c50f470 | |||

| f4b40089f3 | |||

| 08394357b7 | |||

| 205d4eb163 | |||

| c4c27e4e9e | |||

| 9167eb2558 | |||

| eaa3bb5180 | |||

| 7755971d11 | |||

| 7634be5446 | |||

| 5e17ad88f6 | |||

| 24d0f65116 | |||

| a326046733 | |||

| 9bab00fd8b | |||

| b4a13a48b9 | |||

| 21601f9688 | |||

| 4c3ec87341 | |||

| b149d7fc87 | |||

| 52e6107c6e | |||

| b340637331 | |||

| 044867449a | |||

| 9e3f438823 | |||

| 887d55c3f3 | |||

| 19d54f3310 | |||

| 514a8762eb | |||

| 957c74d226 | |||

| 7b0432d6ff | |||

| 09754849be | |||

| 25ad48a97a | |||

| 3b2520afa9 | |||

| 77c2041ad3 |

2

AUTHORS

2

AUTHORS

@ -13,3 +13,5 @@ Patches and Suggestions

|

||||

|

||||

- Jimmy Selgen Nielsen <jimmy.selgen@gmail.com>

|

||||

- Patrik Kernstock <info@pkern.at>

|

||||

- Krishna Glick <krishnaglick@gmail.com>

|

||||

- Carlos Henrique Gandarez <gandarez@gmail.com>

|

||||

|

||||

560

HISTORY.rst

560

HISTORY.rst

@ -3,6 +3,566 @@ History

|

||||

-------

|

||||

|

||||

|

||||

11.1.0 (2022-11-12)

|

||||

++++++++++++++++++

|

||||

|

||||

- Support for api key vault cmd config.

|

||||

`#117 <https://github.com/wakatime/sublime-wakatime/pull/117>`_

|

||||

|

||||

|

||||

11.0.8 (2022-08-23)

|

||||

++++++++++++++++++

|

||||

|

||||

- Bugfix to prevent using empty selection object.

|

||||

`#116 <https://github.com/wakatime/sublime-wakatime/issues/116>`_

|

||||

|

||||

|

||||

11.0.7 (2022-06-25)

|

||||

++++++++++++++++++

|

||||

|

||||

- Check wakatime-cli versions in background thread.

|

||||

`#115 <https://github.com/wakatime/sublime-wakatime/issues/115>`_

|

||||

|

||||

|

||||

11.0.6 (2022-06-08)

|

||||

++++++++++++++++++

|

||||

|

||||

- Fix call to log helper.

|

||||

`#113 <https://github.com/wakatime/sublime-wakatime/issues/113>`_

|

||||

|

||||

|

||||

11.0.5 (2022-04-29)

|

||||

++++++++++++++++++

|

||||

|

||||

- Bugfix to not overwrite global urlopener in embedded Python.

|

||||

`#110 <https://github.com/wakatime/sublime-wakatime/issues/110>`_

|

||||

- Chmod wakatime-cli to be executable after updating on non-Windows platforms.

|

||||

|

||||

|

||||

11.0.4 (2022-01-06)

|

||||

++++++++++++++++++

|

||||

|

||||

- Copy wakatime-cli when symlink fails on Windows.

|

||||

`vim-wakatime#122 <https://github.com/wakatime/vim-wakatime/issues/122>`_

|

||||

- Fix lineno, cursorpos, and lines-in-file arguments.

|

||||

|

||||

|

||||

11.0.3 (2021-12-31)

|

||||

++++++++++++++++++

|

||||

|

||||

- Bugfix to not delete old wakatime-cli until finished downloading new version.

|

||||

`#107 <https://github.com/wakatime/sublime-wakatime/issues/107>`_

|

||||

|

||||

|

||||

11.0.2 (2021-11-17)

|

||||

++++++++++++++++++

|

||||

|

||||

- Bugfix to encode extra heartbeats cursorpos as int not str when sending to wakatime-cli.

|

||||

|

||||

|

||||

11.0.1 (2021-11-16)

|

||||

++++++++++++++++++

|

||||

|

||||

- Bugfix for install script when using system Python3 and duplicat INI keys.

|

||||

|

||||

|

||||

11.0.0 (2021-10-31)

|

||||

++++++++++++++++++

|

||||

|

||||

- Use new Go wakatime-cli.

|

||||

|

||||

|

||||

10.0.1 (2020-12-28)

|

||||

++++++++++++++++++

|

||||

|

||||

- Improve readme subtitle.

|

||||

|

||||

|

||||

10.0.0 (2020-12-28)

|

||||

++++++++++++++++++

|

||||

|

||||

- Support for standalone wakatime-cli, disabled by default.

|

||||

|

||||

|

||||

9.1.2 (2020-02-13)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v13.0.7.

|

||||

- Split bundled pygments library for Python 2.7+.

|

||||

- Upgrade pygments for py27+ to v2.5.2 development master.

|

||||

- Force requests to use bundled ca cert from certifi by default.

|

||||

- Upgrade bundled certifi to v2019.11.28.

|

||||

|

||||

|

||||

9.1.1 (2020-02-11)

|

||||

++++++++++++++++++

|

||||

|

||||

- Fix typo in python detection on Windows platform.

|

||||

|

||||

|

||||

9.1.0 (2020-02-09)

|

||||

++++++++++++++++++

|

||||

|

||||

- Detect python in Windows LocalAppData install locations.

|

||||

- Upgrade wakatime-cli to v13.0.4.

|

||||

- Bundle cryptography, pyopenssl, and ipaddress packages for improved SSL

|

||||

support on Python2.

|

||||

|

||||

|

||||

9.0.2 (2019-12-04)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v13.0.3.

|

||||

- Support slashes in Mercurial and Git branch names.

|

||||

`wakatime#199 <https://github.com/wakatime/wakatime/issues/199>`_

|

||||

|

||||

|

||||

9.0.1 (2019-11-24)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v13.0.2.

|

||||

- Filter dependencies longer than 200 characters.

|

||||

- Close sqlite connection even when error raised.

|

||||

`wakatime#196 <https://github.com/wakatime/wakatime/issues/196>`_

|

||||

- Detect ColdFusion as root language instead of HTML.

|

||||

- New arguments for reading and writing ini config file.

|

||||

- Today argument shows categories when available.

|

||||

- Prevent unnecessarily debug log when syncing offline heartbeats.

|

||||

- Support for Python 3.7.

|

||||

|

||||

|

||||

9.0.0 (2019-06-23)

|

||||

++++++++++++++++++

|

||||

|

||||

- New optional config option hide_branch_names.

|

||||

`wakatime#183 <https://github.com/wakatime/wakatime/issues/183>`_

|

||||

|

||||

|

||||

8.7.0 (2019-05-29)

|

||||

++++++++++++++++++

|

||||

|

||||

- Prevent creating user sublime-settings file when api key already exists in

|

||||

common wakatime.cfg file.

|

||||

`#98 <https://github.com/wakatime/sublime-wakatime/issues/98>`_

|

||||

|

||||

|

||||

8.6.1 (2019-05-28)

|

||||

++++++++++++++++++

|

||||

|

||||

- Fix parsing common wakatime.cfg file.

|

||||

`#98 <https://github.com/wakatime/sublime-wakatime/issues/98>`_

|

||||

|

||||

|

||||

8.6.0 (2019-05-27)

|

||||

++++++++++++++++++

|

||||

|

||||

- Prevent prompting for api key when found from config file.

|

||||

`#98 <https://github.com/wakatime/sublime-wakatime/issues/98>`_

|

||||

|

||||

|

||||

8.5.0 (2019-05-10)

|

||||

++++++++++++++++++

|

||||

|

||||

- Remove clock icon from status bar.

|

||||

- Use wakatime-cli to fetch status bar coding time.

|

||||

|

||||

|

||||

8.4.2 (2019-05-07)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v11.0.0.

|

||||

- Rename argument --show-time-today to --today.

|

||||

- New argument --show-time-today for printing Today's coding time.

|

||||

|

||||

|

||||

8.4.1 (2019-05-01)

|

||||

++++++++++++++++++

|

||||

|

||||

- Use api subdomain for fetching today's coding activity.

|

||||

|

||||

|

||||

8.4.0 (2019-05-01)

|

||||

++++++++++++++++++

|

||||

|

||||

- Show today's coding time in status bar.

|

||||

|

||||

|

||||

8.3.6 (2019-04-30)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v10.8.4.

|

||||

- Use wakatime fork of certifi package.

|

||||

`#95 <https://github.com/wakatime/sublime-wakatime/issues/95>`_

|

||||

|

||||

|

||||

8.3.5 (2019-04-30)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v10.8.3.

|

||||

- Upgrade certifi to version 2019.03.09.

|

||||

|

||||

|

||||

8.3.4 (2019-03-30)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v10.8.2.

|

||||

- Detect go.mod files as Go language.

|

||||

`jetbrains-wakatime#119 <https://github.com/wakatime/jetbrains-wakatime/issues/119>`_

|

||||

- Detect C++ language from all C++ file extensions.

|

||||

`vscode-wakatime#87 <https://github.com/wakatime/vscode-wakatime/issues/87>`_

|

||||

- Add ssl_certs_file arg and config for custom ca bundles.

|

||||

`wakatime#164 <https://github.com/wakatime/wakatime/issues/164>`_

|

||||

- Fix bug causing random project names when hide project names enabled.

|

||||

`vscode-wakatime#162 <https://github.com/wakatime/vscode-wakatime/issues/61>`_

|

||||

- Add support for UNC network shares without drive letter mapped on Winows.

|

||||

`wakatime#162 <https://github.com/wakatime/wakatime/issues/162>`_

|

||||

|

||||

|

||||

8.3.3 (2018-12-19)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v10.6.1.

|

||||

- Correctly parse include_only_with_project_file when set to false.

|

||||

`wakatime#161 <https://github.com/wakatime/wakatime/issues/161>`_

|

||||

- Support language argument for non-file entity types.

|

||||

- Send 25 heartbeats per API request.

|

||||

- New category "Writing Tests".

|

||||

`wakatime#156 <https://github.com/wakatime/wakatime/issues/156>`_

|

||||

- Fix bug caused by git config section without any submodule option defined.

|

||||

`wakatime#152 <https://github.com/wakatime/wakatime/issues/152>`_

|

||||

|

||||

|

||||

8.3.2 (2018-10-06)

|

||||

++++++++++++++++++

|

||||

|

||||

- Send buffered heartbeats to API every 30 seconds.

|

||||

|

||||

|

||||

8.3.1 (2018-10-05)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v10.4.1.

|

||||

- Send 50 offline heartbeats to API per request with 1 second delay in between.

|

||||

|

||||

|

||||

8.3.0 (2018-10-03)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v10.4.0.

|

||||

- Support logging coding activity to remote network drive files on Windows

|

||||

platform by detecting UNC path from drive letter.

|

||||

`wakatime#72 <https://github.com/wakatime/wakatime/issues/72>`_

|

||||

|

||||

|

||||

8.2.0 (2018-09-30)

|

||||

++++++++++++++++++

|

||||

|

||||

- Prevent opening cmd window on Windows when running wakatime-cli.

|

||||

`#91 <https://github.com/wakatime/sublime-wakatime/issues/91>`_

|

||||

- Upgrade wakatime-cli to v10.3.0.

|

||||

- Re-enable detecting projects from Subversion folder on Windows platform.

|

||||

- Prevent opening cmd window on Windows when detecting project from Subversion.

|

||||

- Run tests on Windows using Appveyor.

|

||||

|

||||

|

||||

8.1.2 (2018-09-20)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v10.2.4.

|

||||

- Default --sync-offline-activity to 100 instead of 5, so offline coding is

|

||||

synced to dashboard faster.

|

||||

- Batch heartbeats in groups of 10 per api request.

|

||||

- New config hide_project_name and argument --hide-project-names for

|

||||

obfuscating project names when sending coding activity to api.

|

||||

- Fix mispelled Gosu language.

|

||||

`wakatime#137 <https://github.com/wakatime/wakatime/issues/137>`_

|

||||

- Remove metadata when hiding project or file names.

|

||||

- New --local-file argument to be used when --entity is a remote file.

|

||||

- New argument --sync-offline-activity for configuring the maximum offline

|

||||

heartbeats to sync to the WakaTime API.

|

||||

|

||||

|

||||

8.1.1 (2018-04-26)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v10.2.1.

|

||||

- Force forward slash for file paths.

|

||||

- New --category argument.

|

||||

- New --exclude-unknown-project argument and corresponding config setting.

|

||||

- Support for project detection from git worktree folders.

|

||||

|

||||

|

||||

8.1.0 (2018-04-03)

|

||||

++++++++++++++++++

|

||||

|

||||

- Prefer Python3 over Python2 when running wakatime-cli core.

|

||||

- Improve detection of Python3 on Ubuntu 17.10 platforms.

|

||||

|

||||

|

||||

8.0.8 (2018-03-15)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v10.1.3.

|

||||

- Smarter C vs C++ vs Objective-C language detection.

|

||||

|

||||

|

||||

8.0.7 (2018-03-15)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v10.1.2.

|

||||

- Detect dependencies from Swift, Objective-C, TypeScript and JavaScript files.

|

||||

- Categorize .mjs files as JavaScript.

|

||||

`wakatime#121 <https://github.com/wakatime/wakatime/issues/121>`_

|

||||

- Detect dependencies from Elm, Haskell, Haxe, Kotlin, Rust, and Scala files.

|

||||

- Improved Matlab vs Objective-C language detection.

|

||||

`wakatime#129 <https://github.com/wakatime/wakatime/issues/129>`_

|

||||

|

||||

|

||||

8.0.6 (2018-01-04)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v10.1.0.

|

||||

- Ability to only track folders containing a .wakatime-project file using new

|

||||

include_only_with_project_file argument and config option.

|

||||

|

||||

|

||||

8.0.5 (2017-11-24)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v10.0.5.

|

||||

- Fix bug that caused heartbeats to be cached locally instead of sent to API.

|

||||

|

||||

|

||||

8.0.4 (2017-11-23)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v10.0.4.

|

||||

- Improve Java dependency detection.

|

||||

- Skip null or missing heartbeats from extra heartbeats argument.

|

||||

|

||||

|

||||

8.0.3 (2017-11-22)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v10.0.3.

|

||||

- Support saving unicode heartbeats when working offline.

|

||||

`wakatime#112 <https://github.com/wakatime/wakatime/issues/112>`_

|

||||

|

||||

|

||||

8.0.2 (2017-11-15)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v10.0.2.

|

||||

- Limit bulk syncing to 5 heartbeats per request.

|

||||

`wakatime#109 <https://github.com/wakatime/wakatime/issues/109>`_

|

||||

|

||||

|

||||

8.0.1 (2017-11-09)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v10.0.1.

|

||||

- Parse array of results from bulk heartbeats endpoint, only saving heartbeats

|

||||

to local offline cache when they were not accepted by the api.

|

||||

|

||||

|

||||

8.0.0 (2017-11-08)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v10.0.0.

|

||||

- Upload multiple heartbeats to bulk endpoint for improved network performance.

|

||||

`wakatime#107 <https://github.com/wakatime/wakatime/issues/107>`_

|

||||

|

||||

|

||||

7.0.26 (2017-11-07)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v9.0.1.

|

||||

- Fix bug causing 401 response when hidefilenames is enabled.

|

||||

`wakatime#106 <https://github.com/wakatime/wakatime/issues/106>`_

|

||||

|

||||

|

||||

7.0.25 (2017-11-05)

|

||||

++++++++++++++++++

|

||||

|

||||

- Ability to override python binary location in sublime-settings file.

|

||||

`#78 <https://github.com/wakatime/sublime-wakatime/issues/78>`_

|

||||

- Upgrade wakatime-cli to v9.0.0.

|

||||

- Detect project and branch names from git submodules.

|

||||

`wakatime#105 <https://github.com/wakatime/wakatime/issues/105>`_

|

||||

|

||||

|

||||

7.0.24 (2017-10-29)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v8.0.5.

|

||||

- Allow passing string arguments wrapped in extra quotes for plugins which

|

||||

cannot properly escape spaces in arguments.

|

||||

- Upgrade pytz to v2017.2.

|

||||

- Upgrade requests to v2.18.4.

|

||||

- Upgrade tzlocal to v1.4.

|

||||

- Use WAKATIME_HOME env variable for offline and session caching.

|

||||

`wakatime#102 <https://github.com/wakatime/wakatime/issues/102>`_

|

||||

|

||||

|

||||

7.0.23 (2017-09-14)

|

||||

++++++++++++++++++

|

||||

|

||||

- Add "include" setting to bypass ignored files.

|

||||

`#89 <https://github.com/wakatime/sublime-wakatime/issues/89>`_

|

||||

|

||||

|

||||

7.0.22 (2017-06-08)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v8.0.3.

|

||||

- Improve Matlab language detection.

|

||||

|

||||

|

||||

7.0.21 (2017-05-24)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v8.0.2.

|

||||

- Only treat proxy string as NTLM proxy after unable to connect with HTTPS and

|

||||

SOCKS proxy.

|

||||

- Support running automated tests on Linux, OS X, and Windows.

|

||||

- Ability to disable SSL cert verification.

|

||||

`wakatime#90 <https://github.com/wakatime/wakatime/issues/90>`_

|

||||

- Disable line count stats for files larger than 2MB to improve performance.

|

||||

- Print error saying Python needs upgrading when requests can't be imported.

|

||||

|

||||

|

||||

7.0.20 (2017-04-10)

|

||||

++++++++++++++++++

|

||||

|

||||

- Fix install instructions formatting.

|

||||

|

||||

|

||||

7.0.19 (2017-04-10)

|

||||

++++++++++++++++++

|

||||

|

||||

- Remove /var/www/ from default ignored folders.

|

||||

|

||||

|

||||

7.0.18 (2017-03-16)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v8.0.0.

|

||||

- No longer creating ~/.wakatime.cfg file, since only using Sublime settings.

|

||||

|

||||

|

||||

7.0.17 (2017-03-01)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v7.0.4.

|

||||

|

||||

|

||||

7.0.16 (2017-02-20)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v7.0.2.

|

||||

|

||||

|

||||

7.0.15 (2017-02-13)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v6.2.2.

|

||||

- Upgrade pygments library to v2.2.0 for improved language detection.

|

||||

|

||||

|

||||

7.0.14 (2017-02-08)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v6.2.1.

|

||||

- Allow boolean or list of regex patterns for hidefilenames config setting.

|

||||

|

||||

|

||||

7.0.13 (2016-11-11)

|

||||

++++++++++++++++++

|

||||

|

||||

- Support old Sublime Text with Python 2.6.

|

||||

- Fix bug that prevented reading default api key from existing config file.

|

||||

|

||||

|

||||

7.0.12 (2016-10-24)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v6.2.0.

|

||||

- Exit with status code 104 when api key is missing or invalid. Exit with

|

||||

status code 103 when config file missing or invalid.

|

||||

- New WAKATIME_HOME env variable for setting path to config and log files.

|

||||

- Improve debug warning message from unsupported dependency parsers.

|

||||

|

||||

|

||||

7.0.11 (2016-09-23)

|

||||

++++++++++++++++++

|

||||

|

||||

- Handle UnicodeDecodeError when when logging.

|

||||

`#68 <https://github.com/wakatime/sublime-wakatime/issues/68>`_

|

||||

|

||||

|

||||

7.0.10 (2016-09-22)

|

||||

++++++++++++++++++

|

||||

|

||||

- Handle UnicodeDecodeError when looking for python.

|

||||

`#68 <https://github.com/wakatime/sublime-wakatime/issues/68>`_

|

||||

- Upgrade wakatime-cli to v6.0.9.

|

||||

|

||||

|

||||

7.0.9 (2016-09-02)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v6.0.8.

|

||||

|

||||

|

||||

7.0.8 (2016-07-21)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to master version to fix debug logging encoding bug.

|

||||

|

||||

|

||||

7.0.7 (2016-07-06)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v6.0.7.

|

||||

- Handle unknown exceptions from requests library by deleting cached session

|

||||

object because it could be from a previous conflicting version.

|

||||

- New hostname setting in config file to set machine hostname. Hostname

|

||||

argument takes priority over hostname from config file.

|

||||

- Prevent logging unrelated exception when logging tracebacks.

|

||||

- Use correct namespace for pygments.lexers.ClassNotFound exception so it is

|

||||

caught when dependency detection not available for a language.

|

||||

|

||||

|

||||

7.0.6 (2016-06-13)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v6.0.5.

|

||||

- Upgrade pygments to v2.1.3 for better language coverage.

|

||||

|

||||

|

||||

7.0.5 (2016-06-08)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to master version to fix bug in urllib3 package causing

|

||||

unhandled retry exceptions.

|

||||

- Prevent tracking git branch with detached head.

|

||||

|

||||

|

||||

7.0.4 (2016-05-21)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v6.0.3.

|

||||

- Upgrade requests dependency to v2.10.0.

|

||||

- Support for SOCKS proxies.

|

||||

|

||||

|

||||

7.0.3 (2016-05-16)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v6.0.2.

|

||||

- Prevent popup on Mac when xcode-tools is not installed.

|

||||

|

||||

|

||||

7.0.2 (2016-04-29)

|

||||

++++++++++++++++++

|

||||

|

||||

|

||||

3

LICENSE

3

LICENSE

@ -1,7 +1,6 @@

|

||||

BSD 3-Clause License

|

||||

|

||||

Copyright (c) 2014 by the respective authors (see AUTHORS file).

|

||||

All rights reserved.

|

||||

Copyright (c) 2014 Alan Hamlett.

|

||||

|

||||

Redistribution and use in source and binary forms, with or without

|

||||

modification, are permitted provided that the following conditions are met:

|

||||

|

||||

50

README.md

50

README.md

@ -1,56 +1,52 @@

|

||||

sublime-wakatime

|

||||

================

|

||||

# sublime-wakatime

|

||||

|

||||

Metrics, insights, and time tracking automatically generated from your programming activity.

|

||||

[](https://wakatime.com/badge/github/wakatime/sublime-wakatime)

|

||||

|

||||

[WakaTime][wakatime] is an open source Sublime Text plugin for metrics, insights, and time tracking automatically generated from your programming activity.

|

||||

|

||||

Installation

|

||||

------------

|

||||

## Installation

|

||||

|

||||

1. Install [Package Control](https://packagecontrol.io/installation).

|

||||

|

||||

2. Using [Package Control](https://packagecontrol.io/docs/usage):

|

||||

2. In Sublime, press `ctrl+shift+p`(Windows, Linux) or `cmd+shift+p`(OS X).

|

||||

|

||||

a) Inside Sublime, press `ctrl+shift+p`(Windows, Linux) or `cmd+shift+p`(OS X).

|

||||

3. Type `install`, then press `enter` with `Package Control: Install Package` selected.

|

||||

|

||||

b) Type `install`, then press `enter` with `Package Control: Install Package` selected.

|

||||

4. Type `wakatime`, then press `enter` with the `WakaTime` plugin selected.

|

||||

|

||||

c) Type `wakatime`, then press `enter` with the `WakaTime` plugin selected.

|

||||

5. Enter your [api key](https://wakatime.com/settings#apikey), then press `enter`.

|

||||

|

||||

3. Enter your [api key](https://wakatime.com/settings#apikey), then press `enter`.

|

||||

6. Use Sublime and your coding activity will be displayed on your [WakaTime dashboard](https://wakatime.com).

|

||||

|

||||

4. Use Sublime and your time will be tracked for you automatically.

|

||||

|

||||

5. Visit https://wakatime.com/dashboard to see your logged time.

|

||||

|

||||

|

||||

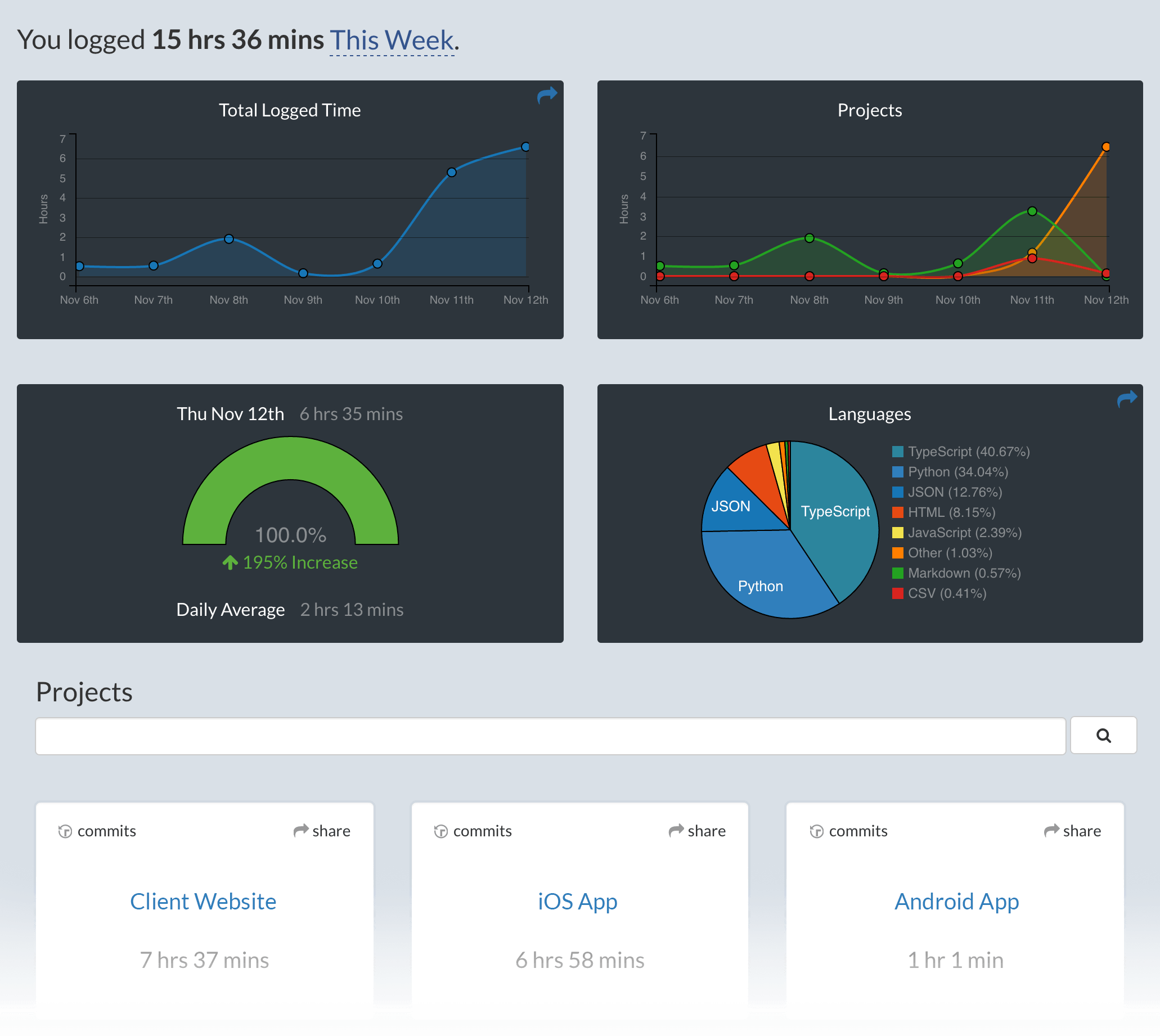

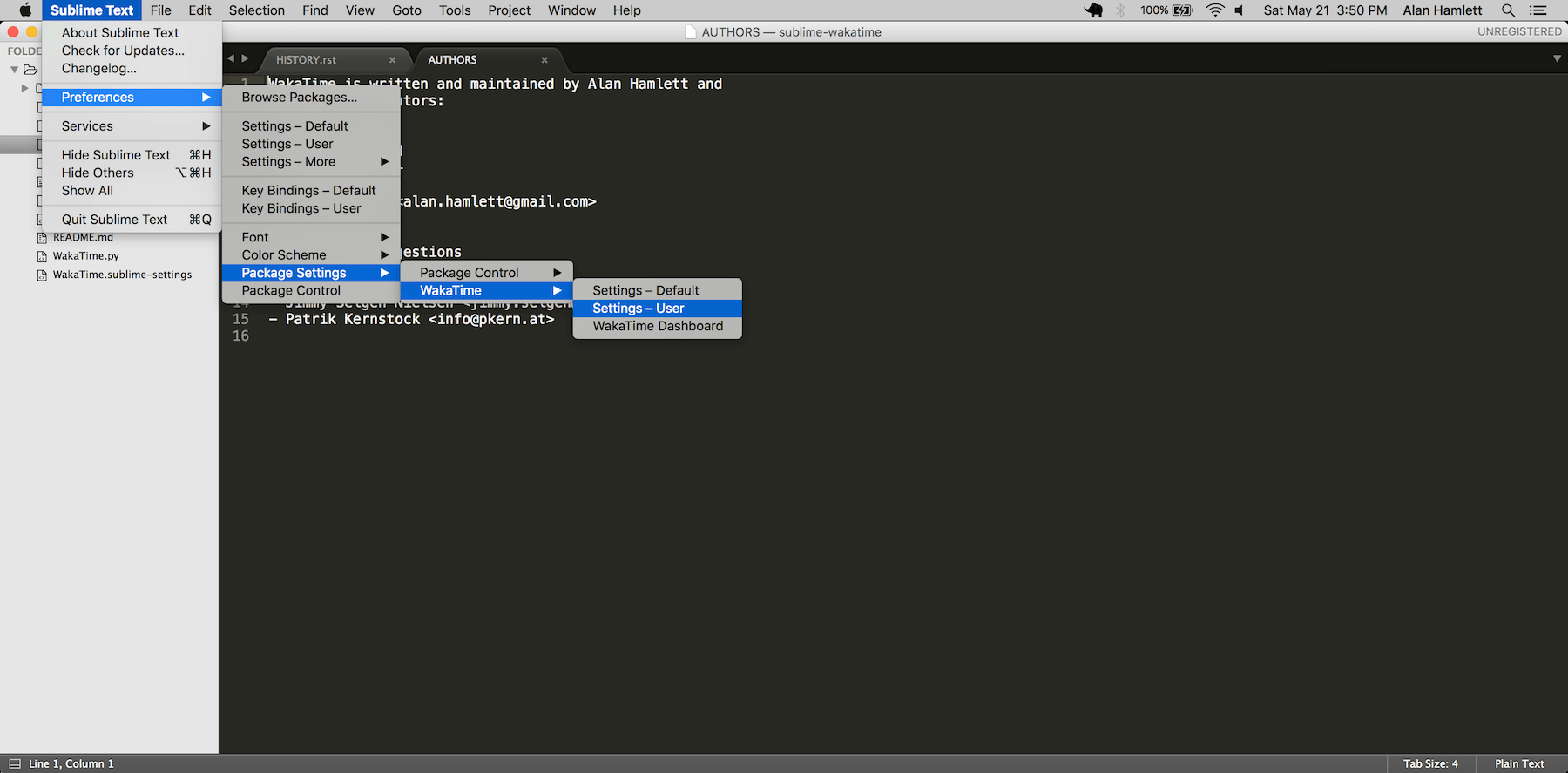

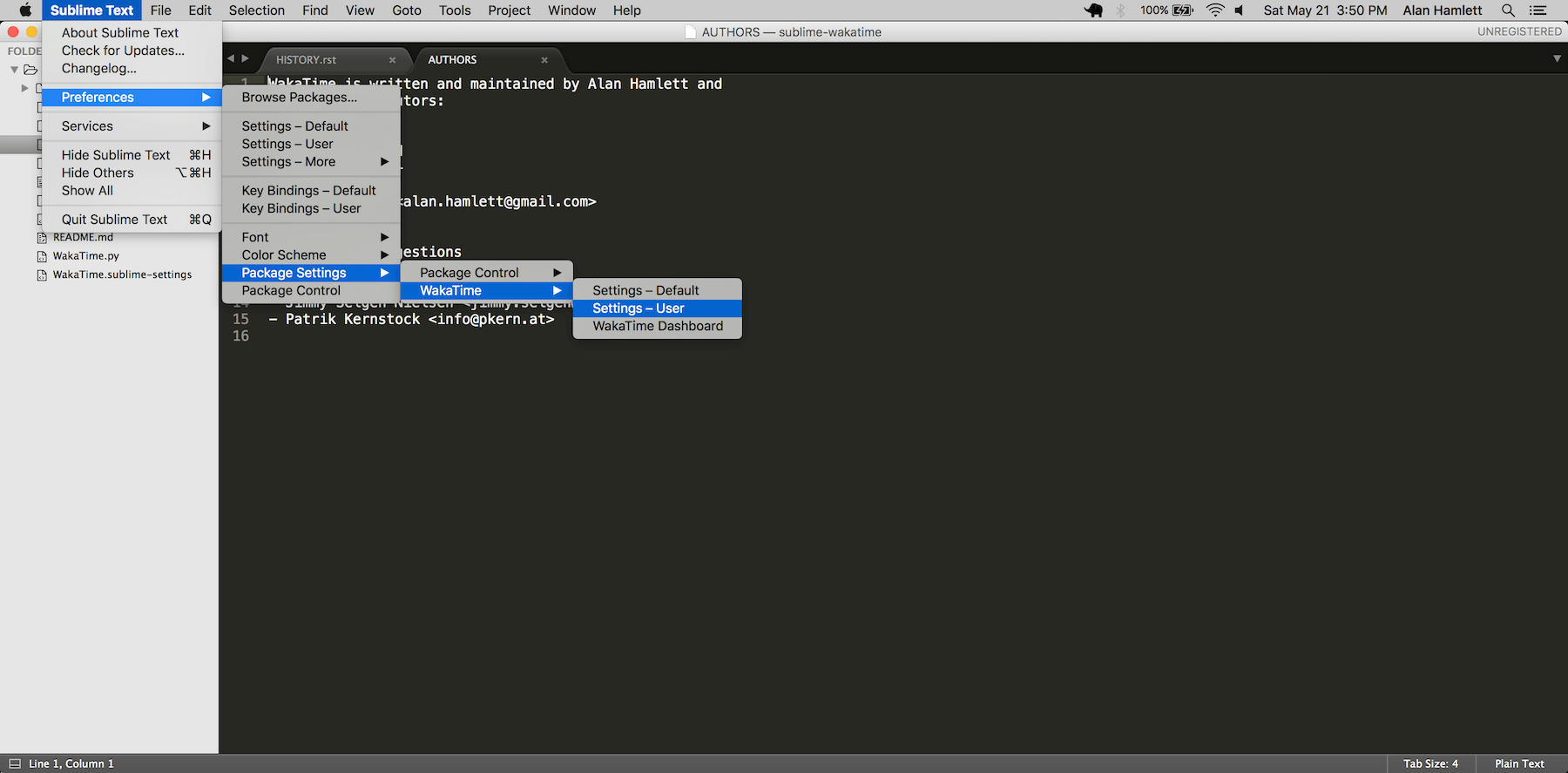

Screen Shots

|

||||

------------

|

||||

## Screen Shots

|

||||

|

||||

|

||||

|

||||

|

||||

Unresponsive Plugin Warning

|

||||

---------------------------

|

||||

## Unresponsive Plugin Warning

|

||||

|

||||

In Sublime Text 2, if you get a warning message:

|

||||

|

||||

A plugin (WakaTime) may be making Sublime Text unresponsive by taking too long (0.017332s) in its on_modified callback.

|

||||

|

||||

To fix this, go to `Preferences > Settings - User` then add the following setting:

|

||||

To fix this, go to `Preferences → Settings - User` then add the following setting:

|

||||

|

||||

`"detect_slow_plugins": false`

|

||||

|

||||

|

||||

Troubleshooting

|

||||

---------------

|

||||

## Troubleshooting

|

||||

|

||||

First, turn on debug mode in your `WakaTime.sublime-settings` file.

|

||||

|

||||

|

||||

|

||||

|

||||

Add the line: `"debug": true`

|

||||

|

||||

Then, open your Sublime Console with `View -> Show Console` to see the plugin executing the wakatime cli process when sending a heartbeat. Also, tail your `$HOME/.wakatime.log` file to debug wakatime cli problems.

|

||||

Then, open your Sublime Console with `View → Show Console` ( CTRL + \` ) to see the plugin executing the wakatime cli process when sending a heartbeat.

|

||||

Also, tail your `$HOME/.wakatime.log` file to debug wakatime cli problems.

|

||||

|

||||

For more general troubleshooting information, see [wakatime/wakatime#troubleshooting](https://github.com/wakatime/wakatime#troubleshooting).

|

||||

The [How to Debug Plugins][how to debug] guide shows how to check when coding activity was last received from your editor using the [User Agents API][user agents api].

|

||||

For more general troubleshooting info, see the [wakatime-cli Troubleshooting Section][wakatime-cli-help].

|

||||

|

||||

[wakatime]: https://wakatime.com/sublime-text

|

||||

[wakatime-cli-help]: https://github.com/wakatime/wakatime#troubleshooting

|

||||

[how to debug]: https://wakatime.com/faq#debug-plugins

|

||||

[user agents api]: https://wakatime.com/developers#user_agents

|

||||

|

||||

976

WakaTime.py

976

WakaTime.py

File diff suppressed because it is too large

Load Diff

@ -3,21 +3,38 @@

|

||||

// This settings file will be overwritten when upgrading.

|

||||

|

||||

{

|

||||

// Your api key from https://wakatime.com/#apikey

|

||||

// Your api key from https://wakatime.com/api-key

|

||||

// Set this in your User specific WakaTime.sublime-settings file.

|

||||

"api_key": "",

|

||||

|

||||

// Ignore files; Files (including absolute paths) that match one of these

|

||||

// POSIX regular expressions will not be logged.

|

||||

"ignore": ["^/tmp/", "^/etc/", "^/var/", "COMMIT_EDITMSG$", "PULLREQ_EDITMSG$", "MERGE_MSG$", "TAG_EDITMSG$"],

|

||||

|

||||

|

||||

// Debug mode. Set to true for verbose logging. Defaults to false.

|

||||

"debug": false,

|

||||

|

||||

// Status bar message. Set to false to hide status bar message.

|

||||

|

||||

// Proxy with format https://user:pass@host:port or socks5://user:pass@host:port or domain\\user:pass.

|

||||

"proxy": "",

|

||||

|

||||

// Ignore files; Files (including absolute paths) that match one of these

|

||||

// POSIX regular expressions will not be logged.

|

||||

"ignore": ["^/tmp/", "^/etc/", "^/var/(?!www/).*", "COMMIT_EDITMSG$", "PULLREQ_EDITMSG$", "MERGE_MSG$", "TAG_EDITMSG$"],

|

||||

|

||||

// Include files; Files (including absolute paths) that match one of these

|

||||

// POSIX regular expressions will bypass your ignore setting.

|

||||

"include": [".*"],

|

||||

|

||||

// Status bar for surfacing errors and displaying today's coding time. Set

|

||||

// to false to hide. Defaults to true.

|

||||

"status_bar_enabled": true,

|

||||

|

||||

// Show today's coding activity in WakaTime status bar item.

|

||||

// Defaults to true.

|

||||

"status_bar_message": true,

|

||||

|

||||

// Status bar message format.

|

||||

"status_bar_message_fmt": "WakaTime {status} %I:%M %p"

|

||||

"status_bar_coding_activity": true,

|

||||

|

||||

// Obfuscate file paths when sending to API. Your dashboard will no longer display coding activity per file.

|

||||

"hidefilenames": false,

|

||||

|

||||

// Python binary location. Uses python from your PATH by default.

|

||||

"python_binary": "",

|

||||

|

||||

// Use standalone compiled Python wakatime-cli (Will not work on ARM Macs)

|

||||

"standalone": false

|

||||

}

|

||||

|

||||

@ -1,9 +0,0 @@

|

||||

__title__ = 'wakatime'

|

||||

__description__ = 'Common interface to the WakaTime api.'

|

||||

__url__ = 'https://github.com/wakatime/wakatime'

|

||||

__version_info__ = ('6', '0', '1')

|

||||

__version__ = '.'.join(__version_info__)

|

||||

__author__ = 'Alan Hamlett'

|

||||

__author_email__ = 'alan@wakatime.com'

|

||||

__license__ = 'BSD'

|

||||

__copyright__ = 'Copyright 2016 Alan Hamlett'

|

||||

@ -1,17 +0,0 @@

|

||||

# -*- coding: utf-8 -*-

|

||||

"""

|

||||

wakatime

|

||||

~~~~~~~~

|

||||

|

||||

Common interface to the WakaTime api.

|

||||

http://wakatime.com

|

||||

|

||||

:copyright: (c) 2013 Alan Hamlett.

|

||||

:license: BSD, see LICENSE for more details.

|

||||

"""

|

||||

|

||||

|

||||

__all__ = ['main']

|

||||

|

||||

|

||||

from .main import execute

|

||||

@ -1,35 +0,0 @@

|

||||

# -*- coding: utf-8 -*-

|

||||

"""

|

||||

wakatime.cli

|

||||

~~~~~~~~~~~~

|

||||

|

||||

Command-line entry point.

|

||||

|

||||

:copyright: (c) 2013 Alan Hamlett.

|

||||

:license: BSD, see LICENSE for more details.

|

||||

"""

|

||||

|

||||

import os

|

||||

import sys

|

||||

|

||||

|

||||

# get path to local wakatime package

|

||||

package_folder = os.path.dirname(os.path.dirname(os.path.abspath(__file__)))

|

||||

|

||||

# add local wakatime package to sys.path

|

||||

sys.path.insert(0, package_folder)

|

||||

|

||||

# import local wakatime package

|

||||

try:

|

||||

import wakatime

|

||||

except (TypeError, ImportError):

|

||||

# on Windows, non-ASCII characters in import path can be fixed using

|

||||

# the script path from sys.argv[0].

|

||||

# More info at https://github.com/wakatime/wakatime/issues/32

|

||||

package_folder = os.path.dirname(os.path.dirname(os.path.abspath(sys.argv[0])))

|

||||

sys.path.insert(0, package_folder)

|

||||

import wakatime

|

||||

|

||||

|

||||

if __name__ == '__main__':

|

||||

sys.exit(wakatime.execute(sys.argv[1:]))

|

||||

@ -1,93 +0,0 @@

|

||||

# -*- coding: utf-8 -*-

|

||||

"""

|

||||

wakatime.compat

|

||||

~~~~~~~~~~~~~~~

|

||||

|

||||

For working with Python2 and Python3.

|

||||

|

||||

:copyright: (c) 2014 Alan Hamlett.

|

||||

:license: BSD, see LICENSE for more details.

|

||||

"""

|

||||

|

||||

import codecs

|

||||

import sys

|

||||

|

||||

|

||||

is_py2 = (sys.version_info[0] == 2)

|

||||

is_py3 = (sys.version_info[0] == 3)

|

||||

|

||||

|

||||

if is_py2: # pragma: nocover

|

||||

|

||||

def u(text):

|

||||

if text is None:

|

||||

return None

|

||||

try:

|

||||

return text.decode('utf-8')

|

||||

except:

|

||||

try:

|

||||

return text.decode(sys.getdefaultencoding())

|

||||

except:

|

||||

try:

|

||||

return unicode(text)

|

||||

except:

|

||||

return text

|

||||

open = codecs.open

|

||||

basestring = basestring

|

||||

|

||||

|

||||

elif is_py3: # pragma: nocover

|

||||

|

||||

def u(text):

|

||||

if text is None:

|

||||

return None

|

||||

if isinstance(text, bytes):

|

||||

try:

|

||||

return text.decode('utf-8')

|

||||

except:

|

||||

try:

|

||||

return text.decode(sys.getdefaultencoding())

|

||||

except:

|

||||

pass

|

||||

try:

|

||||

return str(text)

|

||||

except:

|

||||

return text

|

||||

open = open

|

||||

basestring = (str, bytes)

|

||||

|

||||

|

||||

try:

|

||||

from importlib import import_module

|

||||

except ImportError: # pragma: nocover

|

||||

def _resolve_name(name, package, level):

|

||||

"""Return the absolute name of the module to be imported."""

|

||||

if not hasattr(package, 'rindex'):

|

||||

raise ValueError("'package' not set to a string")

|

||||

dot = len(package)

|

||||

for x in xrange(level, 1, -1):

|

||||

try:

|

||||

dot = package.rindex('.', 0, dot)

|

||||

except ValueError:

|

||||

raise ValueError("attempted relative import beyond top-level "

|

||||

"package")

|

||||

return "%s.%s" % (package[:dot], name)

|

||||

|

||||

def import_module(name, package=None):

|

||||

"""Import a module.

|

||||

The 'package' argument is required when performing a relative import.

|

||||

It specifies the package to use as the anchor point from which to

|

||||

resolve the relative import to an absolute import.

|

||||

"""

|

||||

if name.startswith('.'):

|

||||

if not package:

|

||||

raise TypeError("relative imports require the 'package' "

|

||||

+ "argument")

|

||||

level = 0

|

||||

for character in name:

|

||||

if character != '.':

|

||||

break

|

||||

level += 1

|

||||

name = _resolve_name(name[level:], package, level)

|

||||

__import__(name)

|

||||

return sys.modules[name]

|

||||

@ -1,18 +0,0 @@

|

||||

# -*- coding: utf-8 -*-

|

||||

"""

|

||||

wakatime.constants

|

||||

~~~~~~~~~~~~~~~~~~

|

||||

|

||||

Constant variable definitions.

|

||||

|

||||

:copyright: (c) 2016 Alan Hamlett.

|

||||

:license: BSD, see LICENSE for more details.

|

||||

"""

|

||||

|

||||

|

||||

SUCCESS = 0

|

||||

API_ERROR = 102

|

||||

CONFIG_FILE_PARSE_ERROR = 103

|

||||

AUTH_ERROR = 104

|

||||

UNKNOWN_ERROR = 105

|

||||

MALFORMED_HEARTBEAT_ERROR = 106

|

||||

@ -1,130 +0,0 @@

|

||||

# -*- coding: utf-8 -*-

|

||||

"""

|

||||

wakatime.dependencies

|

||||

~~~~~~~~~~~~~~~~~~~~~

|

||||

|

||||

Parse dependencies from a source code file.

|

||||

|

||||

:copyright: (c) 2014 Alan Hamlett.

|

||||

:license: BSD, see LICENSE for more details.

|

||||

"""

|

||||

|

||||

import logging

|

||||

import re

|

||||

import sys

|

||||

import traceback

|

||||

|

||||

from ..compat import u, open, import_module

|

||||

from ..exceptions import NotYetImplemented

|

||||

|

||||

|

||||

log = logging.getLogger('WakaTime')

|

||||

|

||||

|

||||

class TokenParser(object):

|

||||

"""The base class for all dependency parsers. To add support for your

|

||||

language, inherit from this class and implement the :meth:`parse` method

|

||||

to return a list of dependency strings.

|

||||

"""

|

||||

exclude = []

|

||||

|

||||

def __init__(self, source_file, lexer=None):

|

||||

self._tokens = None

|

||||

self.dependencies = []

|

||||

self.source_file = source_file

|

||||

self.lexer = lexer

|

||||

self.exclude = [re.compile(x, re.IGNORECASE) for x in self.exclude]

|

||||

|

||||

@property

|

||||

def tokens(self):

|

||||

if self._tokens is None:

|

||||

self._tokens = self._extract_tokens()

|

||||

return self._tokens

|

||||

|

||||

def parse(self, tokens=[]):

|

||||

""" Should return a list of dependencies.

|

||||

"""

|

||||

raise NotYetImplemented()

|

||||

|

||||

def append(self, dep, truncate=False, separator=None, truncate_to=None,

|

||||

strip_whitespace=True):

|

||||

self._save_dependency(

|

||||

dep,

|

||||

truncate=truncate,

|

||||

truncate_to=truncate_to,

|

||||

separator=separator,

|

||||

strip_whitespace=strip_whitespace,

|

||||

)

|

||||

|

||||

def partial(self, token):

|

||||

return u(token).split('.')[-1]

|

||||

|

||||

def _extract_tokens(self):

|

||||

if self.lexer:

|

||||

try:

|

||||

with open(self.source_file, 'r', encoding='utf-8') as fh:

|

||||

return self.lexer.get_tokens_unprocessed(fh.read(512000))

|

||||

except:

|

||||

pass

|

||||

try:

|

||||

with open(self.source_file, 'r', encoding=sys.getfilesystemencoding()) as fh:

|

||||

return self.lexer.get_tokens_unprocessed(fh.read(512000))

|

||||

except:

|

||||

pass

|

||||

return []

|

||||

|

||||

def _save_dependency(self, dep, truncate=False, separator=None,

|

||||

truncate_to=None, strip_whitespace=True):

|

||||

if truncate:

|

||||

if separator is None:

|

||||

separator = u('.')

|

||||

separator = u(separator)

|

||||

dep = dep.split(separator)

|

||||

if truncate_to is None or truncate_to < 1:

|

||||

truncate_to = 1

|

||||

if truncate_to > len(dep):

|

||||

truncate_to = len(dep)

|

||||

dep = dep[0] if len(dep) == 1 else separator.join(dep[:truncate_to])

|

||||

if strip_whitespace:

|

||||

dep = dep.strip()

|

||||

if dep and (not separator or not dep.startswith(separator)):

|

||||

should_exclude = False

|

||||

for compiled in self.exclude:

|

||||

if compiled.search(dep):

|

||||

should_exclude = True

|

||||

break

|

||||

if not should_exclude:

|

||||

self.dependencies.append(dep)

|

||||

|

||||

|

||||

class DependencyParser(object):

|

||||

source_file = None

|

||||

lexer = None

|

||||

parser = None

|

||||

|

||||

def __init__(self, source_file, lexer):

|

||||

self.source_file = source_file

|

||||

self.lexer = lexer

|

||||

|

||||

if self.lexer:

|

||||

module_name = self.lexer.__module__.rsplit('.', 1)[-1]

|

||||

class_name = self.lexer.__class__.__name__.replace('Lexer', 'Parser', 1)

|

||||

else:

|

||||

module_name = 'unknown'

|

||||

class_name = 'UnknownParser'

|

||||

|

||||

try:

|

||||

module = import_module('.%s' % module_name, package=__package__)

|

||||

try:

|

||||

self.parser = getattr(module, class_name)

|

||||

except AttributeError:

|

||||

log.debug('Module {0} is missing class {1}'.format(module.__name__, class_name))

|

||||

except ImportError:

|

||||

log.debug(traceback.format_exc())

|

||||

|

||||

def parse(self):

|

||||

if self.parser:

|

||||

plugin = self.parser(self.source_file, lexer=self.lexer)

|

||||

dependencies = plugin.parse()

|

||||

return list(set(dependencies))

|

||||

return []

|

||||

@ -1,68 +0,0 @@

|

||||

# -*- coding: utf-8 -*-

|

||||

"""

|

||||

wakatime.languages.c_cpp

|

||||

~~~~~~~~~~~~~~~~~~~~~~~~

|

||||

|

||||

Parse dependencies from C++ code.

|

||||

|

||||

:copyright: (c) 2014 Alan Hamlett.

|

||||

:license: BSD, see LICENSE for more details.

|

||||

"""

|

||||

|

||||

from . import TokenParser

|

||||

|

||||

|

||||

class CppParser(TokenParser):

|

||||

exclude = [

|

||||

r'^stdio\.h$',

|

||||

r'^stdlib\.h$',

|

||||

r'^string\.h$',

|

||||

r'^time\.h$',

|

||||

]

|

||||

|

||||

def parse(self):

|

||||

for index, token, content in self.tokens:

|

||||

self._process_token(token, content)

|

||||

return self.dependencies

|

||||

|

||||

def _process_token(self, token, content):

|

||||

if self.partial(token) == 'Preproc':

|

||||

self._process_preproc(token, content)

|

||||

else:

|

||||

self._process_other(token, content)

|

||||

|

||||

def _process_preproc(self, token, content):

|

||||

if content.strip().startswith('include ') or content.strip().startswith("include\t"):

|

||||

content = content.replace('include', '', 1).strip().strip('"').strip('<').strip('>').strip()

|

||||

self.append(content)

|

||||

|

||||

def _process_other(self, token, content):

|

||||

pass

|

||||

|

||||

|

||||

class CParser(TokenParser):

|

||||

exclude = [

|

||||

r'^stdio\.h$',

|

||||

r'^stdlib\.h$',

|

||||

r'^string\.h$',

|

||||

r'^time\.h$',

|

||||

]

|

||||

|

||||

def parse(self):

|

||||

for index, token, content in self.tokens:

|

||||

self._process_token(token, content)

|

||||

return self.dependencies

|

||||

|

||||

def _process_token(self, token, content):

|

||||

if self.partial(token) == 'Preproc':

|

||||

self._process_preproc(token, content)

|

||||

else:

|

||||

self._process_other(token, content)

|

||||

|

||||

def _process_preproc(self, token, content):

|

||||

if content.strip().startswith('include ') or content.strip().startswith("include\t"):

|

||||

content = content.replace('include', '', 1).strip().strip('"').strip('<').strip('>').strip()

|

||||

self.append(content)

|

||||

|

||||

def _process_other(self, token, content):

|

||||

pass

|

||||

@ -1,64 +0,0 @@

|

||||

# -*- coding: utf-8 -*-

|

||||

"""

|

||||

wakatime.languages.data

|

||||

~~~~~~~~~~~~~~~~~~~~~~~

|

||||

|

||||

Parse dependencies from data files.

|

||||

|

||||

:copyright: (c) 2014 Alan Hamlett.

|

||||

:license: BSD, see LICENSE for more details.

|

||||

"""

|

||||

|

||||

import os

|

||||

|

||||

from . import TokenParser

|

||||

from ..compat import u

|

||||

|

||||

|

||||

FILES = {

|

||||

'bower.json': {'exact': True, 'dependency': 'bower'},

|

||||

'component.json': {'exact': True, 'dependency': 'bower'},

|

||||

'package.json': {'exact': True, 'dependency': 'npm'},

|

||||

}

|

||||

|

||||

|

||||

class JsonParser(TokenParser):

|

||||

state = None

|

||||

level = 0

|

||||

|

||||

def parse(self):

|

||||

self._process_file_name(os.path.basename(self.source_file))

|

||||

for index, token, content in self.tokens:

|

||||

self._process_token(token, content)

|

||||

return self.dependencies

|

||||

|

||||

def _process_file_name(self, file_name):

|

||||

for key, value in FILES.items():

|

||||

found = (key == file_name) if value.get('exact') else (key.lower() in file_name.lower())

|

||||

if found:

|

||||

self.append(value['dependency'])

|

||||

|

||||

def _process_token(self, token, content):

|

||||

if u(token) == 'Token.Name.Tag':

|

||||

self._process_tag(token, content)

|

||||

elif u(token) == 'Token.Literal.String.Single' or u(token) == 'Token.Literal.String.Double':

|

||||

self._process_literal_string(token, content)

|

||||

elif u(token) == 'Token.Punctuation':

|

||||

self._process_punctuation(token, content)

|

||||

|

||||

def _process_tag(self, token, content):

|

||||

if content.strip('"').strip("'") == 'dependencies' or content.strip('"').strip("'") == 'devDependencies':

|

||||

self.state = 'dependencies'

|

||||

elif self.state == 'dependencies' and self.level == 2:

|

||||

self.append(content.strip('"').strip("'"))

|

||||

|

||||

def _process_literal_string(self, token, content):

|

||||

pass

|

||||

|

||||

def _process_punctuation(self, token, content):

|

||||

if content == '{':

|

||||

self.level += 1

|

||||

elif content == '}':

|

||||

self.level -= 1

|

||||

if self.state is not None and self.level <= 1:

|

||||

self.state = None

|

||||

@ -1,64 +0,0 @@

|

||||

# -*- coding: utf-8 -*-

|

||||

"""

|

||||

wakatime.languages.dotnet

|

||||

~~~~~~~~~~~~~~~~~~~~~~~~~

|

||||

|

||||

Parse dependencies from .NET code.

|

||||

|

||||

:copyright: (c) 2014 Alan Hamlett.

|

||||

:license: BSD, see LICENSE for more details.

|

||||

"""

|

||||

|

||||

from . import TokenParser

|

||||

from ..compat import u

|

||||

|

||||

|

||||

class CSharpParser(TokenParser):

|

||||

exclude = [

|

||||

r'^system$',

|

||||

r'^microsoft$',

|

||||

]

|

||||

state = None

|

||||

buffer = u('')

|

||||

|

||||

def parse(self):

|

||||

for index, token, content in self.tokens:

|

||||

self._process_token(token, content)

|

||||

return self.dependencies

|

||||

|

||||

def _process_token(self, token, content):

|

||||

if self.partial(token) == 'Keyword':

|

||||

self._process_keyword(token, content)

|

||||

if self.partial(token) == 'Namespace' or self.partial(token) == 'Name':

|

||||

self._process_namespace(token, content)

|

||||

elif self.partial(token) == 'Punctuation':

|

||||

self._process_punctuation(token, content)

|

||||

else:

|

||||

self._process_other(token, content)

|

||||

|

||||

def _process_keyword(self, token, content):

|

||||

if content == 'using':

|

||||

self.state = 'import'

|

||||

self.buffer = u('')

|

||||

|

||||

def _process_namespace(self, token, content):

|

||||

if self.state == 'import':

|

||||

if u(content) != u('import') and u(content) != u('package') and u(content) != u('namespace') and u(content) != u('static'):

|

||||

if u(content) == u(';'): # pragma: nocover

|

||||

self._process_punctuation(token, content)

|

||||

else:

|

||||

self.buffer += u(content)

|

||||

|

||||

def _process_punctuation(self, token, content):

|

||||

if self.state == 'import':

|

||||

if u(content) == u(';'):

|

||||

self.append(self.buffer, truncate=True)

|

||||

self.buffer = u('')

|

||||

self.state = None

|

||||

elif u(content) == u('='):

|

||||

self.buffer = u('')

|

||||

else:

|

||||

self.buffer += u(content)

|

||||

|

||||

def _process_other(self, token, content):

|

||||

pass

|

||||

@ -1,77 +0,0 @@

|

||||

# -*- coding: utf-8 -*-

|

||||

"""

|

||||

wakatime.languages.go

|

||||

~~~~~~~~~~~~~~~~~~~~~

|

||||

|

||||

Parse dependencies from Go code.

|

||||

|

||||

:copyright: (c) 2016 Alan Hamlett.

|

||||

:license: BSD, see LICENSE for more details.

|

||||

"""

|

||||

|

||||

from . import TokenParser

|

||||

|

||||

|

||||

class GoParser(TokenParser):

|

||||

state = None

|

||||

parens = 0

|

||||

aliases = 0

|

||||

exclude = [

|

||||

r'^"fmt"$',

|

||||

]

|

||||

|

||||

def parse(self):

|

||||

for index, token, content in self.tokens:

|

||||

self._process_token(token, content)

|

||||

return self.dependencies

|

||||

|

||||

def _process_token(self, token, content):

|

||||

if self.partial(token) == 'Namespace':

|

||||

self._process_namespace(token, content)

|

||||

elif self.partial(token) == 'Punctuation':

|

||||

self._process_punctuation(token, content)

|

||||

elif self.partial(token) == 'String':

|

||||

self._process_string(token, content)

|

||||

elif self.partial(token) == 'Text':

|

||||

self._process_text(token, content)

|

||||

elif self.partial(token) == 'Other':

|

||||

self._process_other(token, content)

|

||||

else:

|

||||

self._process_misc(token, content)

|

||||

|

||||

def _process_namespace(self, token, content):

|

||||

self.state = content

|

||||

self.parens = 0

|

||||

self.aliases = 0

|

||||

|

||||

def _process_string(self, token, content):

|

||||

if self.state == 'import':

|

||||

self.append(content, truncate=False)

|

||||

|

||||

def _process_punctuation(self, token, content):

|

||||

if content == '(':

|

||||

self.parens += 1

|

||||

elif content == ')':

|

||||

self.parens -= 1

|

||||

elif content == '.':

|

||||

self.aliases += 1

|

||||

else:

|

||||

self.state = None

|

||||

|

||||

def _process_text(self, token, content):

|

||||

if self.state == 'import':

|

||||

if content == "\n" and self.parens <= 0:

|

||||

self.state = None

|

||||

self.parens = 0

|

||||

self.aliases = 0

|

||||

else:

|

||||

self.state = None

|

||||

|

||||

def _process_other(self, token, content):

|

||||

if self.state == 'import':

|

||||

self.aliases += 1

|

||||

else:

|

||||

self.state = None

|

||||

|

||||

def _process_misc(self, token, content):

|

||||

self.state = None

|

||||

@ -1,96 +0,0 @@

|

||||

# -*- coding: utf-8 -*-

|

||||

"""

|

||||

wakatime.languages.java

|

||||

~~~~~~~~~~~~~~~~~~~~~~~

|

||||

|

||||

Parse dependencies from Java code.

|

||||

|

||||

:copyright: (c) 2014 Alan Hamlett.

|

||||

:license: BSD, see LICENSE for more details.

|

||||

"""

|

||||

|

||||

from . import TokenParser

|

||||

from ..compat import u

|

||||

|

||||

|

||||

class JavaParser(TokenParser):

|

||||

exclude = [

|

||||

r'^java\.',

|

||||

r'^javax\.',

|

||||

r'^import$',

|

||||

r'^package$',

|

||||

r'^namespace$',

|

||||

r'^static$',

|

||||

]

|

||||

state = None

|

||||

buffer = u('')

|

||||

|

||||

def parse(self):

|

||||

for index, token, content in self.tokens:

|

||||

self._process_token(token, content)

|

||||

return self.dependencies

|

||||

|

||||

def _process_token(self, token, content):

|

||||

if self.partial(token) == 'Namespace':

|

||||

self._process_namespace(token, content)

|

||||

if self.partial(token) == 'Name':

|

||||

self._process_name(token, content)

|

||||

elif self.partial(token) == 'Attribute':

|

||||

self._process_attribute(token, content)

|

||||

elif self.partial(token) == 'Operator':

|

||||

self._process_operator(token, content)

|

||||

else:

|

||||

self._process_other(token, content)

|

||||

|

||||

def _process_namespace(self, token, content):

|

||||

if u(content) == u('import'):

|

||||

self.state = 'import'

|

||||

|

||||

elif self.state == 'import':

|

||||

keywords = [

|

||||

u('package'),

|

||||

u('namespace'),

|

||||

u('static'),

|

||||

]

|

||||

if u(content) in keywords:

|

||||

return

|

||||

self.buffer = u('{0}{1}').format(self.buffer, u(content))

|

||||

|

||||

elif self.state == 'import-finished':

|

||||

content = content.split(u('.'))

|

||||

|

||||

if len(content) == 1:

|

||||

self.append(content[0])

|

||||

|

||||

elif len(content) > 1:

|

||||

if len(content[0]) == 3:

|

||||

content = content[1:]

|

||||

if content[-1] == u('*'):

|

||||

content = content[:len(content) - 1]

|

||||

|

||||

if len(content) == 1:

|

||||

self.append(content[0])

|

||||

elif len(content) > 1:

|

||||

self.append(u('.').join(content[:2]))

|

||||

|

||||

self.state = None

|

||||

|

||||

def _process_name(self, token, content):

|

||||

if self.state == 'import':

|

||||

self.buffer = u('{0}{1}').format(self.buffer, u(content))

|

||||

|

||||

def _process_attribute(self, token, content):

|

||||

if self.state == 'import':

|

||||

self.buffer = u('{0}{1}').format(self.buffer, u(content))

|

||||

|

||||

def _process_operator(self, token, content):

|

||||

if u(content) == u(';'):

|

||||

self.state = 'import-finished'

|

||||

self._process_namespace(token, self.buffer)

|

||||

self.state = None

|

||||

self.buffer = u('')

|

||||

elif self.state == 'import':

|

||||

self.buffer = u('{0}{1}').format(self.buffer, u(content))

|

||||

|

||||

def _process_other(self, token, content):

|

||||

pass

|

||||

@ -1,85 +0,0 @@

|

||||

# -*- coding: utf-8 -*-

|

||||

"""

|

||||

wakatime.languages.php

|

||||

~~~~~~~~~~~~~~~~~~~~~~

|

||||

|

||||

Parse dependencies from PHP code.

|

||||

|

||||

:copyright: (c) 2014 Alan Hamlett.

|

||||

:license: BSD, see LICENSE for more details.

|

||||

"""

|

||||

|

||||

from . import TokenParser

|

||||

from ..compat import u

|

||||

|

||||

|

||||

class PhpParser(TokenParser):

|

||||

state = None

|

||||

parens = 0

|

||||

|

||||

def parse(self):

|

||||

for index, token, content in self.tokens:

|

||||

self._process_token(token, content)

|

||||

return self.dependencies

|

||||

|

||||

def _process_token(self, token, content):

|

||||

if self.partial(token) == 'Keyword':

|

||||

self._process_keyword(token, content)

|

||||

elif u(token) == 'Token.Literal.String.Single' or u(token) == 'Token.Literal.String.Double':

|

||||

self._process_literal_string(token, content)

|

||||

elif u(token) == 'Token.Name.Other':

|

||||

self._process_name(token, content)

|

||||

elif u(token) == 'Token.Name.Function':

|

||||

self._process_function(token, content)

|

||||

elif self.partial(token) == 'Punctuation':

|

||||

self._process_punctuation(token, content)

|

||||

elif self.partial(token) == 'Text':

|

||||

self._process_text(token, content)

|

||||

else:

|

||||