mirror of

https://github.com/wakatime/sublime-wakatime.git

synced 2023-08-10 21:13:02 +03:00

Compare commits

94 Commits

| Author | SHA1 | Date |
|---|---|---|
| | 5e17ad88f6 | |
| | 24d0f65116 | |
| | a326046733 | |
| | 9bab00fd8b | |
| | b4a13a48b9 | |
| | 21601f9688 | |
| | 4c3ec87341 | |
| | b149d7fc87 | |
| | 52e6107c6e | |
| | b340637331 | |
| | 044867449a | |
| | 9e3f438823 | |
| | 887d55c3f3 | |
| | 19d54f3310 | |
| | 514a8762eb | |
| | 957c74d226 | |
| | 7b0432d6ff | |
| | 09754849be | |
| | 25ad48a97a | |
| | 3b2520afa9 | |
| | 77c2041ad3 | |
| | 8af3b53937 | |
| | 5ef2e6954e | |
| | ca94272de5 | |
| | f19a448d95 | |
| | e178765412 | |
| | 6a7de84b9c | |
| | 48810f2977 | |
| | 260eedb31d | |
| | 02e2bfcad2 | |
| | f14ece63f3 | |
| | cb7f786ec8 | |
| | ab8711d0b1 | |
| | 2354be358c | |
| | 443215bd90 | |
| | c64f125dc4 | |
| | 050b14fb53 | |
| | c7efc33463 | |
| | d0ddbed006 | |
| | 3ce8f388ab | |
| | 90731146f9 | |
| | e1ab92be6d | |
| | 8b59e46c64 | |
| | 006341eb72 | |
| | b54e0e13f6 | |
| | 835c7db864 | |
| | 53e8bb04e9 | |
| | 4aa06e3829 | |
| | 297f65733f | |
| | 5ba5e6d21b | |
| | 32eadda81f | |
| | c537044801 | |
| | a97792c23c | |
| | 4223f3575f | |
| | 284cdf3ce4 | |
| | 27afc41bf4 | |
| | 1fdda0d64a | |
| | c90a4863e9 | |
| | 94343e5b07 | |
| | 03acea6e25 | |
| | 77594700bd | |
| | 6681409e98 | |
| | 8f7837269a | |
| | a523b3aa4d | |
| | 6985ce32bb | |
| | 4be40c7720 | |
| | eeb7fd8219 | |
| | 11fbd2d2a6 | |
| | 3cecd0de5d | |
| | c50100e675 | |
| | c1da94bc18 | |
| | 7f9d6ede9d | |
| | 192a5c7aa7 | |
| | 16bbe21be9 | |
| | 5ebaf12a99 | |
| | 1834e8978a | |
| | 22c8ed74bd | |
| | 12bbb4e561 | |
| | c71cb21cc1 | |
| | eb11b991f0 | |
| | 7ea51d09ba | |
| | b07b59e0c8 | |
| | 9d715e95b7 | |
| | 3edaed53aa | |
| | 865b0bcee9 | |
| | d440fe912c | |
| | 627455167f | |
| | aba89d3948 | |
| | 18d87118e1 | |
| | fd91b9e032 | |
| | 16b15773bf | |
| | f0b518862a | |
| | 7ee7de70d5 | |
| | fb479f8e84 | |

273

HISTORY.rst


@ -3,7 +3,278 @@ History

|

||||

-------

|

||||

|

||||

|

||||

4.0.6 (2015-06-21)

|

||||

7.0.10 (2016-09-22)

|

||||

++++++++++++++++++

|

||||

|

||||

- Handle UnicodeDecodeError when looking for python. Fixes #68.

|

||||

- Upgrade wakatime-cli to v6.0.9.

|

||||

|

||||

|

||||

7.0.9 (2016-09-02)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v6.0.8.

|

||||

|

||||

|

||||

7.0.8 (2016-07-21)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to master version to fix debug logging encoding bug.

|

||||

|

||||

|

||||

7.0.7 (2016-07-06)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v6.0.7.

|

||||

- Handle unknown exceptions from requests library by deleting cached session

|

||||

object because it could be from a previous conflicting version.

|

||||

- New hostname setting in config file to set machine hostname. Hostname

|

||||

argument takes priority over hostname from config file.

|

||||

- Prevent logging unrelated exception when logging tracebacks.

|

||||

- Use correct namespace for pygments.lexers.ClassNotFound exception so it is

|

||||

caught when dependency detection not available for a language.

|

||||

|

||||

|

||||

7.0.6 (2016-06-13)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v6.0.5.

|

||||

- Upgrade pygments to v2.1.3 for better language coverage.

|

||||

|

||||

|

||||

7.0.5 (2016-06-08)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to master version to fix bug in urllib3 package causing

|

||||

unhandled retry exceptions.

|

||||

- Prevent tracking git branch with detached head.

|

||||

|

||||

|

||||

7.0.4 (2016-05-21)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v6.0.3.

|

||||

- Upgrade requests dependency to v2.10.0.

|

||||

- Support for SOCKS proxies.

|

||||

|

||||

|

||||

7.0.3 (2016-05-16)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v6.0.2.

|

||||

- Prevent popup on Mac when xcode-tools is not installed.

|

||||

|

||||

|

||||

7.0.2 (2016-04-29)

|

||||

++++++++++++++++++

|

||||

|

||||

- Prevent implicit unicode decoding from string format when logging output

|

||||

from Python version check.

|

||||

|

||||

|

||||

7.0.1 (2016-04-28)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v6.0.1.

|

||||

- Fix bug which prevented plugin from being sent with extra heartbeats.

|

||||

|

||||

|

||||

7.0.0 (2016-04-28)

|

||||

++++++++++++++++++

|

||||

|

||||

- Queue heartbeats and send to wakatime-cli after 4 seconds.

|

||||

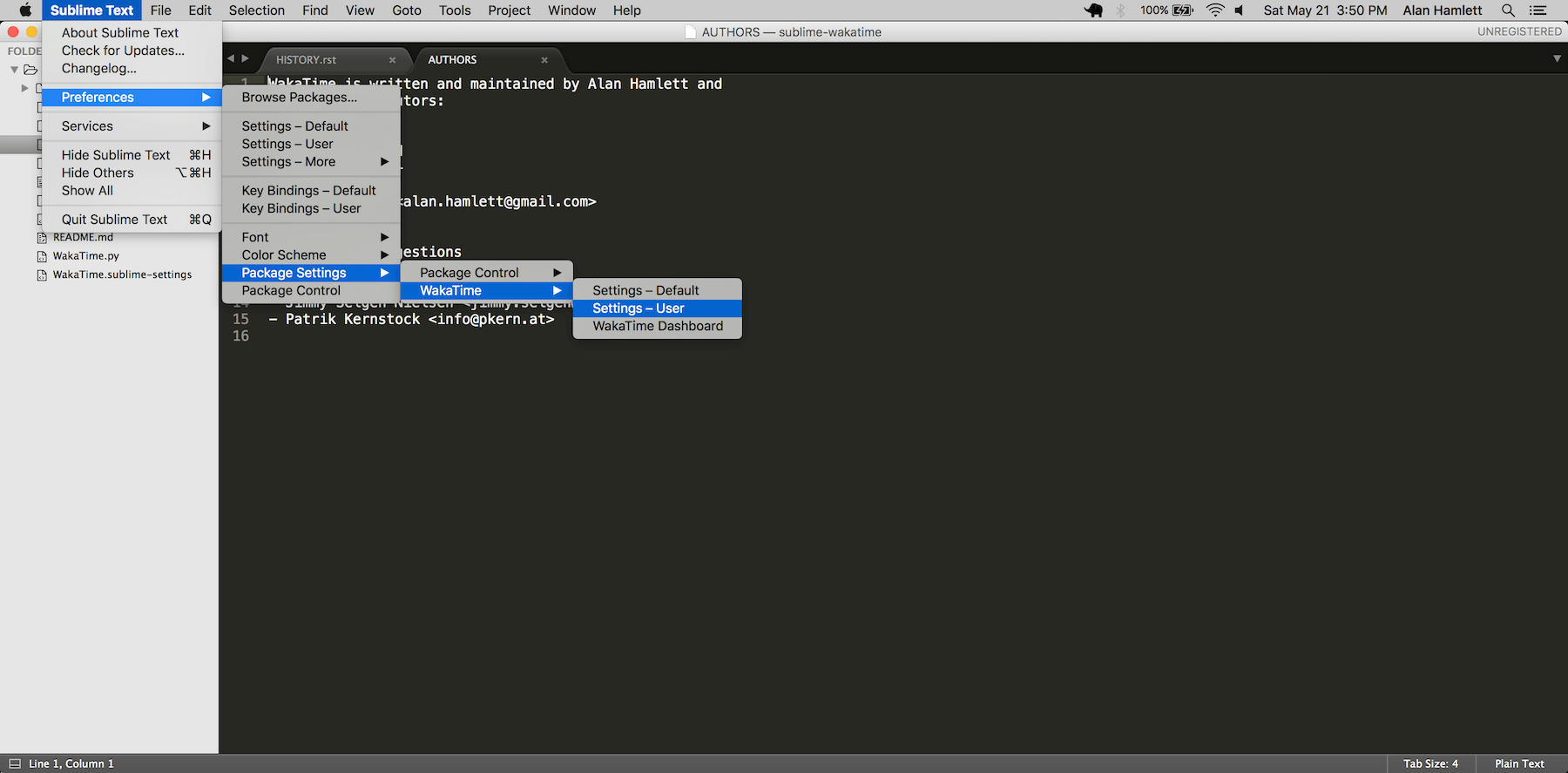

- Nest settings menu under Package Settings.

|

||||

- Upgrade wakatime-cli to v6.0.0.

|

||||

- Increase default network timeout to 60 seconds when sending heartbeats to

|

||||

the api.

|

||||

- New --extra-heartbeats command line argument for sending a JSON array of

|

||||

extra queued heartbeats to STDIN.

|

||||

- Change --entitytype command line argument to --entity-type.

|

||||

- No longer allowing --entity-type of url.

|

||||

- Support passing an alternate language to cli to be used when a language can

|

||||

not be guessed from the code file.

|

||||

|

||||

|

||||

6.0.8 (2016-04-18)

|

||||

++++++++++++++++++

|

||||

|

||||

- Upgrade wakatime-cli to v5.0.0.

|

||||

- Support regex patterns in projectmap config section for renaming projects.

|

||||

- Upgrade pytz to v2016.3.

|

||||

- Upgrade tzlocal to v1.2.2.

|

||||

|

||||

|

||||

6.0.7 (2016-03-11)

|

||||

++++++++++++++++++

|

||||

|

||||

- Fix bug causing RuntimeError when finding Python location

|

||||

|

||||

|

||||

6.0.6 (2016-03-06)

|

||||

++++++++++++++++++

|

||||

|

||||

- upgrade wakatime-cli to v4.1.13

|

||||

- encode TimeZone as utf-8 before adding to headers

|

||||

- encode X-Machine-Name as utf-8 before adding to headers

|

||||

|

||||

|

||||

6.0.5 (2016-03-06)

|

||||

++++++++++++++++++

|

||||

|

||||

- upgrade wakatime-cli to v4.1.11

|

||||

- encode machine hostname as Unicode when adding to X-Machine-Name header

|

||||

|

||||

|

||||

6.0.4 (2016-01-15)

|

||||

++++++++++++++++++

|

||||

|

||||

- fix UnicodeDecodeError on ST2 with non-English locale

|

||||

|

||||

|

||||

6.0.3 (2016-01-11)

|

||||

++++++++++++++++++

|

||||

|

||||

- upgrade wakatime-cli core to v4.1.10

|

||||

- accept 201 or 202 response codes as success from api

|

||||

- upgrade requests package to v2.9.1

|

||||

|

||||

|

||||

6.0.2 (2016-01-06)

|

||||

++++++++++++++++++

|

||||

|

||||

- upgrade wakatime-cli core to v4.1.9

|

||||

- improve C# dependency detection

|

||||

- correctly log exception tracebacks

|

||||

- log all unknown exceptions to wakatime.log file

|

||||

- disable urllib3 SSL warning from every request

|

||||

- detect dependencies from golang files

|

||||

- use api.wakatime.com for sending heartbeats

|

||||

|

||||

|

||||

6.0.1 (2016-01-01)

|

||||

++++++++++++++++++

|

||||

|

||||

- use embedded python if system python is broken, or doesn't output a version number

|

||||

- log output from wakatime-cli in ST console when in debug mode

|

||||

|

||||

|

||||

6.0.0 (2015-12-01)

|

||||

++++++++++++++++++

|

||||

|

||||

- use embeddable Python instead of installing on Windows

|

||||

|

||||

|

||||

5.0.1 (2015-10-06)

|

||||

++++++++++++++++++

|

||||

|

||||

- look for python in system PATH again

|

||||

|

||||

|

||||

5.0.0 (2015-10-02)

|

||||

++++++++++++++++++

|

||||

|

||||

- improve logging with levels and log function

|

||||

- switch registry warnings to debug log level

|

||||

|

||||

|

||||

4.0.20 (2015-10-01)

|

||||

++++++++++++++++++

|

||||

|

||||

- correctly find python binary in non-Windows environments

|

||||

|

||||

|

||||

4.0.19 (2015-10-01)

|

||||

++++++++++++++++++

|

||||

|

||||

- handle case where ST builtin python does not have _winreg or winreg module

|

||||

|

||||

|

||||

4.0.18 (2015-10-01)

|

||||

++++++++++++++++++

|

||||

|

||||

- find python location from windows registry

|

||||

|

||||

|

||||

4.0.17 (2015-10-01)

|

||||

++++++++++++++++++

|

||||

|

||||

- download python in non blocking background thread for Windows machines

|

||||

|

||||

|

||||

4.0.16 (2015-09-29)

|

||||

++++++++++++++++++

|

||||

|

||||

- upgrade wakatime cli to v4.1.8

|

||||

- fix bug in guess_language function

|

||||

- improve dependency detection

|

||||

- default request timeout of 30 seconds

|

||||

- new --timeout command line argument to change request timeout in seconds

|

||||

- allow passing command line arguments using sys.argv

|

||||

- fix entry point for pypi distribution

|

||||

- new --entity and --entitytype command line arguments

|

||||

|

||||

|

||||

4.0.15 (2015-08-28)

|

||||

++++++++++++++++++

|

||||

|

||||

- upgrade wakatime cli to v4.1.3

|

||||

- fix local session caching

|

||||

|

||||

|

||||

4.0.14 (2015-08-25)

|

||||

++++++++++++++++++

|

||||

|

||||

- upgrade wakatime cli to v4.1.2

|

||||

- fix bug in offline caching which prevented heartbeats from being cleaned up

|

||||

|

||||

|

||||

4.0.13 (2015-08-25)

|

||||

++++++++++++++++++

|

||||

|

||||

- upgrade wakatime cli to v4.1.1

|

||||

- send hostname in X-Machine-Name header

|

||||

- catch exceptions from pygments.modeline.get_filetype_from_buffer

|

||||

- upgrade requests package to v2.7.0

|

||||

- handle non-ASCII characters in import path on Windows, won't fix for Python2

|

||||

- upgrade argparse to v1.3.0

|

||||

- move language translations to api server

|

||||

- move extension rules to api server

|

||||

- detect correct header file language based on presence of .cpp or .c files named the same as the .h file

|

||||

|

||||

|

||||

4.0.12 (2015-07-31)

|

||||

++++++++++++++++++

|

||||

|

||||

- correctly use urllib in Python3

|

||||

|

||||

|

||||

4.0.11 (2015-07-31)

|

||||

++++++++++++++++++

|

||||

|

||||

- install python if missing on Windows OS

|

||||

|

||||

|

||||

4.0.10 (2015-07-31)

|

||||

++++++++++++++++++

|

||||

|

||||

- downgrade requests library to v2.6.0

|

||||

|

||||

|

||||

4.0.9 (2015-07-29)

|

||||

++++++++++++++++++

|

||||

|

||||

- catch exceptions from pygments.modeline.get_filetype_from_buffer

|

||||

|

||||

|

||||

4.0.8 (2015-06-23)

|

||||

++++++++++++++++++

|

||||

|

||||

- fix offline logging

|

||||

- limit language detection to known file extensions, unless file contents has a vim modeline

|

||||

- upgrade wakatime cli to v4.0.16

|

||||

|

||||

|

||||

4.0.7 (2015-06-21)

|

||||

++++++++++++++++++

|

||||

|

||||

- allow customizing status bar message in sublime-settings file

|

||||

|

||||

@ -6,24 +6,37 @@

|

||||

"children":

|

||||

[

|

||||

{

|

||||

"caption": "WakaTime",

|

||||

"mnemonic": "W",

|

||||

"id": "wakatime-settings",

|

||||

"caption": "Package Settings",

|

||||

"mnemonic": "P",

|

||||

"id": "package-settings",

|

||||

"children":

|

||||

[

|

||||

{

|

||||

"command": "open_file", "args":

|

||||

{

|

||||

"file": "${packages}/WakaTime/WakaTime.sublime-settings"

|

||||

},

|

||||

"caption": "Settings – Default"

|

||||

},

|

||||

{

|

||||

"command": "open_file", "args":

|

||||

{

|

||||

"file": "${packages}/User/WakaTime.sublime-settings"

|

||||

},

|

||||

"caption": "Settings – User"

|

||||

"caption": "WakaTime",

|

||||

"mnemonic": "W",

|

||||

"id": "wakatime-settings",

|

||||

"children":

|

||||

[

|

||||

{

|

||||

"command": "open_file", "args":

|

||||

{

|

||||

"file": "${packages}/WakaTime/WakaTime.sublime-settings"

|

||||

},

|

||||

"caption": "Settings – Default"

|

||||

},

|

||||

{

|

||||

"command": "open_file", "args":

|

||||

{

|

||||

"file": "${packages}/User/WakaTime.sublime-settings"

|

||||

},

|

||||

"caption": "Settings – User"

|

||||

},

|

||||

{

|

||||

"command": "wakatime_dashboard",

|

||||

"args": {},

|

||||

"caption": "WakaTime Dashboard"

|

||||

}

|

||||

]

|

||||

}

|

||||

]

|

||||

}

|

||||

|

||||

33

README.md


@ -1,13 +1,12 @@

|

||||

sublime-wakatime

|

||||

================

|

||||

|

||||

Fully automatic time tracking for Sublime Text 2 & 3.

|

||||

Metrics, insights, and time tracking automatically generated from your programming activity.

|

||||

|

||||

|

||||

Installation

|

||||

------------

|

||||

|

||||

Heads Up! For Sublime Text 2 on Windows & Linux, WakaTime depends on [Python](http://www.python.org/getit/) being installed to work correctly.

|

||||

|

||||

1. Install [Package Control](https://packagecontrol.io/installation).

|

||||

|

||||

2. Using [Package Control](https://packagecontrol.io/docs/usage):

|

||||

@ -24,8 +23,34 @@ Heads Up! For Sublime Text 2 on Windows & Linux, WakaTime depends on [Python](ht

|

||||

|

||||

5. Visit https://wakatime.com/dashboard to see your logged time.

|

||||

|

||||

|

||||

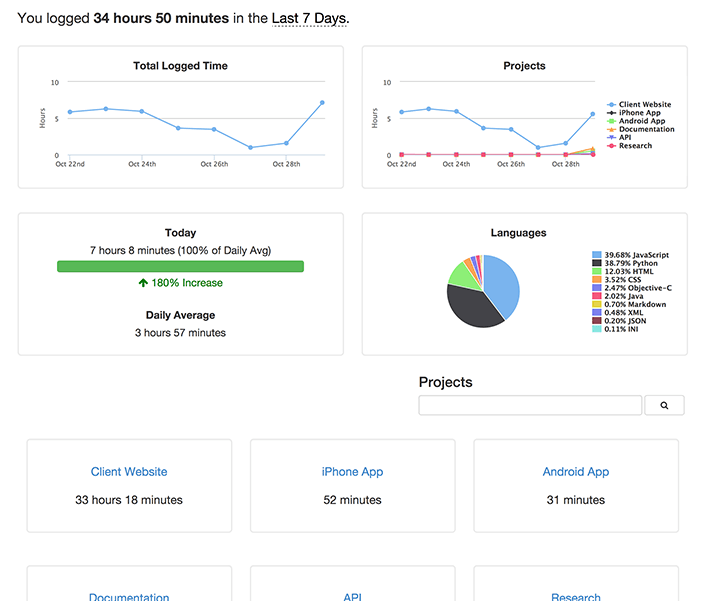

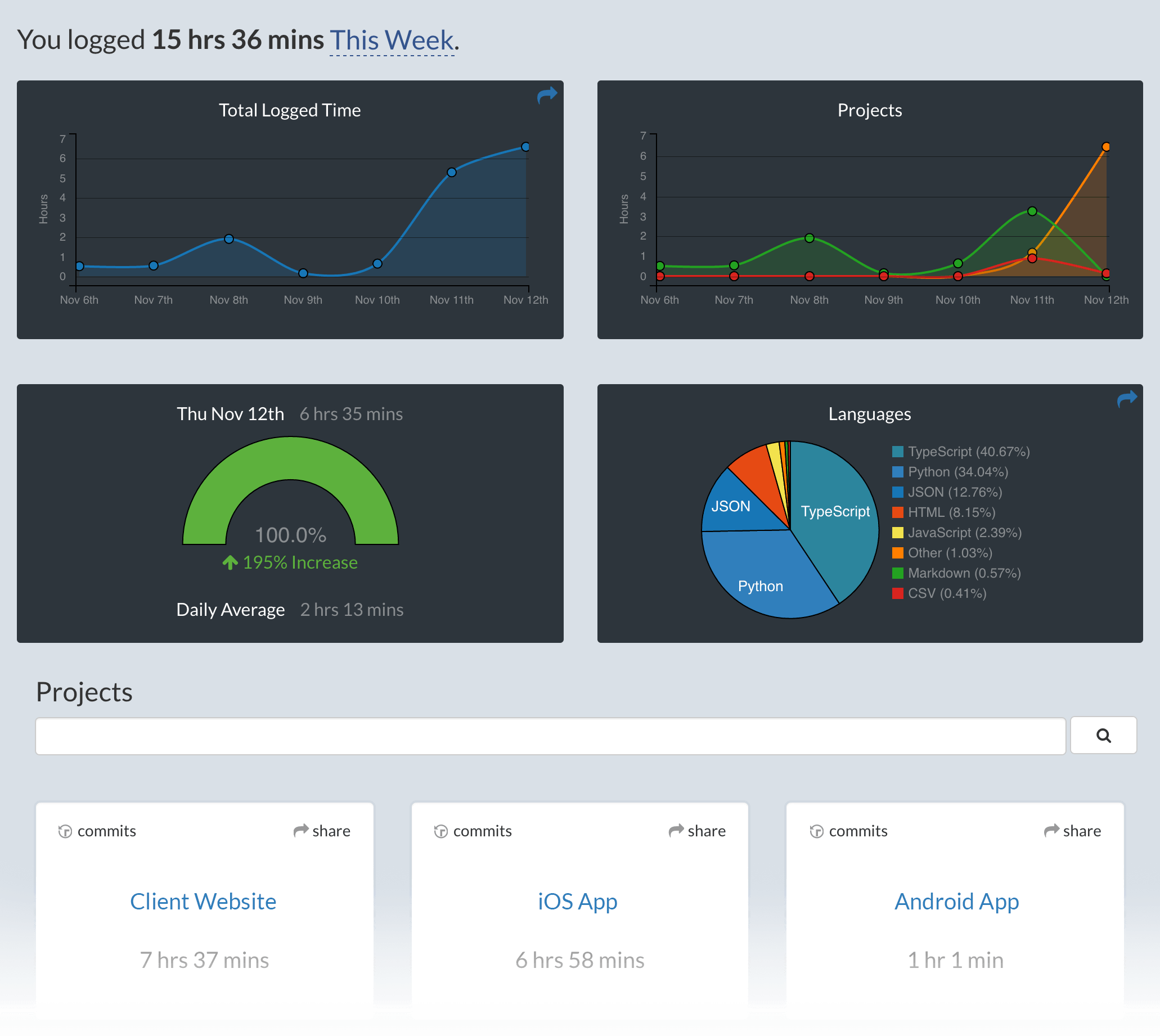

Screen Shots

|

||||

------------

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

Unresponsive Plugin Warning

|

||||

---------------------------

|

||||

|

||||

In Sublime Text 2, if you get a warning message:

|

||||

|

||||

A plugin (WakaTime) may be making Sublime Text unresponsive by taking too long (0.017332s) in its on_modified callback.

|

||||

|

||||

To fix this, go to `Preferences > Settings - User` then add the following setting:

|

||||

|

||||

`"detect_slow_plugins": false`

|

||||

|

||||

|

||||

Troubleshooting

|

||||

---------------

|

||||

|

||||

First, turn on debug mode in your `WakaTime.sublime-settings` file.

|

||||

|

||||

|

||||

|

||||

Add the line: `"debug": true`

|

||||

|

||||

Then, open your Sublime Console with `View -> Show Console` to see the plugin executing the wakatime cli process when sending a heartbeat. Also, tail your `$HOME/.wakatime.log` file to debug wakatime cli problems.

|

||||

|

||||

For more general troubleshooting information, see [wakatime/wakatime#troubleshooting](https://github.com/wakatime/wakatime#troubleshooting).

|

||||

|

||||

540

WakaTime.py


@ -7,21 +7,78 @@ Website: https://wakatime.com/

|

||||

==========================================================="""

|

||||

|

||||

|

||||

__version__ = '4.0.7'

|

||||

__version__ = '7.0.10'

|

||||

|

||||

|

||||

import sublime

|

||||

import sublime_plugin

|

||||

|

||||

import glob

|

||||

import json

|

||||

import os

|

||||

import platform

|

||||

import re

|

||||

import sys

|

||||

import time

|

||||

import threading

|

||||

import urllib

|

||||

import webbrowser

|

||||

from datetime import datetime

|

||||

from subprocess import Popen

|

||||

from zipfile import ZipFile

|

||||

from subprocess import Popen, STDOUT, PIPE

|

||||

try:

|

||||

import _winreg as winreg # py2

|

||||

except ImportError:

|

||||

try:

|

||||

import winreg # py3

|

||||

except ImportError:

|

||||

winreg = None

|

||||

try:

|

||||

import Queue as queue # py2

|

||||

except ImportError:

|

||||

import queue # py3

|

||||

|

||||

|

||||

is_py2 = (sys.version_info[0] == 2)

|

||||

is_py3 = (sys.version_info[0] == 3)

|

||||

|

||||

if is_py2:

|

||||

def u(text):

|

||||

if text is None:

|

||||

return None

|

||||

try:

|

||||

return text.decode('utf-8')

|

||||

except:

|

||||

try:

|

||||

return text.decode(sys.getdefaultencoding())

|

||||

except:

|

||||

try:

|

||||

return unicode(text)

|

||||

except:

|

||||

return text.decode('utf-8', 'replace')

|

||||

|

||||

elif is_py3:

|

||||

def u(text):

|

||||

if text is None:

|

||||

return None

|

||||

if isinstance(text, bytes):

|

||||

try:

|

||||

return text.decode('utf-8')

|

||||

except:

|

||||

try:

|

||||

return text.decode(sys.getdefaultencoding())

|

||||

except:

|

||||

pass

|

||||

try:

|

||||

return str(text)

|

||||

except:

|

||||

return text.decode('utf-8', 'replace')

|

||||

|

||||

else:

|

||||

raise Exception('Unsupported Python version: {0}.{1}.{2}'.format(

|

||||

sys.version_info[0],

|

||||

sys.version_info[1],

|

||||

sys.version_info[2],

|

||||

))
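The Python 3 branch of `u()` above can be read as "decode bytes with a few fallbacks, otherwise stringify". The following is a minimal sketch of that idea for Python 3 only; the name `to_text` is hypothetical and this is not the plugin's own helper.

```python
import sys


def to_text(value):
    """Best-effort conversion of bytes or other objects to str (Python 3)."""
    if value is None:
        return None
    if isinstance(value, bytes):
        # Try strict decoding first, then fall back to lossy replacement.
        for encoding in ('utf-8', sys.getdefaultencoding()):
            try:
                return value.decode(encoding)
            except UnicodeDecodeError:
                continue
        return value.decode('utf-8', 'replace')
    return str(value)
```

Catching only `UnicodeDecodeError` instead of using a bare `except` is a design choice of this sketch, so that unrelated errors are not silently swallowed.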

|

||||

|

||||

|

||||

# globals

|

||||

@ -36,8 +93,15 @@ LAST_HEARTBEAT = {

|

||||

'file': None,

|

||||

'is_write': False,

|

||||

}

|

||||

LOCK = threading.RLock()

|

||||

PYTHON_LOCATION = None

|

||||

HEARTBEATS = queue.Queue()

|

||||

|

||||

|

||||

# Log Levels

|

||||

DEBUG = 'DEBUG'

|

||||

INFO = 'INFO'

|

||||

WARNING = 'WARNING'

|

||||

ERROR = 'ERROR'

|

||||

|

||||

|

||||

# add wakatime package to path

|

||||

@ -48,7 +112,59 @@ except ImportError:

|

||||

pass

|

||||

|

||||

|

||||

def createConfigFile():

|

||||

def set_timeout(callback, seconds):

|

||||

"""Runs the callback after the given seconds delay.

|

||||

|

||||

If this is Sublime Text 3, runs the callback on an alternate thread. If this

|

||||

is Sublime Text 2, runs the callback in the main thread.

|

||||

"""

|

||||

|

||||

milliseconds = int(seconds * 1000)

|

||||

try:

|

||||

sublime.set_timeout_async(callback, milliseconds)

|

||||

except AttributeError:

|

||||

sublime.set_timeout(callback, milliseconds)

|

||||

|

||||

|

||||

def log(lvl, message, *args, **kwargs):

|

||||

try:

|

||||

if lvl == DEBUG and not SETTINGS.get('debug'):

|

||||

return

|

||||

msg = message

|

||||

if len(args) > 0:

|

||||

msg = message.format(*args)

|

||||

elif len(kwargs) > 0:

|

||||

msg = message.format(**kwargs)

|

||||

print('[WakaTime] [{lvl}] {msg}'.format(lvl=lvl, msg=msg))

|

||||

except RuntimeError:

|

||||

set_timeout(lambda: log(lvl, message, *args, **kwargs), 0)

|

||||

|

||||

|

||||

def resources_folder():

|

||||

if platform.system() == 'Windows':

|

||||

return os.path.join(os.getenv('APPDATA'), 'WakaTime')

|

||||

else:

|

||||

return os.path.join(os.path.expanduser('~'), '.wakatime')

|

||||

|

||||

|

||||

def update_status_bar(status):

|

||||

"""Updates the status bar."""

|

||||

|

||||

try:

|

||||

if SETTINGS.get('status_bar_message'):

|

||||

msg = datetime.now().strftime(SETTINGS.get('status_bar_message_fmt'))

|

||||

if '{status}' in msg:

|

||||

msg = msg.format(status=status)

|

||||

|

||||

active_window = sublime.active_window()

|

||||

if active_window:

|

||||

for view in active_window.views():

|

||||

view.set_status('wakatime', msg)

|

||||

except RuntimeError:

|

||||

set_timeout(lambda: update_status_bar(status), 0)

|

||||

|

||||

|

||||

def create_config_file():

|

||||

"""Creates the .wakatime.cfg INI file in $HOME directory, if it does

|

||||

not already exist.

|

||||

"""

|

||||

@ -69,7 +185,7 @@ def createConfigFile():

|

||||

def prompt_api_key():

|

||||

global SETTINGS

|

||||

|

||||

createConfigFile()

|

||||

create_config_file()

|

||||

|

||||

default_key = ''

|

||||

try:

|

||||

@ -92,35 +208,129 @@ def prompt_api_key():

|

||||

window.show_input_panel('[WakaTime] Enter your wakatime.com api key:', default_key, got_key, None, None)

|

||||

return True

|

||||

else:

|

||||

print('[WakaTime] Error: Could not prompt for api key because no window found.')

|

||||

log(ERROR, 'Could not prompt for api key because no window found.')

|

||||

return False

|

||||

|

||||

|

||||

def python_binary():

|

||||

global PYTHON_LOCATION

|

||||

if PYTHON_LOCATION is not None:

|

||||

return PYTHON_LOCATION

|

||||

|

||||

# look for python in PATH and common install locations

|

||||

paths = [

|

||||

"pythonw",

|

||||

"python",

|

||||

"/usr/local/bin/python",

|

||||

"/usr/bin/python",

|

||||

os.path.join(resources_folder(), 'python'),

|

||||

None,

|

||||

'/',

|

||||

'/usr/local/bin/',

|

||||

'/usr/bin/',

|

||||

]

|

||||

for path in paths:

|

||||

try:

|

||||

Popen([path, '--version'])

|

||||

PYTHON_LOCATION = path

|

||||

path = find_python_in_folder(path)

|

||||

if path is not None:

|

||||

set_python_binary_location(path)

|

||||

return path

|

||||

except:

|

||||

pass

|

||||

for path in glob.iglob('/python*'):

|

||||

path = os.path.realpath(os.path.join(path, 'pythonw'))

|

||||

try:

|

||||

Popen([path, '--version'])

|

||||

PYTHON_LOCATION = path

|

||||

|

||||

# look for python in windows registry

|

||||

path = find_python_from_registry(r'SOFTWARE\Python\PythonCore')

|

||||

if path is not None:

|

||||

set_python_binary_location(path)

|

||||

return path

|

||||

path = find_python_from_registry(r'SOFTWARE\Wow6432Node\Python\PythonCore')

|

||||

if path is not None:

|

||||

set_python_binary_location(path)

|

||||

return path

|

||||

|

||||

return None

|

||||

|

||||

|

||||

def set_python_binary_location(path):

|

||||

global PYTHON_LOCATION

|

||||

PYTHON_LOCATION = path

|

||||

log(DEBUG, 'Found Python at: {0}'.format(path))

|

||||

|

||||

|

||||

def find_python_from_registry(location, reg=None):

|

||||

if platform.system() != 'Windows' or winreg is None:

|

||||

return None

|

||||

|

||||

if reg is None:

|

||||

path = find_python_from_registry(location, reg=winreg.HKEY_CURRENT_USER)

|

||||

if path is None:

|

||||

path = find_python_from_registry(location, reg=winreg.HKEY_LOCAL_MACHINE)

|

||||

return path

|

||||

|

||||

val = None

|

||||

sub_key = 'InstallPath'

|

||||

compiled = re.compile(r'^\d+\.\d+$')

|

||||

|

||||

try:

|

||||

with winreg.OpenKey(reg, location) as handle:

|

||||

versions = []

|

||||

try:

|

||||

for index in range(1024):

|

||||

version = winreg.EnumKey(handle, index)

|

||||

try:

|

||||

if compiled.search(version):

|

||||

versions.append(version)

|

||||

except re.error:

|

||||

pass

|

||||

except EnvironmentError:

|

||||

pass

|

||||

versions.sort(reverse=True)

|

||||

for version in versions:

|

||||

try:

|

||||

path = winreg.QueryValue(handle, version + '\\' + sub_key)

|

||||

if path is not None:

|

||||

path = find_python_in_folder(path)

|

||||

if path is not None:

|

||||

log(DEBUG, 'Found python from {reg}\\{key}\\{version}\\{sub_key}.'.format(

|

||||

reg=reg,

|

||||

key=location,

|

||||

version=version,

|

||||

sub_key=sub_key,

|

||||

))

|

||||

return path

|

||||

except WindowsError:

|

||||

log(DEBUG, 'Could not read registry value "{reg}\\{key}\\{version}\\{sub_key}".'.format(

|

||||

reg=reg,

|

||||

key=location,

|

||||

version=version,

|

||||

sub_key=sub_key,

|

||||

))

|

||||

except WindowsError:

|

||||

log(DEBUG, 'Could not read registry value "{reg}\\{key}".'.format(

|

||||

reg=reg,

|

||||

key=location,

|

||||

))

|

||||

|

||||

return val
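One detail worth noting in the registry lookup above: `versions.sort(reverse=True)` compares version strings lexicographically, so `'3.9'` sorts ahead of `'3.10'`. If numeric ordering were wanted, a key function along these lines could be used. This is a sketch only; `version_key` is a hypothetical helper and is not part of the diff.

```python
def version_key(version):
    """Turn a 'major.minor' string into a tuple of ints for numeric sorting."""
    return tuple(int(part) for part in version.split('.'))


versions = ['2.7', '3.10', '3.9']

# Plain string sort, as in the diff: '3.9' comes before '3.10'.
by_string = sorted(versions, reverse=True)

# Numeric sort: '3.10' is treated as newer than '3.9'.
by_number = sorted(versions, key=version_key, reverse=True)
```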

|

||||

|

||||

|

||||

def find_python_in_folder(folder, headless=True):

|

||||

pattern = re.compile(r'\d+\.\d+')

|

||||

|

||||

path = 'python'

|

||||

if folder is not None:

|

||||

path = os.path.realpath(os.path.join(folder, 'python'))

|

||||

if headless:

|

||||

path = u(path) + u('w')

|

||||

log(DEBUG, u('Looking for Python at: {0}').format(u(path)))

|

||||

try:

|

||||

process = Popen([path, '--version'], stdout=PIPE, stderr=STDOUT)

|

||||

output, err = process.communicate()

|

||||

output = u(output).strip()

|

||||

retcode = process.poll()

|

||||

log(DEBUG, u('Python Version Output: {0}').format(output))

|

||||

if not retcode and pattern.search(output):

|

||||

return path

|

||||

except:

|

||||

pass

|

||||

except:

|

||||

log(DEBUG, u(sys.exc_info()[1]))

|

||||

|

||||

if headless:

|

||||

path = find_python_in_folder(folder, headless=False)

|

||||

if path is not None:

|

||||

return path

|

||||

|

||||

return None
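The check performed by `find_python_in_folder` boils down to running a candidate binary with `--version` and accepting it only if it exits cleanly and prints something that looks like a version number. A stripped-down sketch of that probe, with the hypothetical name `probe_python` and without the headless `pythonw` retry, might look like this:

```python
import re
import sys
from subprocess import PIPE, STDOUT, Popen

VERSION_PATTERN = re.compile(r'\d+\.\d+')


def probe_python(path):
    """Return path if it runs and reports a version number, else None."""
    try:
        process = Popen([path, '--version'], stdout=PIPE, stderr=STDOUT)
        output, _ = process.communicate()
    except OSError:
        # Binary missing or not executable.
        return None
    text = output.decode('utf-8', 'replace').strip()
    if process.returncode == 0 and VERSION_PATTERN.search(text):
        return path
    return None
```

For example, `probe_python(sys.executable)` should return the running interpreter's path, while a path that does not exist yields `None`.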

|

||||

|

||||

|

||||

@ -132,14 +342,14 @@ def obfuscate_apikey(command_list):

|

||||

apikey_index = num + 1

|

||||

break

|

||||

if apikey_index is not None and apikey_index < len(cmd):

|

||||

cmd[apikey_index] = '********-****-****-****-********' + cmd[apikey_index][-4:]

|

||||

cmd[apikey_index] = 'XXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXX' + cmd[apikey_index][-4:]

|

||||

return cmd
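To illustrate the masking change in this hunk, here is a small self-contained sketch that hides everything but the last four characters of the value following `--key`. The function name `mask_api_key` is an assumption for illustration; only the `--key` flag and the `XXXXXXXX-...` mask come from the diff.

```python
MASK = 'XXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXX'


def mask_api_key(command):
    """Return a copy of command with the value after '--key' masked."""
    masked = list(command)  # copy so the real command is left untouched
    if '--key' in masked:
        index = masked.index('--key') + 1
        if index < len(masked):
            masked[index] = MASK + masked[index][-4:]
    return masked
```

Working on a copy matters here because the unmasked list is still needed to launch the process; only the logged version should be obfuscated.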

|

||||

|

||||

|

||||

def enough_time_passed(now, last_heartbeat, is_write):

|

||||

if now - last_heartbeat['time'] > HEARTBEAT_FREQUENCY * 60:

|

||||

def enough_time_passed(now, is_write):

|

||||

if now - LAST_HEARTBEAT['time'] > HEARTBEAT_FREQUENCY * 60:

|

||||

return True

|

||||

if is_write and now - last_heartbeat['time'] > 2:

|

||||

if is_write and now - LAST_HEARTBEAT['time'] > 2:

|

||||

return True

|

||||

return False
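The throttling rule in `enough_time_passed` can be tried out in isolation. The sketch below passes the last heartbeat time explicitly instead of reading the `LAST_HEARTBEAT` global, and it assumes a `frequency_minutes` default of 2; the actual value of `HEARTBEAT_FREQUENCY` is not shown in this diff.

```python
def should_send(now, last_time, is_write, frequency_minutes=2):
    """Decide whether a new heartbeat for the same file is due.

    frequency_minutes is an assumed default, not taken from the diff.
    """
    if now - last_time > frequency_minutes * 60:
        return True
    # File saves are reported sooner, but no more often than every 2 seconds.
    if is_write and now - last_time > 2:
        return True
    return False
```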

|

||||

|

||||

@ -183,111 +393,235 @@ def is_view_active(view):

|

||||

return False

|

||||

|

||||

|

||||

def handle_heartbeat(view, is_write=False):

|

||||

def handle_activity(view, is_write=False):

|

||||

window = view.window()

|

||||

if window is not None:

|

||||

target_file = view.file_name()

|

||||

project = window.project_data() if hasattr(window, 'project_data') else None

|

||||

folders = window.folders()

|

||||

thread = SendHeartbeatThread(target_file, view, is_write=is_write, project=project, folders=folders)

|

||||

thread.start()

|

||||

entity = view.file_name()

|

||||

if entity:

|

||||

timestamp = time.time()

|

||||

last_file = LAST_HEARTBEAT['file']

|

||||

if entity != last_file or enough_time_passed(timestamp, is_write):

|

||||

project = window.project_data() if hasattr(window, 'project_data') else None

|

||||

folders = window.folders()

|

||||

append_heartbeat(entity, timestamp, is_write, view, project, folders)

|

||||

|

||||

|

||||

class SendHeartbeatThread(threading.Thread):

|

||||

def append_heartbeat(entity, timestamp, is_write, view, project, folders):

|

||||

global LAST_HEARTBEAT

|

||||

|

||||

def __init__(self, target_file, view, is_write=False, project=None, folders=None, force=False):

|

||||

# add this heartbeat to queue

|

||||

heartbeat = {

|

||||

'entity': entity,

|

||||

'timestamp': timestamp,

|

||||

'is_write': is_write,

|

||||

'cursorpos': view.sel()[0].begin() if view.sel() else None,

|

||||

'project': project,

|

||||

'folders': folders,

|

||||

}

|

||||

HEARTBEATS.put_nowait(heartbeat)

|

||||

|

||||

# make this heartbeat the LAST_HEARTBEAT

|

||||

LAST_HEARTBEAT = {

|

||||

'file': entity,

|

||||

'time': timestamp,

|

||||

'is_write': is_write,

|

||||

}

|

||||

|

||||

# process the queue of heartbeats in the future

|

||||

seconds = 4

|

||||

set_timeout(process_queue, seconds)

|

||||

|

||||

|

||||

def process_queue():

|

||||

try:

|

||||

heartbeat = HEARTBEATS.get_nowait()

|

||||

except queue.Empty:

|

||||

return

|

||||

|

||||

has_extra_heartbeats = False

|

||||

extra_heartbeats = []

|

||||

try:

|

||||

while True:

|

||||

extra_heartbeats.append(HEARTBEATS.get_nowait())

|

||||

has_extra_heartbeats = True

|

||||

except queue.Empty:

|

||||

pass

|

||||

|

||||

thread = SendHeartbeatsThread(heartbeat)

|

||||

if has_extra_heartbeats:

|

||||

thread.add_extra_heartbeats(extra_heartbeats)

|

||||

thread.start()
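`process_queue` takes the first queued heartbeat as the primary one and treats everything else in the queue as extras. The same draining pattern, separated from the plugin's thread class, can be sketched as follows; `drain` is a hypothetical name and the sketch targets Python 3's `queue` module.

```python
import queue


def drain(pending):
    """Split a queue into (first_item, remaining_items) without blocking."""
    try:
        first = pending.get_nowait()
    except queue.Empty:
        return None, []
    extras = []
    try:
        while True:
            extras.append(pending.get_nowait())
    except queue.Empty:
        pass
    return first, extras
```

Because `get_nowait()` never blocks, a timer callback that fires after the queue has already been emptied by an earlier callback simply returns without doing any work.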

|

||||

|

||||

|

||||

class SendHeartbeatsThread(threading.Thread):

|

||||

"""Non-blocking thread for sending heartbeats to api.

|

||||

"""

|

||||

|

||||

def __init__(self, heartbeat):

|

||||

threading.Thread.__init__(self)

|

||||

self.lock = LOCK

|

||||

self.target_file = target_file

|

||||

self.is_write = is_write

|

||||

self.project = project

|

||||

self.folders = folders

|

||||

self.force = force

|

||||

|

||||

self.debug = SETTINGS.get('debug')

|

||||

self.api_key = SETTINGS.get('api_key', '')

|

||||

self.ignore = SETTINGS.get('ignore', [])

|

||||

self.last_heartbeat = LAST_HEARTBEAT.copy()

|

||||

self.cursorpos = view.sel()[0].begin() if view.sel() else None

|

||||

self.view = view

|

||||

|

||||

self.heartbeat = heartbeat

|

||||

self.has_extra_heartbeats = False

|

||||

|

||||

def add_extra_heartbeats(self, extra_heartbeats):

|

||||

self.has_extra_heartbeats = True

|

||||

self.extra_heartbeats = extra_heartbeats

|

||||

|

||||

def run(self):

|

||||

with self.lock:

|

||||

if self.target_file:

|

||||

self.timestamp = time.time()

|

||||

if self.force or self.target_file != self.last_heartbeat['file'] or enough_time_passed(self.timestamp, self.last_heartbeat, self.is_write):

|

||||

self.send_heartbeat()

|

||||

"""Running in background thread."""

|

||||

|

||||

def send_heartbeat(self):

|

||||

if not self.api_key:

|

||||

print('[WakaTime] Error: missing api key.')

|

||||

return

|

||||

ua = 'sublime/%d sublime-wakatime/%s' % (ST_VERSION, __version__)

|

||||

cmd = [

|

||||

API_CLIENT,

|

||||

'--file', self.target_file,

|

||||

'--time', str('%f' % self.timestamp),

|

||||

'--plugin', ua,

|

||||

'--key', str(bytes.decode(self.api_key.encode('utf8'))),

|

||||

]

|

||||

if self.is_write:

|

||||

cmd.append('--write')

|

||||

if self.project and self.project.get('name'):

|

||||

cmd.extend(['--alternate-project', self.project.get('name')])

|

||||

elif self.folders:

|

||||

project_name = find_project_from_folders(self.folders, self.target_file)

|

||||

self.send_heartbeats()

|

||||

|

||||

def build_heartbeat(self, entity=None, timestamp=None, is_write=None,

|

||||

cursorpos=None, project=None, folders=None):

|

||||

"""Returns a dict for passing to wakatime-cli as arguments."""

|

||||

|

||||

heartbeat = {

|

||||

'entity': entity,

|

||||

'timestamp': timestamp,

|

||||

'is_write': is_write,

|

||||

}

|

||||

|

||||

if project and project.get('name'):

|

||||

heartbeat['alternate_project'] = project.get('name')

|

||||

elif folders:

|

||||

project_name = find_project_from_folders(folders, entity)

|

||||

if project_name:

|

||||

cmd.extend(['--alternate-project', project_name])

|

||||

if self.cursorpos is not None:

|

||||

cmd.extend(['--cursorpos', '{0}'.format(self.cursorpos)])

|

||||

for pattern in self.ignore:

|

||||

cmd.extend(['--ignore', pattern])

|

||||

if self.debug:

|

||||

cmd.append('--verbose')

|

||||

heartbeat['alternate_project'] = project_name

|

||||

|

||||

if cursorpos is not None:

|

||||

heartbeat['cursorpos'] = '{0}'.format(cursorpos)

|

||||

|

||||

return heartbeat

|

||||

|

||||

def send_heartbeats(self):

|

||||

if python_binary():

|

||||

cmd.insert(0, python_binary())

|

||||

heartbeat = self.build_heartbeat(**self.heartbeat)

|

||||

ua = 'sublime/%d sublime-wakatime/%s' % (ST_VERSION, __version__)

|

||||

cmd = [

|

||||

python_binary(),

|

||||

API_CLIENT,

|

||||

'--entity', heartbeat['entity'],

|

||||

'--time', str('%f' % heartbeat['timestamp']),

|

||||

'--plugin', ua,

|

||||

]

|

||||

if self.api_key:

|

||||

cmd.extend(['--key', str(bytes.decode(self.api_key.encode('utf8')))])

|

||||

if heartbeat['is_write']:

|

||||

cmd.append('--write')

|

||||

if heartbeat.get('alternate_project'):

|

||||

cmd.extend(['--alternate-project', heartbeat['alternate_project']])

|

||||

if heartbeat.get('cursorpos') is not None:

|

||||

cmd.extend(['--cursorpos', heartbeat['cursorpos']])

|

||||

for pattern in self.ignore:

|

||||

cmd.extend(['--ignore', pattern])

|

||||

if self.debug:

|

||||

print('[WakaTime] %s' % ' '.join(obfuscate_apikey(cmd)))

|

||||

if platform.system() == 'Windows':

|

||||

Popen(cmd, shell=False)

|

||||

cmd.append('--verbose')

|

||||

if self.has_extra_heartbeats:

|

||||

cmd.append('--extra-heartbeats')

|

||||

stdin = PIPE

|

||||

extra_heartbeats = [self.build_heartbeat(**x) for x in self.extra_heartbeats]

|

||||

extra_heartbeats = json.dumps(extra_heartbeats)

|

||||

else:

|

||||

with open(os.path.join(os.path.expanduser('~'), '.wakatime.log'), 'a') as stderr:

|

||||

Popen(cmd, stderr=stderr)

|

||||

self.sent()

|

||||

extra_heartbeats = None

|

||||

stdin = None

|

||||

|

||||

log(DEBUG, ' '.join(obfuscate_apikey(cmd)))

|

||||

try:

|

||||

process = Popen(cmd, stdin=stdin, stdout=PIPE, stderr=STDOUT)

|

||||

inp = None

|

||||

if self.has_extra_heartbeats:

|

||||

inp = "{0}\n".format(extra_heartbeats)

|

||||

inp = inp.encode('utf-8')

|

||||

output, err = process.communicate(input=inp)

|

||||

output = u(output)

|

||||

retcode = process.poll()

|

||||

if (not retcode or retcode == 102) and not output:

|

||||

self.sent()

|

||||

else:

|

||||

update_status_bar('Error')

|

||||

if retcode:

|

||||

log(DEBUG if retcode == 102 else ERROR, 'wakatime-core exited with status: {0}'.format(retcode))

|

||||

if output:

|

||||

log(ERROR, u('wakatime-core output: {0}').format(output))

|

||||

except:

|

||||

log(ERROR, u(sys.exc_info()[1]))

|

||||

update_status_bar('Error')

|

||||

|

||||

else:

|

||||

print('[WakaTime] Error: Unable to find python binary.')

|

||||

log(ERROR, 'Unable to find python binary.')

|

||||

update_status_bar('Error')

|

||||

|

||||

def sent(self):

|

||||

sublime.set_timeout(self.set_status_bar, 0)

|

||||

sublime.set_timeout(self.set_last_heartbeat, 0)

|

||||

update_status_bar('OK')

|

||||

|

||||

def set_status_bar(self):

|

||||

if SETTINGS.get('status_bar_message'):

|

||||

self.view.set_status('wakatime', datetime.now().strftime(SETTINGS.get('status_bar_message_fmt')))

|

||||

|

||||

def set_last_heartbeat(self):

|

||||

global LAST_HEARTBEAT

|

||||

LAST_HEARTBEAT = {

|

||||

'file': self.target_file,

|

||||

'time': self.timestamp,

|

||||

'is_write': self.is_write,

|

||||

}

|

||||

def download_python():

|

||||

thread = DownloadPython()

|

||||

thread.start()

|

||||

|

||||

|

||||

class DownloadPython(threading.Thread):

|

||||

"""Non-blocking thread for extracting embeddable Python on Windows machines.

|

||||

"""

|

||||

|

||||

def run(self):

|

||||

log(INFO, 'Downloading embeddable Python...')

|

||||

|

||||

ver = '3.5.0'

|

||||

arch = 'amd64' if platform.architecture()[0] == '64bit' else 'win32'

|

||||

url = 'https://www.python.org/ftp/python/{ver}/python-{ver}-embed-{arch}.zip'.format(

|

||||

ver=ver,

|

||||

arch=arch,

|

||||

)

|

||||

|

||||

if not os.path.exists(resources_folder()):

|

||||

os.makedirs(resources_folder())

|

||||

|

||||

zip_file = os.path.join(resources_folder(), 'python.zip')

|

||||

try:

|

||||

urllib.urlretrieve(url, zip_file)

|

||||

except AttributeError:

|

||||

urllib.request.urlretrieve(url, zip_file)

|

||||

|

||||

log(INFO, 'Extracting Python...')

|

||||

with ZipFile(zip_file) as zf:

|

||||

path = os.path.join(resources_folder(), 'python')

|

||||

zf.extractall(path)

|

||||

|

||||

try:

|

||||

os.remove(zip_file)

|

||||

except:

|

||||

pass

|

||||

|

||||

log(INFO, 'Finished extracting Python.')

|

||||

|

||||

|

||||

def plugin_loaded():

|

||||

global SETTINGS

|

||||

print('[WakaTime] Initializing WakaTime plugin v%s' % __version__)

|

||||

SETTINGS = sublime.load_settings(SETTINGS_FILE)

|

||||

|

||||

log(INFO, 'Initializing WakaTime plugin v%s' % __version__)

|

||||

update_status_bar('Initializing')

|

||||

|

||||

if not python_binary():

|

||||

sublime.error_message("Unable to find Python binary!\nWakaTime needs Python to work correctly.\n\nGo to https://www.python.org/downloads")

|

||||

return

|

||||

log(WARNING, 'Python binary not found.')

|

||||

if platform.system() == 'Windows':

|

||||

set_timeout(download_python, 0)

|

||||

else:

|

||||

sublime.error_message("Unable to find Python binary!\nWakaTime needs Python to work correctly.\n\nGo to https://www.python.org/downloads")

|

||||

return

|

||||

|

||||

SETTINGS = sublime.load_settings(SETTINGS_FILE)

|

||||

after_loaded()

|

||||

|

||||

|

||||

def after_loaded():

|

||||

if not prompt_api_key():

|

||||

sublime.set_timeout(after_loaded, 500)

|

||||

set_timeout(after_loaded, 0.5)

|

||||

|

||||

|

||||

# need to call plugin_loaded because only ST3 will auto-call it

|

||||

@@ -298,15 +632,15 @@ if ST_VERSION < 3000:

|

||||

class WakatimeListener(sublime_plugin.EventListener):

|

||||

|

||||

def on_post_save(self, view):

|

||||

handle_heartbeat(view, is_write=True)

|

||||

handle_activity(view, is_write=True)

|

||||

|

||||

def on_selection_modified(self, view):

|

||||

if is_view_active(view):

|

||||

handle_heartbeat(view)

|

||||

handle_activity(view)

|

||||

|

||||

def on_modified(self, view):

|

||||

if is_view_active(view):

|

||||

handle_heartbeat(view)

|

||||

handle_activity(view)

|

||||

|

||||

|

||||

class WakatimeDashboardCommand(sublime_plugin.ApplicationCommand):

|

||||

|

||||
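The added lines in `send_heartbeats` serialize the extra heartbeats with `json.dumps` and hand them to the child process as a single newline-terminated line on stdin via `process.communicate`. A minimal sketch of that pattern follows. It assumes a stand-in child process (a one-line Python script that counts the heartbeats it receives) in place of wakatime-cli; `send_extra` and `CHILD_SCRIPT` are hypothetical names used only for illustration.

```python
import json
import sys
from subprocess import PIPE, STDOUT, Popen

# Stand-in for wakatime-cli (assumption): reads one JSON line from stdin
# and prints how many heartbeats it contained.
CHILD_SCRIPT = 'import sys, json; print(len(json.loads(sys.stdin.readline())))'


def send_extra(extra_heartbeats):
    """Pipe a list of heartbeat dicts to a child process as one JSON line."""
    cmd = [sys.executable, '-c', CHILD_SCRIPT]
    process = Popen(cmd, stdin=PIPE, stdout=PIPE, stderr=STDOUT)
    # One JSON document, newline-terminated, encoded as UTF-8 bytes.
    inp = '{0}\n'.format(json.dumps(extra_heartbeats)).encode('utf-8')
    output, _ = process.communicate(input=inp)
    retcode = process.poll()
    return retcode, output.decode('utf-8').strip()
```

Because stderr is merged into stdout, any output at all from the child can be treated as a sign of trouble, which is how the diff decides between `self.sent()` and the error branch.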

@@ -19,5 +19,5 @@

|

||||

"status_bar_message": true,

|

||||

|

||||

// Status bar message format.

|

||||

"status_bar_message_fmt": "WakaTime active %I:%M %p"

|

||||

"status_bar_message_fmt": "WakaTime {status} %I:%M %p"

|

||||

}

|

||||

|

||||

@@ -1,9 +1,9 @@

|

||||

__title__ = 'wakatime'

|

||||

__description__ = 'Common interface to the WakaTime api.'

|

||||

__url__ = 'https://github.com/wakatime/wakatime'

|

||||

__version_info__ = ('4', '0', '15')

|

||||

__version_info__ = ('6', '0', '9')

|

||||

__version__ = '.'.join(__version_info__)

|

||||

__author__ = 'Alan Hamlett'

|

||||

__author_email__ = 'alan@wakatime.com'

|

||||

__license__ = 'BSD'

|

||||

__copyright__ = 'Copyright 2014 Alan Hamlett'

|

||||

__copyright__ = 'Copyright 2016 Alan Hamlett'

|

||||

|

||||

@@ -14,4 +14,4 @@

|

||||

__all__ = ['main']

|

||||

|

||||

|

||||

from .base import main

|

||||

from .main import execute

|

||||

|

||||

@@ -11,8 +11,25 @@

|

||||

|

||||

import os

|

||||

import sys

|

||||

sys.path.insert(0, os.path.dirname(os.path.dirname(os.path.abspath(__file__))))

|

||||

import wakatime

|

||||

|

||||

|

||||

# get path to local wakatime package

|

||||

package_folder = os.path.dirname(os.path.dirname(os.path.abspath(__file__)))

|

||||

|

||||

# add local wakatime package to sys.path

|

||||

sys.path.insert(0, package_folder)

|

||||

|

||||

# import local wakatime package

|

||||

try:

|

||||

import wakatime

|

||||

except (TypeError, ImportError):

|

||||

# on Windows, non-ASCII characters in import path can be fixed using

|

||||

# the script path from sys.argv[0].

|

||||

# More info at https://github.com/wakatime/wakatime/issues/32

|

||||

package_folder = os.path.dirname(os.path.dirname(os.path.abspath(sys.argv[0])))

|

||||

sys.path.insert(0, package_folder)

|

||||

import wakatime

|

||||

|

||||

|

||||

if __name__ == '__main__':

|

||||

sys.exit(wakatime.main(sys.argv))

|

||||

sys.exit(wakatime.execute(sys.argv[1:]))

|

||||

|

||||

@@ -17,32 +17,49 @@ is_py2 = (sys.version_info[0] == 2)

|

||||

is_py3 = (sys.version_info[0] == 3)

|

||||

|

||||

|

||||

if is_py2:

|

||||

if is_py2: # pragma: nocover

|

||||

|

||||

def u(text):

|

||||

if text is None:

|

||||

return None

|

||||

try:

|

||||

return text.decode('utf-8')

|

||||

except:

|

||||

try:

|

||||

return unicode(text)

|

||||

return text.decode(sys.getdefaultencoding())

|

||||

except:

|

||||

return text

|

||||

try:

|

||||

return unicode(text)

|

||||

except:

|

||||

return text.decode('utf-8', 'replace')

|

||||

open = codecs.open

|

||||

basestring = basestring

|

||||

|

||||

|

||||

elif is_py3:

|

||||

elif is_py3: # pragma: nocover

|

||||

|

||||

def u(text):

|

||||

if text is None:

|

||||

return None

|

||||

if isinstance(text, bytes):

|

||||

return text.decode('utf-8')

|

||||

return str(text)

|

||||

try:

|

||||

return text.decode('utf-8')

|

||||

except:

|

||||

try:

|

||||

return text.decode(sys.getdefaultencoding())

|

||||

except:

|

||||

pass

|

||||

try:

|

||||

return str(text)

|

||||

except:

|

||||

return text.decode('utf-8', 'replace')

|

||||

open = open

|

||||

basestring = (str, bytes)

|

||||

|

||||

|

||||

try:

|

||||

from importlib import import_module

|

||||

except ImportError:

|

||||

except ImportError: # pragma: nocover

|

||||

def _resolve_name(name, package, level):

|

||||

"""Return the absolute name of the module to be imported."""

|

||||

if not hasattr(package, 'rindex'):

|

||||

|

||||
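The Python 3 branch of `u()` in this hunk appears to move from a single `decode('utf-8')` to a chain of fallbacks. The sketch below is one reading of the added lines, not a verbatim copy: the indentation is inferred because the diff view lost it, and the bare `except:` clauses are narrowed to named exceptions here.

```python
import sys


def u(text):
    """Coerce bytes or any other object to text without raising on bad bytes."""
    if text is None:
        return None
    if isinstance(text, bytes):
        try:
            return text.decode('utf-8')
        except UnicodeDecodeError:
            try:
                # Second attempt with the interpreter's default encoding.
                return text.decode(sys.getdefaultencoding())
            except UnicodeDecodeError:
                pass
    try:
        # Last resort for bytes that failed to decode, and the normal
        # path for every non-bytes object.
        return str(text)
    except Exception:
        return text.decode('utf-8', 'replace')
```

Valid UTF-8 bytes come back decoded, non-bytes objects go through `str()`, and undecodable bytes still yield a string instead of an exception.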

40

packages/wakatime/constants.py

Normal file


@@ -0,0 +1,40 @@

# -*- coding: utf-8 -*-
"""
    wakatime.constants
    ~~~~~~~~~~~~~~~~~~

    Constant variable definitions.

    :copyright: (c) 2016 Alan Hamlett.
    :license: BSD, see LICENSE for more details.
"""

""" Success
Exit code used when a heartbeat was sent successfully.
"""
SUCCESS = 0

""" Api Error
Exit code used when the WakaTime API returned an error.
"""
API_ERROR = 102

""" Config File Parse Error
Exit code used when the ~/.wakatime.cfg config file could not be parsed.
"""
CONFIG_FILE_PARSE_ERROR = 103

""" Auth Error
Exit code used when our api key is invalid.
"""
AUTH_ERROR = 104

""" Unknown Error
Exit code used when there was an unhandled exception.
"""
UNKNOWN_ERROR = 105

""" Malformed Heartbeat Error
Exit code used when the JSON input from `--extra-heartbeats` is malformed.
"""
MALFORMED_HEARTBEAT_ERROR = 106

||||
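The exit codes defined in `constants.py` are what the Sublime plugin inspects after `process.communicate()`: a zero or 102 status with no output counts as sent, 102 is logged at DEBUG, and any other non-zero status is logged at ERROR. A minimal sketch of that decision follows. `classify_exit` is a hypothetical helper, not part of the plugin, and the constants are copied from the file above.

```python
import logging

SUCCESS = 0
API_ERROR = 102
CONFIG_FILE_PARSE_ERROR = 103
AUTH_ERROR = 104
UNKNOWN_ERROR = 105
MALFORMED_HEARTBEAT_ERROR = 106


def classify_exit(retcode, output=''):
    """Return (ok, log_level) for a finished wakatime-cli process.

    ok mirrors the plugin's `(not retcode or retcode == 102) and not output`
    check; log_level is None when the exit status itself is not logged.
    """
    ok = (not retcode or retcode == API_ERROR) and not output
    if not retcode:
        level = None
    elif retcode == API_ERROR:
        level = logging.DEBUG
    else:
        level = logging.ERROR
    return ok, level
```

For example, `classify_exit(AUTH_ERROR)` reports a failure to be logged at ERROR, while `classify_exit(API_ERROR)` is treated as sent and logged only at DEBUG.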

@@ -1,7 +1,7 @@

|

||||

# -*- coding: utf-8 -*-

|

||||

"""

|

||||

wakatime.languages

|

||||

~~~~~~~~~~~~~~~~~~

|

||||

wakatime.dependencies

|

||||

~~~~~~~~~~~~~~~~~~~~~

|

||||

|

||||

Parse dependencies from a source code file.

|

||||

|

||||

@@ -10,9 +10,11 @@

|

||||

"""

|

||||

|

||||

import logging

|

||||

import traceback

|

||||

import re

|

||||

import sys

|

||||

|

||||

from ..compat import u, open, import_module

|

||||

from ..exceptions import NotYetImplemented

|

||||

|

||||

|

||||

log = logging.getLogger('WakaTime')

|

||||

@@ -23,26 +25,28 @@ class TokenParser(object):

|

||||

language, inherit from this class and implement the :meth:`parse` method

|

||||

to return a list of dependency strings.

|

||||

"""

|

||||

source_file = None

|

||||

lexer = None

|

||||

dependencies = []

|

||||

tokens = []

|

||||

exclude = []

|

||||

|

||||

def __init__(self, source_file, lexer=None):

|

||||

self._tokens = None

|

||||

self.dependencies = []

|

||||

self.source_file = source_file

|

||||

self.lexer = lexer

|

||||

self.exclude = [re.compile(x, re.IGNORECASE) for x in self.exclude]

|

||||

|

||||

@property

|

||||

def tokens(self):

|

||||

if self._tokens is None:

|

||||

self._tokens = self._extract_tokens()

|

||||

return self._tokens

|

||||

|

||||

def parse(self, tokens=[]):

|

||||

""" Should return a list of dependencies.

|

||||

"""

|

||||

if not tokens and not self.tokens:

|

||||

self.tokens = self._extract_tokens()

|

||||

raise Exception('Not yet implemented.')

|

||||

raise NotYetImplemented()

|

||||

|

||||

def append(self, dep, truncate=False, separator=None, truncate_to=None,

|

||||

strip_whitespace=True):

|

||||

if dep == 'as':

|

||||

print('***************** as')

|

||||

self._save_dependency(

|

||||

dep,

|

||||

truncate=truncate,

|

||||

@@ -51,10 +55,21 @@ class TokenParser(object):

|

||||

strip_whitespace=strip_whitespace,

|

||||

)

|

||||

|

||||

def partial(self, token):

|

||||

return u(token).split('.')[-1]

|

||||

|

||||

def _extract_tokens(self):

|

||||

if self.lexer:

|

||||

with open(self.source_file, 'r', encoding='utf-8') as fh:

|

||||

return self.lexer.get_tokens_unprocessed(fh.read(512000))

|

||||

try:

|

||||

with open(self.source_file, 'r', encoding='utf-8') as fh:

|

||||

return self.lexer.get_tokens_unprocessed(fh.read(512000))

|

||||

except:

|

||||

pass

|

||||

try:

|

||||

with open(self.source_file, 'r', encoding=sys.getfilesystemencoding()) as fh:

|

||||

return self.lexer.get_tokens_unprocessed(fh.read(512000)) # pragma: nocover

|

||||

except:

|

||||

pass

|

||||

return []

|

||||

|

||||

def _save_dependency(self, dep, truncate=False, separator=None,

|

||||

@@ -64,13 +79,21 @@ class TokenParser(object):

|

||||

separator = u('.')

|

||||

separator = u(separator)

|

||||

dep = dep.split(separator)

|

||||

if truncate_to is None or truncate_to < 0 or truncate_to > len(dep) - 1:

|

||||

truncate_to = len(dep) - 1

|

||||

dep = dep[0] if len(dep) == 1 else separator.join(dep[0:truncate_to])

|

||||

if truncate_to is None or truncate_to < 1:

|

||||

truncate_to = 1

|

||||

if truncate_to > len(dep):

|

||||

truncate_to = len(dep)

|

||||

dep = dep[0] if len(dep) == 1 else separator.join(dep[:truncate_to])

|

||||

if strip_whitespace:

|

||||

dep = dep.strip()

|

||||

if dep:

|

||||

self.dependencies.append(dep)

|

||||

if dep and (not separator or not dep.startswith(separator)):

|

||||

should_exclude = False

|

||||

for compiled in self.exclude:

|

||||

if compiled.search(dep):

|

||||

should_exclude = True

|

||||

break

|

||||

if not should_exclude:

|

||||

self.dependencies.append(dep)

|

||||

|

||||

|

||||

class DependencyParser(object):

|

||||

@@ -83,7 +106,7 @@ class DependencyParser(object):

|

||||

self.lexer = lexer

|

||||

|

||||

if self.lexer:

|

||||

module_name = self.lexer.__module__.split('.')[-1]

|

||||

module_name = self.lexer.__module__.rsplit('.', 1)[-1]

|

||||

class_name = self.lexer.__class__.__name__.replace('Lexer', 'Parser', 1)

|

||||

else:

|

||||

module_name = 'unknown'

|

||||

@@ -96,7 +119,7 @@ class DependencyParser(object):

|

||||

except AttributeError:

|

||||

log.debug('Module {0} is missing class {1}'.format(module.__name__, class_name))

|

||||

except ImportError:

|

||||

log.debug(traceback.format_exc())

|

||||

log.traceback(logging.DEBUG)

|

||||

|

||||

def parse(self):

|

||||

if self.parser:

|

||||
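The reworked `_save_dependency` clamps `truncate_to` to the range 1..len(parts), keeps that many leading components, and then drops any name that matches one of the parser's case-insensitive `exclude` patterns. The standalone sketch below mirrors the added lines under two assumptions: the `if truncate:` wrapper and the default `'.'` separator come from context not shown in the hunk, and `save_dependency` returns the name (or `None`) instead of appending to `self.dependencies`.

```python
import re


def save_dependency(dep, exclude=(), truncate=False, separator=None,
                    truncate_to=None, strip_whitespace=True):
    """Return the normalised dependency name, or None if it is dropped."""
    if truncate:
        if separator is None:
            separator = '.'
        parts = dep.split(separator)
        # Clamp truncate_to into [1, len(parts)].
        if truncate_to is None or truncate_to < 1:
            truncate_to = 1
        if truncate_to > len(parts):
            truncate_to = len(parts)
        dep = parts[0] if len(parts) == 1 else separator.join(parts[:truncate_to])
    if strip_whitespace:
        dep = dep.strip()
    # Skip empty names and names that begin with the separator.
    if dep and (not separator or not dep.startswith(separator)):
        for pattern in exclude:
            if re.search(pattern, dep, re.IGNORECASE):
                return None
        return dep
    return None
```

With these rules `sys/types.h` split on `/` is recorded as `sys`, and `System.Collections.Generic` is reduced to `System` and then dropped by an exclude pattern such as `^system$`.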

51

packages/wakatime/dependencies/c_cpp.py

Normal file


@@ -0,0 +1,51 @@

# -*- coding: utf-8 -*-
"""
    wakatime.languages.c_cpp
    ~~~~~~~~~~~~~~~~~~~~~~~~

    Parse dependencies from C++ code.

    :copyright: (c) 2014 Alan Hamlett.
    :license: BSD, see LICENSE for more details.
"""

from . import TokenParser


class CParser(TokenParser):
    exclude = [
        r'^stdio\.h$',
        r'^stdlib\.h$',
        r'^string\.h$',
        r'^time\.h$',
    ]
    state = None

    def parse(self):
        for index, token, content in self.tokens:
            self._process_token(token, content)
        return self.dependencies

    def _process_token(self, token, content):
        if self.partial(token) == 'Preproc' or self.partial(token) == 'PreprocFile':
            self._process_preproc(token, content)
        else:
            self._process_other(token, content)

    def _process_preproc(self, token, content):
        if self.state == 'include':
            if content != '\n' and content != '#':
                content = content.strip().strip('"').strip('<').strip('>').strip()
                self.append(content, truncate=True, separator='/')
            self.state = None
        elif content.strip().startswith('include'):
            self.state = 'include'
        else:
            self.state = None

    def _process_other(self, token, content):
        pass


class CppParser(CParser):
    pass

||||
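`CParser` is a two-state machine: a preprocessor token starting with `include` arms the `include` state, and the next preprocessor token is taken as the header name with its quotes or angle brackets stripped. The sketch below replays that logic on a hand-written token list. The `(token_type, content)` pairs are an assumption about what a lexer might emit, not captured Pygments output, and the truncation and exclusion done by `self.append` are left out.

```python
def c_includes(tokens):
    """Collect included header names from (token_type, content) pairs."""
    state = None
    found = []
    for token_type, content in tokens:
        # Same idea as TokenParser.partial: keep the last dotted component.
        if token_type.split('.')[-1] not in ('Preproc', 'PreprocFile'):
            continue  # _process_other does nothing and leaves state alone
        if state == 'include':
            if content != '\n' and content != '#':
                found.append(content.strip().strip('"').strip('<').strip('>').strip())
            state = None
        elif content.strip().startswith('include'):
            state = 'include'
        else:
            state = None
    return found


# Hand-written token stream (assumption) for two #include lines.
SAMPLE_TOKENS = [
    ('Token.Comment.Preproc', '#'),
    ('Token.Comment.Preproc', 'include'),
    ('Token.Text', ' '),
    ('Token.Comment.PreprocFile', '<stdio.h>'),
    ('Token.Comment.Preproc', '\n'),
    ('Token.Comment.Preproc', '#'),
    ('Token.Comment.Preproc', 'include'),
    ('Token.Text', ' '),
    ('Token.Comment.PreprocFile', '"util/list.h"'),
    ('Token.Comment.Preproc', '\n'),
]
```

In the real parser each collected name then goes through `append(..., truncate=True, separator='/')`, so `util/list.h` would be stored as `util` and `stdio.h` would be dropped by the `exclude` list.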

@@ -26,10 +26,8 @@ class JsonParser(TokenParser):

|

||||

state = None

|

||||

level = 0

|

||||

|

||||

def parse(self, tokens=[]):

|

||||

def parse(self):

|

||||

self._process_file_name(os.path.basename(self.source_file))

|

||||

if not tokens and not self.tokens:

|

||||

self.tokens = self._extract_tokens()

|

||||

for index, token, content in self.tokens:

|

||||

self._process_token(token, content)

|

||||

return self.dependencies

|

||||

64

packages/wakatime/dependencies/dotnet.py

Normal file


@@ -0,0 +1,64 @@

# -*- coding: utf-8 -*-
"""
    wakatime.languages.dotnet
    ~~~~~~~~~~~~~~~~~~~~~~~~~

    Parse dependencies from .NET code.

    :copyright: (c) 2014 Alan Hamlett.
    :license: BSD, see LICENSE for more details.
"""

from . import TokenParser
from ..compat import u


class CSharpParser(TokenParser):
    exclude = [
        r'^system$',
        r'^microsoft$',
    ]
    state = None
    buffer = u('')

    def parse(self):
        for index, token, content in self.tokens:
            self._process_token(token, content)
        return self.dependencies

    def _process_token(self, token, content):
        if self.partial(token) == 'Keyword':
            self._process_keyword(token, content)
        if self.partial(token) == 'Namespace' or self.partial(token) == 'Name':
            self._process_namespace(token, content)
        elif self.partial(token) == 'Punctuation':
            self._process_punctuation(token, content)
        else:
            self._process_other(token, content)

    def _process_keyword(self, token, content):
        if content == 'using':
            self.state = 'import'
            self.buffer = u('')

    def _process_namespace(self, token, content):
        if self.state == 'import':
            if u(content) != u('import') and u(content) != u('package') and u(content) != u('namespace') and u(content) != u('static'):
                if u(content) == u(';'):  # pragma: nocover
                    self._process_punctuation(token, content)
                else:
                    self.buffer += u(content)

    def _process_punctuation(self, token, content):
        if self.state == 'import':
            if u(content) == u(';'):
                self.append(self.buffer, truncate=True)
                self.buffer = u('')
                self.state = None
            elif u(content) == u('='):
                self.buffer = u('')
            else:
                self.buffer += u(content)

    def _process_other(self, token, content):
        pass

||||
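`CSharpParser` accumulates name and punctuation tokens into a buffer after a `using` keyword, clears the buffer on `=` (so an alias is discarded and only the aliased namespace survives), and emits the buffer on `;`. The sketch below follows that flow on a hand-written token list. The token pairs are assumptions, not real lexer output, the rarely hit `;`-inside-a-name branch is omitted, and the raw buffers are returned without the `truncate=True` step.

```python
def csharp_usings(tokens):
    """Collect the namespace text of each `using ...;` directive."""
    state = None
    buffer = ''
    found = []
    for token_type, content in tokens:
        kind = token_type.split('.')[-1]
        if kind == 'Keyword' and content == 'using':
            state = 'import'
            buffer = ''
        if kind in ('Namespace', 'Name'):
            if state == 'import' and content not in (
                    'import', 'package', 'namespace', 'static'):
                buffer += content
        elif kind == 'Punctuation' and state == 'import':
            if content == ';':
                found.append(buffer)   # end of the directive
                buffer = ''
                state = None
            elif content == '=':
                buffer = ''            # drop the alias on the left of '='
            else:
                buffer += content      # e.g. the dots between name parts
    return found


# Hand-written tokens (assumption) for:
#   using Newtonsoft.Json;
#   using Json = Newtonsoft.Json;
SAMPLE_TOKENS = [
    ('Token.Keyword', 'using'), ('Token.Text', ' '),
    ('Token.Name.Namespace', 'Newtonsoft.Json'), ('Token.Punctuation', ';'),
    ('Token.Text', '\n'),
    ('Token.Keyword', 'using'), ('Token.Text', ' '),
    ('Token.Name', 'Json'), ('Token.Text', ' '),
    ('Token.Punctuation', '='), ('Token.Text', ' '),
    ('Token.Name', 'Newtonsoft'), ('Token.Punctuation', '.'),
    ('Token.Name', 'Json'), ('Token.Punctuation', ';'),
]
```

In the parser itself the buffer is passed to `append(..., truncate=True)`, so both directives would be recorded as `Newtonsoft`, while `System` and `Microsoft` are filtered by `exclude`.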

77

packages/wakatime/dependencies/go.py

Normal file


@@ -0,0 +1,77 @@

# -*- coding: utf-8 -*-
"""
    wakatime.languages.go
    ~~~~~~~~~~~~~~~~~~~~~

    Parse dependencies from Go code.

    :copyright: (c) 2016 Alan Hamlett.
    :license: BSD, see LICENSE for more details.
"""

from . import TokenParser


class GoParser(TokenParser):
    state = None
    parens = 0
    aliases = 0
    exclude = [
        r'^"fmt"$',
    ]

    def parse(self):
        for index, token, content in self.tokens:
            self._process_token(token, content)
        return self.dependencies

    def _process_token(self, token, content):
        if self.partial(token) == 'Namespace':
            self._process_namespace(token, content)
        elif self.partial(token) == 'Punctuation':
            self._process_punctuation(token, content)
        elif self.partial(token) == 'String':
            self._process_string(token, content)
        elif self.partial(token) == 'Text':
            self._process_text(token, content)
        elif self.partial(token) == 'Other':
            self._process_other(token, content)
        else:
            self._process_misc(token, content)

    def _process_namespace(self, token, content):
        self.state = content
        self.parens = 0
        self.aliases = 0

    def _process_string(self, token, content):
        if self.state == 'import':
            self.append(content, truncate=False)

    def _process_punctuation(self, token, content):
        if content == '(':
            self.parens += 1
        elif content == ')':
            self.parens -= 1
        elif content == '.':
            self.aliases += 1
        else:
            self.state = None

    def _process_text(self, token, content):
        if self.state == 'import':
            if content == "\n" and self.parens <= 0:
                self.state = None
                self.parens = 0
                self.aliases = 0
        else:
            self.state = None

    def _process_other(self, token, content):
        if self.state == 'import':
            self.aliases += 1
        else:
            self.state = None

    def _process_misc(self, token, content):
        self.state = None

||||
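`GoParser` stays in the `import` state while it is inside an import declaration, using a parenthesis counter so that a newline only ends the declaration once a grouped `import ( ... )` block has closed. The sketch below reproduces that bookkeeping on a hand-written token list. The token pairs are assumptions, not real lexer output, and the `aliases` counter is left out because the shown code increments it without reading it.

```python
def go_imports(tokens):
    """Collect quoted import paths from (token_type, content) pairs."""
    state = None
    parens = 0
    found = []
    for token_type, content in tokens:
        kind = token_type.split('.')[-1]
        if kind == 'Namespace':
            state, parens = content, 0      # e.g. 'import' or 'package'
        elif kind == 'Punctuation':
            if content == '(':
                parens += 1
            elif content == ')':
                parens -= 1
            elif content != '.':
                state = None
        elif kind == 'String':
            if state == 'import':
                found.append(content)       # quotes are kept, as in the parser
        elif kind == 'Text':
            if state == 'import':
                if content == '\n' and parens <= 0:
                    state, parens = None, 0
            else:
                state = None
        elif kind == 'Other':
            if state != 'import':
                state = None
        else:
            state = None
    return found


# Hand-written tokens (assumption) for a grouped import followed by a
# string literal that is not an import.
SAMPLE_TOKENS = [
    ('Token.Keyword.Namespace', 'import'), ('Token.Text', ' '),
    ('Token.Punctuation', '('), ('Token.Text', '\n'),
    ('Token.Text', '\t'), ('Token.Literal.String', '"os"'), ('Token.Text', '\n'),
    ('Token.Text', '\t'), ('Token.Literal.String', '"github.com/user/pkg"'),
    ('Token.Text', '\n'),
    ('Token.Punctuation', ')'), ('Token.Text', '\n'),
    ('Token.Literal.String', '"not an import"'),
]
```

Import paths keep their surrounding double quotes, which is why the `exclude` pattern for the standard `fmt` package is written as `^"fmt"$`.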

96

packages/wakatime/dependencies/jvm.py

Normal file


@@ -0,0 +1,96 @@

|

||||

# -*- coding: utf-8 -*-

|

||||

"""