mirror of

https://github.com/wakatime/sublime-wakatime.git

synced 2023-08-10 21:13:02 +03:00

Compare commits

494 Commits

| Author | SHA1 | Date | |

|---|---|---|---|

| ef77e1f178 | |||

| 4025decc12 | |||

| 086c700151 | |||

| 650bb6fa26 | |||

| 389c84673e | |||

| 6fa1321a95 | |||

| f1a8fcab44 | |||

| 3937b083c5 | |||

| 711aab0d18 | |||

| cb8ce3a54e | |||

| 7db5fe0a5d | |||

| cebcfaa0e9 | |||

| f8faed6e47 | |||

| fb303e048f | |||

| 4f11222c2b | |||

| 72b72dc9f0 | |||

| 1b07d0442b | |||

| fbd8e84ea1 | |||

| 01c0e7758e | |||

| 28556de3b6 | |||

| 809e43cfe5 | |||

| 22ddbe27b0 | |||

| ddaf60b8b0 | |||

| 2e6a87c67e | |||

| 01503b1c20 | |||

| 5a4ac9c11d | |||

| fa0a3aacb5 | |||

| d588451468 | |||

| 483d8f596e | |||

| 03f2d6d580 | |||

| e1390d7647 | |||

| c87bdd041c | |||

| 3a65395636 | |||

| 0b2f3aa9a4 | |||

| 58ef2cd794 | |||

| 04173d3bcc | |||

| 3206a07476 | |||

| 9330236816 | |||

| 885c11f01a | |||

| 8acda0157a | |||

| 935ddbd5f6 | |||

| b57b1eb696 | |||

| 6ec097b9d1 | |||

| b3ed36d3b2 | |||

| 3669e4df6a | |||

| 3504096082 | |||

| 5990947706 | |||

| 2246e31244 | |||

| b55fe702d3 | |||

| e0fbbb50bb | |||

| 32c0cb5a97 | |||

| 67d8b0d24f | |||

| b8b2f4944b | |||

| a20161164c | |||

| 405211bb07 | |||

| ffc879c4eb | |||

| 1e23919694 | |||

| b2086a3cd2 | |||

| 005b07520c | |||

| 60608bd322 | |||

| cde8f8f1de | |||

| 4adfca154c | |||

| f7b3924a30 | |||

| db00024455 | |||

| 9a6be7ca4e | |||

| 1ea9b2a761 | |||

| bd5e87e030 | |||

| 0256ff4a6a | |||

| 9d170b3276 | |||

| c54e575210 | |||

| 07513d8f10 | |||

| 30902cc050 | |||

| aa7962d49a | |||

| d8c662f3db | |||

| 10d88ebf2d | |||

| 2f28c561b1 | |||

| 24968507df | |||

| 641cd539ed | |||

| 0c65d7e5b2 | |||

| f0532f5b8e | |||

| 8094db9680 | |||

| bf20551849 | |||

| 2b6e32b578 | |||

| 363c3d38e2 | |||

| 88466d7db2 | |||

| 122fcbbee5 | |||

| c41fcec5d8 | |||

| be09b34d44 | |||

| e1ee1c1216 | |||

| a37061924b | |||

| da01fa268b | |||

| c279418651 | |||

| 5cf2c8f7ac | |||

| d1455e77a8 | |||

| 8499e7bafe | |||

| abc26a0864 | |||

| 71ad97ffe9 | |||

| 3ec5995c99 | |||

| 195cf4de36 | |||

| b39eefb4f5 | |||

| bbf5761e26 | |||

| c4df1dc633 | |||

| 360a491cda | |||

| f61a34eda7 | |||

| 48123d7409 | |||

| c8a15d7ac0 | |||

| 202df81e04 | |||

| 5e34f3f6a7 | |||

| d4441e5575 | |||

| 9eac8e2bd3 | |||

| 11d8fc3a09 | |||

| d1f1f51f23 | |||

| b10bb36c09 | |||

| dc9474befa | |||

| b910807e98 | |||

| bc770515f0 | |||

| 9e102d7c5c | |||

| 5c1770fb48 | |||

| 683397534c | |||

| 1c92017543 | |||

| fda1307668 | |||

| 1c84d457c5 | |||

| 1e680ce739 | |||

| 376adbb7d7 | |||

| e0040e185b | |||

| c4a88541d0 | |||

| 0cf621d177 | |||

| db9d6cec97 | |||

| 2c17f49a6b | |||

| 95116d6007 | |||

| 8c52596f8f | |||

| 3109817dc7 | |||

| 0c0f965763 | |||

| 1573e9c825 | |||

| a0b8f349c2 | |||

| 2fb60b1589 | |||

| 02786a744e | |||

| 729a4360ba | |||

| 8f45de85ec | |||

| 4672f70c87 | |||

| 46a9aae942 | |||

| 9e77ce2697 | |||

| 385ba818cc | |||

| 7492c3ce12 | |||

| 03eed88917 | |||

| 60a7ad96b5 | |||

| 2d1d5d336a | |||

| e659759b2d | |||

| a290e5d86d | |||

| d5b922bb10 | |||

| ec7b5e3530 | |||

| aa3f2e8af6 | |||

| f4e53cd682 | |||

| aba72b0f1e | |||

| 5b9d86a57d | |||

| fa40874635 | |||

| 6d4a4cf9eb | |||

| f628b8dd11 | |||

| f932ee9fc6 | |||

| 2f14009279 | |||

| 453d96bf9c | |||

| 9de153f156 | |||

| dcc782338d | |||

| 9d0dba988a | |||

| e76f2e514e | |||

| 224f7cd82a | |||

| 3cce525a84 | |||

| ce885501ad | |||

| c9448a9a19 | |||

| 04f8c61ebc | |||

| 04a4630024 | |||

| 02138220fd | |||

| d0b162bdd8 | |||

| 1b8895cd38 | |||

| 938bbb73d1 | |||

| 008fdc6b49 | |||

| a788625dd0 | |||

| bcbce681c3 | |||

| 35299db832 | |||

| eb7814624c | |||

| 1c092b2fd8 | |||

| 507ef95f71 | |||

| 9777bc7788 | |||

| 20b78defa6 | |||

| 8cb1c557d9 | |||

| 20a1965f13 | |||

| 0b802a554e | |||

| 30186c9b2c | |||

| 311a0b5309 | |||

| b7602d89fb | |||

| 305de46e32 | |||

| c574234927 | |||

| a69c50f470 | |||

| f4b40089f3 | |||

| 08394357b7 | |||

| 205d4eb163 | |||

| c4c27e4e9e | |||

| 9167eb2558 | |||

| eaa3bb5180 | |||

| 7755971d11 | |||

| 7634be5446 | |||

| 5e17ad88f6 | |||

| 24d0f65116 | |||

| a326046733 | |||

| 9bab00fd8b | |||

| b4a13a48b9 | |||

| 21601f9688 | |||

| 4c3ec87341 | |||

| b149d7fc87 | |||

| 52e6107c6e | |||

| b340637331 | |||

| 044867449a | |||

| 9e3f438823 | |||

| 887d55c3f3 | |||

| 19d54f3310 | |||

| 514a8762eb | |||

| 957c74d226 | |||

| 7b0432d6ff | |||

| 09754849be | |||

| 25ad48a97a | |||

| 3b2520afa9 | |||

| 77c2041ad3 | |||

| 8af3b53937 | |||

| 5ef2e6954e | |||

| ca94272de5 | |||

| f19a448d95 | |||

| e178765412 | |||

| 6a7de84b9c | |||

| 48810f2977 | |||

| 260eedb31d | |||

| 02e2bfcad2 | |||

| f14ece63f3 | |||

| cb7f786ec8 | |||

| ab8711d0b1 | |||

| 2354be358c | |||

| 443215bd90 | |||

| c64f125dc4 | |||

| 050b14fb53 | |||

| c7efc33463 | |||

| d0ddbed006 | |||

| 3ce8f388ab | |||

| 90731146f9 | |||

| e1ab92be6d | |||

| 8b59e46c64 | |||

| 006341eb72 | |||

| b54e0e13f6 | |||

| 835c7db864 | |||

| 53e8bb04e9 | |||

| 4aa06e3829 | |||

| 297f65733f | |||

| 5ba5e6d21b | |||

| 32eadda81f | |||

| c537044801 | |||

| a97792c23c | |||

| 4223f3575f | |||

| 284cdf3ce4 | |||

| 27afc41bf4 | |||

| 1fdda0d64a | |||

| c90a4863e9 | |||

| 94343e5b07 | |||

| 03acea6e25 | |||

| 77594700bd | |||

| 6681409e98 | |||

| 8f7837269a | |||

| a523b3aa4d | |||

| 6985ce32bb | |||

| 4be40c7720 | |||

| eeb7fd8219 | |||

| 11fbd2d2a6 | |||

| 3cecd0de5d | |||

| c50100e675 | |||

| c1da94bc18 | |||

| 7f9d6ede9d | |||

| 192a5c7aa7 | |||

| 16bbe21be9 | |||

| 5ebaf12a99 | |||

| 1834e8978a | |||

| 22c8ed74bd | |||

| 12bbb4e561 | |||

| c71cb21cc1 | |||

| eb11b991f0 | |||

| 7ea51d09ba | |||

| b07b59e0c8 | |||

| 9d715e95b7 | |||

| 3edaed53aa | |||

| 865b0bcee9 | |||

| d440fe912c | |||

| 627455167f | |||

| aba89d3948 | |||

| 18d87118e1 | |||

| fd91b9e032 | |||

| 16b15773bf | |||

| f0b518862a | |||

| 7ee7de70d5 | |||

| fb479f8e84 | |||

| 7d37193f65 | |||

| 6bd62b95db | |||

| abf4a94a59 | |||

| 9337e3173b | |||

| 57fa4d4d84 | |||

| 9b5c59e677 | |||

| 71ce25a326 | |||

| f2f14207f5 | |||

| ac2ec0e73c | |||

| 040a76b93c | |||

| dab0621b97 | |||

| 675f9ecd69 | |||

| a6f92b9c74 | |||

| bfcc242d7e | |||

| 762027644f | |||

| 3c4ceb95fa | |||

| d6d8bceca0 | |||

| acaad2dc83 | |||

| 23c5801080 | |||

| 05a3bfbb53 | |||

| 8faaa3b0e3 | |||

| 4bcddf2a98 | |||

| b51ae5c2c4 | |||

| 5cd0061653 | |||

| 651c84325e | |||

| 89368529cb | |||

| f1f408284b | |||

| 7053932731 | |||

| b6c4956521 | |||

| 68a2557884 | |||

| c7ee7258fb | |||

| aaff2503fb | |||

| 00a1193bd3 | |||

| 2371daac1b | |||

| 4395db2b2d | |||

| fc8c61fa3f | |||

| aa30110343 | |||

| b671856341 | |||

| b801759cdf | |||

| 919064200b | |||

| 911b5656d7 | |||

| 48bbab33b4 | |||

| 3b2aafe004 | |||

| aa0b2d6d70 | |||

| 1a6f588d94 | |||

| 373ebf933f | |||

| 7fb47228f9 | |||

| 4fca5e1c06 | |||

| cb2d126c47 | |||

| 17404bf848 | |||

| 510eea0a8b | |||

| d16d1ca747 | |||

| 440e33b8b7 | |||

| 307029c37a | |||

| 60c8ea4454 | |||

| e4fe604a93 | |||

| 308187b2ed | |||

| 97f4077675 | |||

| 4960289ed1 | |||

| 82530cef4f | |||

| 08172098e2 | |||

| 56f54fb064 | |||

| 1bea7cde8c | |||

| 038847e665 | |||

| d233494a39 | |||

| 070ad5a023 | |||

| 757a4c6905 | |||

| dd61a4f5f4 | |||

| 69f9bbdc78 | |||

| e1dc4039fd | |||

| 7c07925527 | |||

| ee8c0dfed8 | |||

| ad4df93b04 | |||

| 9a600df969 | |||

| a0abeac3e2 | |||

| 12b8c36c5f | |||

| 7d4d50ee62 | |||

| 520db283cb | |||

| f3179b75d9 | |||

| 1bc8b9b9c7 | |||

| 584d109357 | |||

| 327c0e448b | |||

| 3182a45bbd | |||

| 4cd4a26f91 | |||

| 85856f2c53 | |||

| 8a09559364 | |||

| 5e2e1be779 | |||

| b1d344cb46 | |||

| 7245cbeb58 | |||

| 21395579ea | |||

| 08b64b4ff6 | |||

| 20571ec085 | |||

| e43dcc1c83 | |||

| 4610ff3e0c | |||

| c86d6254e0 | |||

| df331db5cc | |||

| baff0f415d | |||

| 499dc167a5 | |||

| 83f4a29a15 | |||

| 8f02adacf9 | |||

| e631d33944 | |||

| cbd92a69b3 | |||

| b7c047102d | |||

| d0bfd04602 | |||

| 101ab38c70 | |||

| 8632c4ff08 | |||

| 80556d0cbf | |||

| 253728545c | |||

| 49d9b1d7dc | |||

| 8574abe012 | |||

| 6b6f60d8e8 | |||

| 986e592d1e | |||

| 6ec3b171e1 | |||

| bcfb9862af | |||

| 85cf9f4eb5 | |||

| d2a996e845 | |||

| c863bde54a | |||

| e19f85f081 | |||

| 7b854d4041 | |||

| e122f73e6b | |||

| 474942eb6a | |||

| a5f031b046 | |||

| 66fddc07b9 | |||

| e56a07e909 | |||

| 64ea40b3f5 | |||

| 17fd6ef8e1 | |||

| e5e399dfbe | |||

| bcf037e8a4 | |||

| 7e678a38bd | |||

| 533aaac313 | |||

| 7f4f70cc85 | |||

| 4adb8a8796 | |||

| 48e1993b24 | |||

| 8a3375bb23 | |||

| 8bd54a7427 | |||

| fcbbf05933 | |||

| 9733087094 | |||

| da4e02199a | |||

| 09a16dea1e | |||

| 4c7adf0943 | |||

| 216a8eaa0a | |||

| 81f838489d | |||

| d6228b8dce | |||

| 7a2c2b9750 | |||

| d9cc911595 | |||

| 805e2fe222 | |||

| bbcb39b2cf | |||

| 9f9b97c69f | |||

| 908ff98613 | |||

| 37f74b4b56 | |||

| a1c0d7e489 | |||

| 3127f264b4 | |||

| 049bc57019 | |||

| 03ec38bb67 | |||

| 4fc1a55ff7 | |||

| f60815b813 | |||

| ca47c2308d | |||

| 146a959747 | |||

| 906184cd88 | |||

| a13e11d24d | |||

| d34432217f | |||

| f2e8f85198 | |||

| 05b08b6ab2 | |||

| 685d242c60 | |||

| 023c1dfbe3 | |||

| 9255fd2c34 | |||

| 784ad38c38 | |||

| 36def5c8b8 | |||

| 2c8dd6c9e7 | |||

| e8151535c1 | |||

| 744116079a | |||

| 791a969a10 | |||

| 46c5171d6a | |||

| fe641d01d4 | |||

| 4f03423333 | |||

| e812e9fe15 | |||

| a92ebad2f2 | |||

| 78a7e5cbcb | |||

| 5616206b48 | |||

| 165543d867 | |||

| e933f71bcd | |||

| 8c6cb8dc9c | |||

| 3e74625963 | |||

| a19e635ba3 | |||

| 6436cf6b62 | |||

| e1e8861a6e | |||

| 097027a3d4 | |||

| 37192c6333 | |||

| 57f4ca069b | |||

| bf6a6f7310 | |||

| fce10cea07 | |||

| b836f26226 | |||

| c3e08623c1 | |||

| af0dce46aa | |||

| be54a19207 | |||

| ce8d9af149 | |||

| 840d4e17f1 | |||

| 73ede90e69 | |||

| 65094ecf74 |

17

AUTHORS

Normal file

17

AUTHORS

Normal file

@ -0,0 +1,17 @@

|

||||

WakaTime is written and maintained by Alan Hamlett and

|

||||

various contributors:

|

||||

|

||||

|

||||

Development Lead

|

||||

----------------

|

||||

|

||||

- Alan Hamlett <alan.hamlett@gmail.com>

|

||||

|

||||

|

||||

Patches and Suggestions

|

||||

-----------------------

|

||||

|

||||

- Jimmy Selgen Nielsen <jimmy.selgen@gmail.com>

|

||||

- Patrik Kernstock <info@pkern.at>

|

||||

- Krishna Glick <krishnaglick@gmail.com>

|

||||

- Carlos Henrique Gandarez <gandarez@gmail.com>

|

||||

6

Default.sublime-commands

Normal file

6

Default.sublime-commands

Normal file

@ -0,0 +1,6 @@

|

||||

[

|

||||

{

|

||||

"caption": "WakaTime: Open Dashboard",

|

||||

"command": "wakatime_dashboard"

|

||||

}

|

||||

]

|

||||

1339

HISTORY.rst

Normal file

1339

HISTORY.rst

Normal file

File diff suppressed because it is too large

Load Diff

@ -1,5 +1,6 @@

|

||||

Copyright (c) 2013 Alan Hamlett https://wakati.me

|

||||

All rights reserved.

|

||||

BSD 3-Clause License

|

||||

|

||||

Copyright (c) 2014 Alan Hamlett.

|

||||

|

||||

Redistribution and use in source and binary forms, with or without

|

||||

modification, are permitted provided that the following conditions are met:

|

||||

@ -12,7 +13,7 @@ modification, are permitted provided that the following conditions are met:

|

||||

in the documentation and/or other materials provided

|

||||

with the distribution.

|

||||

|

||||

* Neither the names of Wakatime or Wakati.Me, nor the names of its

|

||||

* Neither the names of WakaTime, nor the names of its

|

||||

contributors may be used to endorse or promote products derived

|

||||

from this software without specific prior written permission.

|

||||

|

||||

@ -6,24 +6,37 @@

|

||||

"children":

|

||||

[

|

||||

{

|

||||

"caption": "WakaTime",

|

||||

"mnemonic": "W",

|

||||

"id": "wakatime-settings",

|

||||

"caption": "Package Settings",

|

||||

"mnemonic": "P",

|

||||

"id": "package-settings",

|

||||

"children":

|

||||

[

|

||||

{

|

||||

"command": "open_file", "args":

|

||||

{

|

||||

"file": "${packages}/WakaTime/WakaTime.sublime-settings"

|

||||

},

|

||||

"caption": "Settings – Default"

|

||||

},

|

||||

{

|

||||

"command": "open_file", "args":

|

||||

{

|

||||

"file": "${packages}/User/WakaTime.sublime-settings"

|

||||

},

|

||||

"caption": "Settings – User"

|

||||

"caption": "WakaTime",

|

||||

"mnemonic": "W",

|

||||

"id": "wakatime-settings",

|

||||

"children":

|

||||

[

|

||||

{

|

||||

"command": "open_file", "args":

|

||||

{

|

||||

"file": "${packages}/WakaTime/WakaTime.sublime-settings"

|

||||

},

|

||||

"caption": "Settings – Default"

|

||||

},

|

||||

{

|

||||

"command": "open_file", "args":

|

||||

{

|

||||

"file": "${packages}/User/WakaTime.sublime-settings"

|

||||

},

|

||||

"caption": "Settings – User"

|

||||

},

|

||||

{

|

||||

"command": "wakatime_dashboard",

|

||||

"args": {},

|

||||

"caption": "WakaTime Dashboard"

|

||||

}

|

||||

]

|

||||

}

|

||||

]

|

||||

}

|

||||

|

||||

59

README.md

59

README.md

@ -1,37 +1,56 @@

|

||||

sublime-wakatime

|

||||

================

|

||||

# sublime-wakatime

|

||||

|

||||

Automatic time tracking for Sublime Text 2 & 3.

|

||||

[](https://wakatime.com/badge/github/wakatime/sublime-wakatime)

|

||||

|

||||

Installation

|

||||

------------

|

||||

[WakaTime][wakatime] is an open source Sublime Text plugin for metrics, insights, and time tracking automatically generated from your programming activity.

|

||||

|

||||

Heads Up! For Sublime Text 2 on Windows & Linux, WakaTime depends on [Python](http://www.python.org/getit/) being installed to work correctly.

|

||||

|

||||

1. Get an api key from: https://wakati.me

|

||||

## Installation

|

||||

|

||||

2. Using [Sublime Package Control](http://wbond.net/sublime_packages/package_control):

|

||||

1. Install [Package Control](https://packagecontrol.io/installation).

|

||||

|

||||

a) Press `ctrl+shift+p`(Windows, Linux) or `cmd+shift+p`(OS X).

|

||||

2. In Sublime, press `ctrl+shift+p`(Windows, Linux) or `cmd+shift+p`(OS X).

|

||||

|

||||

b) Type `install`, then press `enter` with `Package Control: Install Package` selected.

|

||||

3. Type `install`, then press `enter` with `Package Control: Install Package` selected.

|

||||

|

||||

c) Type `wakatime`, then press `enter` with the `WakaTime` plugin selected.

|

||||

4. Type `wakatime`, then press `enter` with the `WakaTime` plugin selected.

|

||||

|

||||

3. You will see a prompt at the bottom asking for your [api key](https://www.wakati.me/#apikey). Enter your api key, then press `enter`.

|

||||

5. Enter your [api key](https://wakatime.com/settings#apikey), then press `enter`.

|

||||

|

||||

4. Use Sublime and your time will automatically be tracked for you.

|

||||

6. Use Sublime and your coding activity will be displayed on your [WakaTime dashboard](https://wakatime.com).

|

||||

|

||||

5. Visit https://wakati.me to see your logged time.

|

||||

|

||||

6. Consider installing [BIND9](https://help.ubuntu.com/community/BIND9ServerHowto#Caching_Server_configuration) to cache your repeated DNS requests: `sudo apt-get install bind9`

|

||||

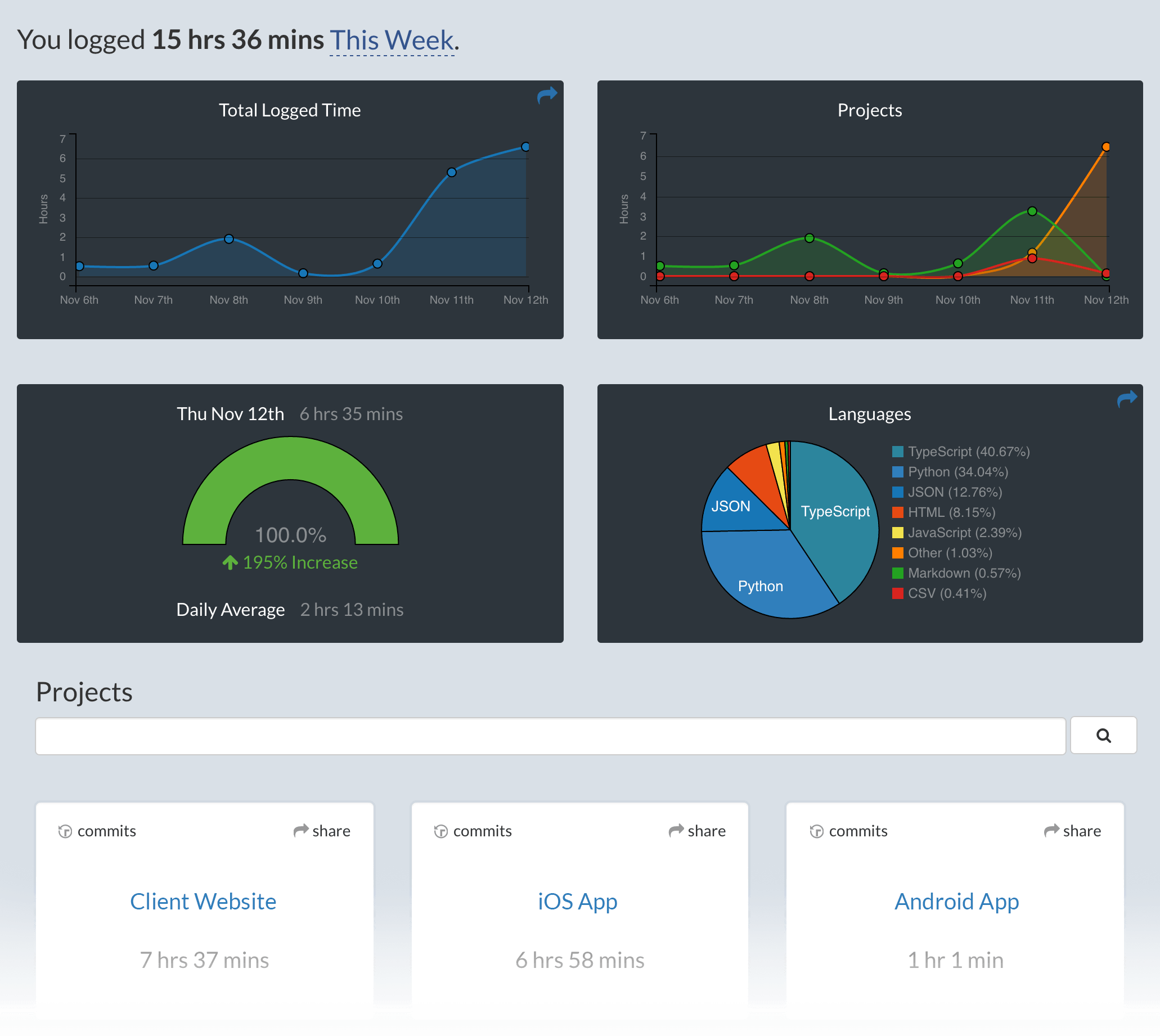

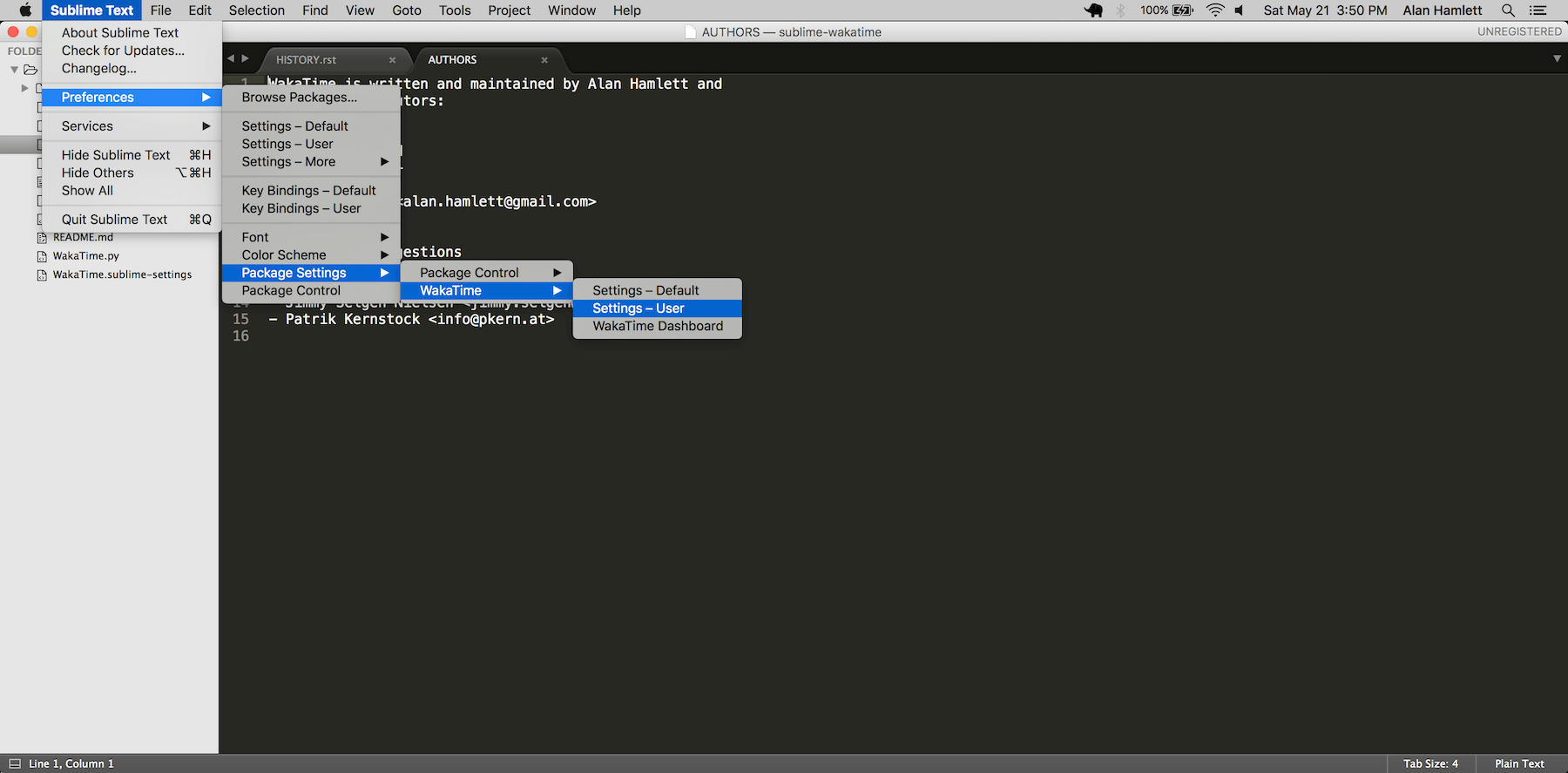

## Screen Shots

|

||||

|

||||

Screen Shots

|

||||

------------

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

## Unresponsive Plugin Warning

|

||||

|

||||

|

||||

In Sublime Text 2, if you get a warning message:

|

||||

|

||||

A plugin (WakaTime) may be making Sublime Text unresponsive by taking too long (0.017332s) in its on_modified callback.

|

||||

|

||||

To fix this, go to `Preferences → Settings - User` then add the following setting:

|

||||

|

||||

`"detect_slow_plugins": false`

|

||||

|

||||

|

||||

## Troubleshooting

|

||||

|

||||

First, turn on debug mode in your `WakaTime.sublime-settings` file.

|

||||

|

||||

|

||||

|

||||

Add the line: `"debug": true`

|

||||

|

||||

Then, open your Sublime Console with `View → Show Console` ( CTRL + \` ) to see the plugin executing the wakatime cli process when sending a heartbeat.

|

||||

Also, tail your `$HOME/.wakatime.log` file to debug wakatime cli problems.

|

||||

|

||||

The [How to Debug Plugins][how to debug] guide shows how to check when coding activity was last received from your editor using the [User Agents API][user agents api].

|

||||

For more general troubleshooting info, see the [wakatime-cli Troubleshooting Section][wakatime-cli-help].

|

||||

|

||||

[wakatime]: https://wakatime.com/sublime-text

|

||||

[wakatime-cli-help]: https://github.com/wakatime/wakatime#troubleshooting

|

||||

[how to debug]: https://wakatime.com/faq#debug-plugins

|

||||

[user agents api]: https://wakatime.com/developers#user_agents

|

||||

|

||||

939

WakaTime.py

939

WakaTime.py

File diff suppressed because it is too large

Load Diff

@ -3,10 +3,38 @@

|

||||

// This settings file will be overwritten when upgrading.

|

||||

|

||||

{

|

||||

// Your api key from https://www.wakati.me/#apikey

|

||||

// Your api key from https://wakatime.com/api-key

|

||||

// Set this in your User specific WakaTime.sublime-settings file.

|

||||

"api_key": "",

|

||||

|

||||

|

||||

// Debug mode. Set to true for verbose logging. Defaults to false.

|

||||

"debug": false

|

||||

"debug": false,

|

||||

|

||||

// Proxy with format https://user:pass@host:port or socks5://user:pass@host:port or domain\\user:pass.

|

||||

"proxy": "",

|

||||

|

||||

// Ignore files; Files (including absolute paths) that match one of these

|

||||

// POSIX regular expressions will not be logged.

|

||||

"ignore": ["^/tmp/", "^/etc/", "^/var/(?!www/).*", "COMMIT_EDITMSG$", "PULLREQ_EDITMSG$", "MERGE_MSG$", "TAG_EDITMSG$"],

|

||||

|

||||

// Include files; Files (including absolute paths) that match one of these

|

||||

// POSIX regular expressions will bypass your ignore setting.

|

||||

"include": [".*"],

|

||||

|

||||

// Status bar for surfacing errors and displaying today's coding time. Set

|

||||

// to false to hide. Defaults to true.

|

||||

"status_bar_enabled": true,

|

||||

|

||||

// Show today's coding activity in WakaTime status bar item.

|

||||

// Defaults to true.

|

||||

"status_bar_coding_activity": true,

|

||||

|

||||

// Obfuscate file paths when sending to API. Your dashboard will no longer display coding activity per file.

|

||||

"hidefilenames": false,

|

||||

|

||||

// Python binary location. Uses python from your PATH by default.

|

||||

"python_binary": "",

|

||||

|

||||

// Use standalone compiled Python wakatime-cli (Will not work on ARM Macs)

|

||||

"standalone": false

|

||||

}

|

||||

|

||||

35

packages/wakatime/.gitignore

vendored

35

packages/wakatime/.gitignore

vendored

@ -1,35 +0,0 @@

|

||||

*.py[cod]

|

||||

|

||||

# C extensions

|

||||

*.so

|

||||

|

||||

# Packages

|

||||

*.egg

|

||||

*.egg-info

|

||||

dist

|

||||

build

|

||||

eggs

|

||||

parts

|

||||

bin

|

||||

var

|

||||

sdist

|

||||

develop-eggs

|

||||

.installed.cfg

|

||||

lib

|

||||

lib64

|

||||

|

||||

# Installer logs

|

||||

pip-log.txt

|

||||

|

||||

# Unit test / coverage reports

|

||||

.coverage

|

||||

.tox

|

||||

nosetests.xml

|

||||

|

||||

# Translations

|

||||

*.mo

|

||||

|

||||

# Mr Developer

|

||||

.mr.developer.cfg

|

||||

.project

|

||||

.pydevproject

|

||||

@ -1,65 +0,0 @@

|

||||

|

||||

History

|

||||

-------

|

||||

|

||||

|

||||

0.4.5 (2013-09-07)

|

||||

++++++++++++++++++

|

||||

|

||||

- Fixed relative import error by adding packages directory to sys path

|

||||

|

||||

|

||||

0.4.4 (2013-09-06)

|

||||

++++++++++++++++++

|

||||

|

||||

- Using urllib2 again because of intermittent problems sending json with requests library

|

||||

|

||||

|

||||

0.4.3 (2013-09-04)

|

||||

++++++++++++++++++

|

||||

|

||||

- Encoding json as utf-8 before making request

|

||||

|

||||

|

||||

0.4.2 (2013-09-04)

|

||||

++++++++++++++++++

|

||||

|

||||

- Using requests package v1.2.3 from pypi

|

||||

|

||||

|

||||

0.4.1 (2013-08-25)

|

||||

++++++++++++++++++

|

||||

|

||||

- Fix bug causing requests library to omit POST content

|

||||

|

||||

|

||||

0.4.0 (2013-08-15)

|

||||

++++++++++++++++++

|

||||

|

||||

- Sending single branch instead of multiple tags

|

||||

|

||||

|

||||

0.3.1 (2013-08-08)

|

||||

++++++++++++++++++

|

||||

|

||||

- Using requests module instead of urllib2 to verify SSL certs

|

||||

|

||||

|

||||

0.3.0 (2013-08-08)

|

||||

++++++++++++++++++

|

||||

|

||||

- Allow importing directly from Python plugins

|

||||

|

||||

|

||||

0.1.1 (2013-07-07)

|

||||

++++++++++++++++++

|

||||

|

||||

- Refactored

|

||||

- Simplified action events schema

|

||||

|

||||

|

||||

0.0.1 (2013-07-05)

|

||||

++++++++++++++++++

|

||||

|

||||

- Birth

|

||||

|

||||

@ -1,2 +0,0 @@

|

||||

include README.rst LICENSE HISTORY.rst

|

||||

recursive-include wakatime *.py

|

||||

@ -1,12 +0,0 @@

|

||||

WakaTime

|

||||

========

|

||||

|

||||

Automatic time tracking for your text editor. This is the command line

|

||||

event appender for the WakaTime api. You shouldn't need to directly

|

||||

use this outside of a text editor plugin.

|

||||

|

||||

|

||||

Installation

|

||||

------------

|

||||

|

||||

https://www.wakati.me/help/plugins/installing-plugins

|

||||

@ -1,39 +0,0 @@

|

||||

from setuptools import setup

|

||||

|

||||

from wakatime.__init__ import __version__ as VERSION

|

||||

|

||||

|

||||

packages = [

|

||||

'wakatime',

|

||||

]

|

||||

|

||||

setup(

|

||||

name='wakatime',

|

||||

version=VERSION,

|

||||

license='BSD 3 Clause',

|

||||

description=' '.join([

|

||||

'Action event appender for Wakati.Me, a time',

|

||||

'tracking api for text editors.',

|

||||

]),

|

||||

long_description=open('README.rst').read(),

|

||||

author='Alan Hamlett',

|

||||

author_email='alan.hamlett@gmail.com',

|

||||

url='https://github.com/wakatime/wakatime',

|

||||

packages=packages,

|

||||

package_dir={'wakatime': 'wakatime'},

|

||||

include_package_data=True,

|

||||

zip_safe=False,

|

||||

platforms='any',

|

||||

entry_points={

|

||||

'console_scripts': ['wakatime = wakatime.__init__:main'],

|

||||

},

|

||||

classifiers=(

|

||||

'Development Status :: 3 - Alpha',

|

||||

'Environment :: Console',

|

||||

'Intended Audience :: Developers',

|

||||

'License :: OSI Approved :: BSD License',

|

||||

'Natural Language :: English',

|

||||

'Programming Language :: Python',

|

||||

'Topic :: Text Editors',

|

||||

),

|

||||

)

|

||||

@ -1,21 +0,0 @@

|

||||

#!/usr/bin/env python

|

||||

# -*- coding: utf-8 -*-

|

||||

"""

|

||||

wakatime-cli

|

||||

~~~~~~~~~~~~

|

||||

|

||||

Action event appender for Wakati.Me, auto time tracking for text editors.

|

||||

|

||||

:copyright: (c) 2013 Alan Hamlett.

|

||||

:license: BSD, see LICENSE for more details.

|

||||

"""

|

||||

|

||||

from __future__ import print_function

|

||||

|

||||

import os

|

||||

import sys

|

||||

sys.path.insert(0, os.path.dirname(os.path.abspath(__file__)))

|

||||

import wakatime

|

||||

|

||||

if __name__ == '__main__':

|

||||

sys.exit(wakatime.main(sys.argv))

|

||||

@ -1,200 +0,0 @@

|

||||

# -*- coding: utf-8 -*-

|

||||

"""

|

||||

wakatime

|

||||

~~~~~~~~

|

||||

|

||||

Action event appender for Wakati.Me, auto time tracking for text editors.

|

||||

|

||||

:copyright: (c) 2013 Alan Hamlett.

|

||||

:license: BSD, see LICENSE for more details.

|

||||

"""

|

||||

|

||||

from __future__ import print_function

|

||||

|

||||

__title__ = 'wakatime'

|

||||

__version__ = '0.4.5'

|

||||

__author__ = 'Alan Hamlett'

|

||||

__license__ = 'BSD'

|

||||

__copyright__ = 'Copyright 2013 Alan Hamlett'

|

||||

|

||||

|

||||

import base64

|

||||

import logging

|

||||

import os

|

||||

import platform

|

||||

import re

|

||||

import sys

|

||||

import time

|

||||

import traceback

|

||||

|

||||

sys.path.insert(0, os.path.dirname(os.path.abspath(__file__)))

|

||||

sys.path.insert(0, os.path.join(os.path.dirname(os.path.abspath(__file__)), 'packages'))

|

||||

from .log import setup_logging

|

||||

from .project import find_project

|

||||

from .packages import argparse

|

||||

from .packages import simplejson as json

|

||||

try:

|

||||

from urllib2 import HTTPError, Request, urlopen

|

||||

except ImportError:

|

||||

from urllib.error import HTTPError

|

||||

from urllib.request import Request, urlopen

|

||||

|

||||

|

||||

log = logging.getLogger(__name__)

|

||||

|

||||

|

||||

class FileAction(argparse.Action):

|

||||

|

||||

def __call__(self, parser, namespace, values, option_string=None):

|

||||

values = os.path.realpath(values)

|

||||

setattr(namespace, self.dest, values)

|

||||

|

||||

|

||||

def parseArguments(argv):

|

||||

try:

|

||||

sys.argv

|

||||

except AttributeError:

|

||||

sys.argv = argv

|

||||

parser = argparse.ArgumentParser(

|

||||

description='Wakati.Me event api appender')

|

||||

parser.add_argument('--file', dest='targetFile', metavar='file',

|

||||

action=FileAction, required=True,

|

||||

help='absolute path to file for current action')

|

||||

parser.add_argument('--time', dest='timestamp', metavar='time',

|

||||

type=float,

|

||||

help='optional floating-point unix epoch timestamp; '+

|

||||

'uses current time by default')

|

||||

parser.add_argument('--endtime', dest='endtime',

|

||||

help='optional end timestamp turning this action into '+

|

||||

'a duration; if a non-duration action occurs within a '+

|

||||

'duration, the duration is ignored')

|

||||

parser.add_argument('--write', dest='isWrite',

|

||||

action='store_true',

|

||||

help='note action was triggered from writing to a file')

|

||||

parser.add_argument('--plugin', dest='plugin',

|

||||

help='optional text editor plugin name and version '+

|

||||

'for User-Agent header')

|

||||

parser.add_argument('--key', dest='key',

|

||||

help='your wakati.me api key; uses api_key from '+

|

||||

'~/.wakatime.conf by default')

|

||||

parser.add_argument('--logfile', dest='logfile',

|

||||

help='defaults to ~/.wakatime.log')

|

||||

parser.add_argument('--config', dest='config',

|

||||

help='defaults to ~/.wakatime.conf')

|

||||

parser.add_argument('--verbose', dest='verbose', action='store_true',

|

||||

help='turns on debug messages in log file')

|

||||

parser.add_argument('--version', action='version', version=__version__)

|

||||

args = parser.parse_args(args=argv[1:])

|

||||

if not args.timestamp:

|

||||

args.timestamp = time.time()

|

||||

if not args.key:

|

||||

default_key = get_api_key(args.config)

|

||||

if default_key:

|

||||

args.key = default_key

|

||||

else:

|

||||

parser.error('Missing api key')

|

||||

return args

|

||||

|

||||

|

||||

def get_api_key(configFile):

|

||||

if not configFile:

|

||||

configFile = os.path.join(os.path.expanduser('~'), '.wakatime.conf')

|

||||

api_key = None

|

||||

try:

|

||||

cf = open(configFile)

|

||||

for line in cf:

|

||||

line = line.split('=', 1)

|

||||

if line[0] == 'api_key':

|

||||

api_key = line[1].strip()

|

||||

cf.close()

|

||||

except IOError:

|

||||

print('Error: Could not read from config file.')

|

||||

return api_key

|

||||

|

||||

|

||||

def get_user_agent(plugin):

|

||||

ver = sys.version_info

|

||||

python_version = '%d.%d.%d.%s.%d' % (ver[0], ver[1], ver[2], ver[3], ver[4])

|

||||

user_agent = 'wakatime/%s (%s) Python%s' % (__version__,

|

||||

platform.platform(), python_version)

|

||||

if plugin:

|

||||

user_agent = user_agent+' '+plugin

|

||||

return user_agent

|

||||

|

||||

|

||||

def send_action(project=None, branch=None, key=None, targetFile=None,

|

||||

timestamp=None, endtime=None, isWrite=None, plugin=None, **kwargs):

|

||||

url = 'https://www.wakati.me/api/v1/actions'

|

||||

log.debug('Sending action to api at %s' % url)

|

||||

data = {

|

||||

'time': timestamp,

|

||||

'file': targetFile,

|

||||

}

|

||||

if endtime:

|

||||

data['endtime'] = endtime

|

||||

if isWrite:

|

||||

data['is_write'] = isWrite

|

||||

if project:

|

||||

data['project'] = project

|

||||

if branch:

|

||||

data['branch'] = branch

|

||||

log.debug(data)

|

||||

|

||||

# setup api request

|

||||

request = Request(url=url, data=str.encode(json.dumps(data)))

|

||||

request.add_header('User-Agent', get_user_agent(plugin))

|

||||

request.add_header('Content-Type', 'application/json')

|

||||

auth = 'Basic %s' % bytes.decode(base64.b64encode(str.encode(key)))

|

||||

request.add_header('Authorization', auth)

|

||||

|

||||

# log time to api

|

||||

response = None

|

||||

try:

|

||||

response = urlopen(request)

|

||||

except HTTPError as exc:

|

||||

exception_data = {

|

||||

'response_code': exc.getcode(),

|

||||

sys.exc_info()[0].__name__: str(sys.exc_info()[1]),

|

||||

}

|

||||

if log.isEnabledFor(logging.DEBUG):

|

||||

exception_data['traceback'] = traceback.format_exc()

|

||||

log.error(exception_data)

|

||||

except:

|

||||

exception_data = {

|

||||

sys.exc_info()[0].__name__: str(sys.exc_info()[1]),

|

||||

}

|

||||

if log.isEnabledFor(logging.DEBUG):

|

||||

exception_data['traceback'] = traceback.format_exc()

|

||||

log.error(exception_data)

|

||||

else:

|

||||

if response.getcode() == 201:

|

||||

log.debug({

|

||||

'response_code': response.getcode(),

|

||||

})

|

||||

return True

|

||||

log.error({

|

||||

'response_code': response.getcode(),

|

||||

'response_content': response.read(),

|

||||

})

|

||||

return False

|

||||

|

||||

|

||||

def main(argv=None):

|

||||

if not argv:

|

||||

argv = sys.argv

|

||||

args = parseArguments(argv)

|

||||

setup_logging(args, __version__)

|

||||

if os.path.isfile(args.targetFile):

|

||||

branch = None

|

||||

name = None

|

||||

project = find_project(args.targetFile)

|

||||

if project:

|

||||

branch = project.branch()

|

||||

name = project.name()

|

||||

if send_action(project=name, branch=branch, **vars(args)):

|

||||

return 0

|

||||

return 102

|

||||

else:

|

||||

log.debug('File does not exist; ignoring this action.')

|

||||

return 101

|

||||

|

||||

@ -1,92 +0,0 @@

|

||||

# -*- coding: utf-8 -*-

|

||||

"""

|

||||

wakatime.log

|

||||

~~~~~~~~~~~~

|

||||

|

||||

Provides the configured logger for writing JSON to the log file.

|

||||

|

||||

:copyright: (c) 2013 Alan Hamlett.

|

||||

:license: BSD, see LICENSE for more details.

|

||||

"""

|

||||

|

||||

import logging

|

||||

import os

|

||||

|

||||

from .packages import simplejson as json

|

||||

try:

|

||||

from collections import OrderedDict

|

||||

except ImportError:

|

||||

from .packages.ordereddict import OrderedDict

|

||||

|

||||

|

||||

class CustomEncoder(json.JSONEncoder):

|

||||

|

||||

def default(self, obj):

|

||||

if isinstance(obj, bytes):

|

||||

obj = bytes.decode(obj)

|

||||

return json.dumps(obj)

|

||||

return super(CustomEncoder, self).default(obj)

|

||||

|

||||

|

||||

class JsonFormatter(logging.Formatter):

|

||||

|

||||

def setup(self, timestamp, endtime, isWrite, targetFile, version, plugin):

|

||||

self.timestamp = timestamp

|

||||

self.endtime = endtime

|

||||

self.isWrite = isWrite

|

||||

self.targetFile = targetFile

|

||||

self.version = version

|

||||

self.plugin = plugin

|

||||

|

||||

def format(self, record):

|

||||

data = OrderedDict([

|

||||

('now', self.formatTime(record, self.datefmt)),

|

||||

('version', self.version),

|

||||

('plugin', self.plugin),

|

||||

('time', self.timestamp),

|

||||

('endtime', self.endtime),

|

||||

('isWrite', self.isWrite),

|

||||

('file', self.targetFile),

|

||||

('level', record.levelname),

|

||||

('message', record.msg),

|

||||

])

|

||||

if not self.endtime:

|

||||

del data['endtime']

|

||||

if not self.plugin:

|

||||

del data['plugin']

|

||||

if not self.isWrite:

|

||||

del data['isWrite']

|

||||

return CustomEncoder().encode(data)

|

||||

|

||||

def formatException(self, exc_info):

|

||||

return exec_info[2].format_exc()

|

||||

|

||||

|

||||

def set_log_level(logger, args):

|

||||

level = logging.WARN

|

||||

if args.verbose:

|

||||

level = logging.DEBUG

|

||||

logger.setLevel(level)

|

||||

|

||||

|

||||

def setup_logging(args, version):

|

||||

logger = logging.getLogger()

|

||||

set_log_level(logger, args)

|

||||

if len(logger.handlers) > 0:

|

||||

return logger

|

||||

logfile = args.logfile

|

||||

if not logfile:

|

||||

logfile = '~/.wakatime.log'

|

||||

handler = logging.FileHandler(os.path.expanduser(logfile))

|

||||

formatter = JsonFormatter(datefmt='%a %b %d %H:%M:%S %Z %Y')

|

||||

formatter.setup(

|

||||

timestamp=args.timestamp,

|

||||

endtime=args.endtime,

|

||||

isWrite=args.isWrite,

|

||||

targetFile=args.targetFile,

|

||||

version=version,

|

||||

plugin=args.plugin,

|

||||

)

|

||||

handler.setFormatter(formatter)

|

||||

logger.addHandler(handler)

|

||||

return logger

|

||||

File diff suppressed because it is too large

Load Diff

@ -1,127 +0,0 @@

|

||||

# Copyright (c) 2009 Raymond Hettinger

|

||||

#

|

||||

# Permission is hereby granted, free of charge, to any person

|

||||

# obtaining a copy of this software and associated documentation files

|

||||

# (the "Software"), to deal in the Software without restriction,

|

||||

# including without limitation the rights to use, copy, modify, merge,

|

||||

# publish, distribute, sublicense, and/or sell copies of the Software,

|

||||

# and to permit persons to whom the Software is furnished to do so,

|

||||

# subject to the following conditions:

|

||||

#

|

||||

# The above copyright notice and this permission notice shall be

|

||||

# included in all copies or substantial portions of the Software.

|

||||

#

|

||||

# THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND,

|

||||

# EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES

|

||||

# OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND

|

||||

# NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT

|

||||

# HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY,

|

||||

# WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

|

||||

# FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR

|

||||

# OTHER DEALINGS IN THE SOFTWARE.

|

||||

|

||||

from UserDict import DictMixin

|

||||

|

||||

class OrderedDict(dict, DictMixin):

|

||||

|

||||

def __init__(self, *args, **kwds):

|

||||

if len(args) > 1:

|

||||

raise TypeError('expected at most 1 arguments, got %d' % len(args))

|

||||

try:

|

||||

self.__end

|

||||

except AttributeError:

|

||||

self.clear()

|

||||

self.update(*args, **kwds)

|

||||

|

||||

def clear(self):

|

||||

self.__end = end = []

|

||||

end += [None, end, end] # sentinel node for doubly linked list

|

||||

self.__map = {} # key --> [key, prev, next]

|

||||

dict.clear(self)

|

||||

|

||||

def __setitem__(self, key, value):

|

||||

if key not in self:

|

||||

end = self.__end

|

||||

curr = end[1]

|

||||

curr[2] = end[1] = self.__map[key] = [key, curr, end]

|

||||

dict.__setitem__(self, key, value)

|

||||

|

||||

def __delitem__(self, key):

|

||||

dict.__delitem__(self, key)

|

||||

key, prev, next = self.__map.pop(key)

|

||||

prev[2] = next

|

||||

next[1] = prev

|

||||

|

||||

def __iter__(self):

|

||||

end = self.__end

|

||||

curr = end[2]

|

||||

while curr is not end:

|

||||

yield curr[0]

|

||||

curr = curr[2]

|

||||

|

||||

def __reversed__(self):

|

||||

end = self.__end

|

||||

curr = end[1]

|

||||

while curr is not end:

|

||||

yield curr[0]

|

||||

curr = curr[1]

|

||||

|

||||

def popitem(self, last=True):

|

||||

if not self:

|

||||

raise KeyError('dictionary is empty')

|

||||

if last:

|

||||

key = reversed(self).next()

|

||||

else:

|

||||

key = iter(self).next()

|

||||

value = self.pop(key)

|

||||

return key, value

|

||||

|

||||

def __reduce__(self):

|

||||

items = [[k, self[k]] for k in self]

|

||||

tmp = self.__map, self.__end

|

||||

del self.__map, self.__end

|

||||

inst_dict = vars(self).copy()

|

||||

self.__map, self.__end = tmp

|

||||

if inst_dict:

|

||||

return (self.__class__, (items,), inst_dict)

|

||||

return self.__class__, (items,)

|

||||

|

||||

def keys(self):

|

||||

return list(self)

|

||||

|

||||

setdefault = DictMixin.setdefault

|

||||

update = DictMixin.update

|

||||

pop = DictMixin.pop

|

||||

values = DictMixin.values

|

||||

items = DictMixin.items

|

||||

iterkeys = DictMixin.iterkeys

|

||||

itervalues = DictMixin.itervalues

|

||||

iteritems = DictMixin.iteritems

|

||||

|

||||

def __repr__(self):

|

||||

if not self:

|

||||

return '%s()' % (self.__class__.__name__,)

|

||||

return '%s(%r)' % (self.__class__.__name__, self.items())

|

||||

|

||||

def copy(self):

|

||||

return self.__class__(self)

|

||||

|

||||

@classmethod

|

||||

def fromkeys(cls, iterable, value=None):

|

||||

d = cls()

|

||||

for key in iterable:

|

||||

d[key] = value

|

||||

return d

|

||||

|

||||

def __eq__(self, other):

|

||||

if isinstance(other, OrderedDict):

|

||||

if len(self) != len(other):

|

||||

return False

|

||||

for p, q in zip(self.items(), other.items()):

|

||||

if p != q:

|

||||

return False

|

||||

return True

|

||||

return dict.__eq__(self, other)

|

||||

|

||||

def __ne__(self, other):

|

||||

return not self == other

|

||||

@ -1,547 +0,0 @@

|

||||

r"""JSON (JavaScript Object Notation) <http://json.org> is a subset of

|

||||

JavaScript syntax (ECMA-262 3rd edition) used as a lightweight data

|

||||

interchange format.

|

||||

|

||||

:mod:`simplejson` exposes an API familiar to users of the standard library

|

||||

:mod:`marshal` and :mod:`pickle` modules. It is the externally maintained

|

||||

version of the :mod:`json` library contained in Python 2.6, but maintains

|

||||

compatibility with Python 2.4 and Python 2.5 and (currently) has

|

||||

significant performance advantages, even without using the optional C

|

||||

extension for speedups.

|

||||

|

||||

Encoding basic Python object hierarchies::

|

||||

|

||||

>>> import simplejson as json

|

||||

>>> json.dumps(['foo', {'bar': ('baz', None, 1.0, 2)}])

|

||||

'["foo", {"bar": ["baz", null, 1.0, 2]}]'

|

||||

>>> print(json.dumps("\"foo\bar"))

|

||||

"\"foo\bar"

|

||||

>>> print(json.dumps(u'\u1234'))

|

||||

"\u1234"

|

||||

>>> print(json.dumps('\\'))

|

||||

"\\"

|

||||

>>> print(json.dumps({"c": 0, "b": 0, "a": 0}, sort_keys=True))

|

||||

{"a": 0, "b": 0, "c": 0}

|

||||

>>> from simplejson.compat import StringIO

|

||||

>>> io = StringIO()

|

||||

>>> json.dump(['streaming API'], io)

|

||||

>>> io.getvalue()

|

||||

'["streaming API"]'

|

||||

|

||||

Compact encoding::

|

||||

|

||||

>>> import simplejson as json

|

||||

>>> obj = [1,2,3,{'4': 5, '6': 7}]

|

||||

>>> json.dumps(obj, separators=(',',':'), sort_keys=True)

|

||||

'[1,2,3,{"4":5,"6":7}]'

|

||||

|

||||

Pretty printing::

|

||||

|

||||

>>> import simplejson as json

|

||||

>>> print(json.dumps({'4': 5, '6': 7}, sort_keys=True, indent=' '))

|

||||

{

|

||||

"4": 5,

|

||||

"6": 7

|

||||

}

|

||||

|

||||

Decoding JSON::

|

||||

|

||||

>>> import simplejson as json

|

||||

>>> obj = [u'foo', {u'bar': [u'baz', None, 1.0, 2]}]

|

||||

>>> json.loads('["foo", {"bar":["baz", null, 1.0, 2]}]') == obj

|

||||

True

|

||||

>>> json.loads('"\\"foo\\bar"') == u'"foo\x08ar'

|

||||

True

|

||||

>>> from simplejson.compat import StringIO

|

||||

>>> io = StringIO('["streaming API"]')

|

||||

>>> json.load(io)[0] == 'streaming API'

|

||||

True

|

||||

|

||||

Specializing JSON object decoding::

|

||||

|

||||

>>> import simplejson as json

|

||||

>>> def as_complex(dct):

|

||||

... if '__complex__' in dct:

|

||||

... return complex(dct['real'], dct['imag'])

|

||||

... return dct

|

||||

...

|

||||

>>> json.loads('{"__complex__": true, "real": 1, "imag": 2}',

|

||||

... object_hook=as_complex)

|

||||

(1+2j)

|

||||

>>> from decimal import Decimal

|

||||

>>> json.loads('1.1', parse_float=Decimal) == Decimal('1.1')

|

||||

True

|

||||

|

||||

Specializing JSON object encoding::

|

||||

|

||||

>>> import simplejson as json

|

||||

>>> def encode_complex(obj):

|

||||

... if isinstance(obj, complex):

|

||||

... return [obj.real, obj.imag]

|

||||

... raise TypeError(repr(o) + " is not JSON serializable")

|

||||

...

|

||||

>>> json.dumps(2 + 1j, default=encode_complex)

|

||||

'[2.0, 1.0]'

|

||||

>>> json.JSONEncoder(default=encode_complex).encode(2 + 1j)

|

||||

'[2.0, 1.0]'

|

||||

>>> ''.join(json.JSONEncoder(default=encode_complex).iterencode(2 + 1j))

|

||||

'[2.0, 1.0]'

|

||||

|

||||

|

||||

Using simplejson.tool from the shell to validate and pretty-print::

|

||||

|

||||

$ echo '{"json":"obj"}' | python -m simplejson.tool

|

||||

{

|

||||

"json": "obj"

|

||||

}

|

||||

$ echo '{ 1.2:3.4}' | python -m simplejson.tool

|

||||

Expecting property name: line 1 column 3 (char 2)

|

||||

"""

|

||||

from __future__ import absolute_import

|

||||

__version__ = '3.3.0'

|

||||

__all__ = [

|

||||

'dump', 'dumps', 'load', 'loads',

|

||||

'JSONDecoder', 'JSONDecodeError', 'JSONEncoder',

|

||||

'OrderedDict', 'simple_first',

|

||||

]

|

||||

|

||||

__author__ = 'Bob Ippolito <bob@redivi.com>'

|

||||

|

||||

from decimal import Decimal

|

||||

|

||||

from .scanner import JSONDecodeError

|

||||

from .decoder import JSONDecoder

|

||||

from .encoder import JSONEncoder, JSONEncoderForHTML

|

||||

def _import_OrderedDict():

|

||||

import collections

|

||||

try:

|

||||

return collections.OrderedDict

|

||||

except AttributeError:

|

||||

from . import ordered_dict

|

||||

return ordered_dict.OrderedDict

|

||||

OrderedDict = _import_OrderedDict()

|

||||

|

||||

def _import_c_make_encoder():

|

||||

try:

|

||||

from ._speedups import make_encoder

|

||||

return make_encoder

|

||||

except ImportError:

|

||||

return None

|

||||

|

||||

_default_encoder = JSONEncoder(

|

||||

skipkeys=False,

|

||||

ensure_ascii=True,

|

||||

check_circular=True,

|

||||

allow_nan=True,

|

||||

indent=None,

|

||||

separators=None,

|

||||

encoding='utf-8',

|

||||

default=None,

|

||||

use_decimal=True,

|

||||

namedtuple_as_object=True,

|

||||

tuple_as_array=True,

|

||||

bigint_as_string=False,

|

||||

item_sort_key=None,

|

||||

for_json=False,

|

||||

ignore_nan=False,

|

||||

)

|

||||

|

||||

def dump(obj, fp, skipkeys=False, ensure_ascii=True, check_circular=True,

|

||||

allow_nan=True, cls=None, indent=None, separators=None,

|

||||

encoding='utf-8', default=None, use_decimal=True,

|

||||

namedtuple_as_object=True, tuple_as_array=True,

|

||||

bigint_as_string=False, sort_keys=False, item_sort_key=None,

|

||||

for_json=False, ignore_nan=False, **kw):

|

||||

"""Serialize ``obj`` as a JSON formatted stream to ``fp`` (a

|

||||

``.write()``-supporting file-like object).

|

||||

|

||||

If *skipkeys* is true then ``dict`` keys that are not basic types

|

||||

(``str``, ``unicode``, ``int``, ``long``, ``float``, ``bool``, ``None``)

|

||||

will be skipped instead of raising a ``TypeError``.

|

||||

|

||||

If *ensure_ascii* is false, then the some chunks written to ``fp``

|

||||

may be ``unicode`` instances, subject to normal Python ``str`` to

|

||||

``unicode`` coercion rules. Unless ``fp.write()`` explicitly

|

||||

understands ``unicode`` (as in ``codecs.getwriter()``) this is likely

|

||||

to cause an error.

|

||||

|

||||

If *check_circular* is false, then the circular reference check

|

||||

for container types will be skipped and a circular reference will

|

||||

result in an ``OverflowError`` (or worse).

|

||||

|

||||

If *allow_nan* is false, then it will be a ``ValueError`` to

|

||||

serialize out of range ``float`` values (``nan``, ``inf``, ``-inf``)

|

||||

in strict compliance of the original JSON specification, instead of using

|

||||

the JavaScript equivalents (``NaN``, ``Infinity``, ``-Infinity``). See

|

||||

*ignore_nan* for ECMA-262 compliant behavior.

|

||||

|

||||

If *indent* is a string, then JSON array elements and object members

|

||||

will be pretty-printed with a newline followed by that string repeated

|

||||

for each level of nesting. ``None`` (the default) selects the most compact

|

||||

representation without any newlines. For backwards compatibility with

|

||||

versions of simplejson earlier than 2.1.0, an integer is also accepted

|

||||

and is converted to a string with that many spaces.

|

||||

|

||||

If specified, *separators* should be an

|

||||

``(item_separator, key_separator)`` tuple. The default is ``(', ', ': ')``

|

||||

if *indent* is ``None`` and ``(',', ': ')`` otherwise. To get the most

|

||||

compact JSON representation, you should specify ``(',', ':')`` to eliminate

|

||||

whitespace.

|

||||

|

||||

*encoding* is the character encoding for str instances, default is UTF-8.

|

||||

|

||||

*default(obj)* is a function that should return a serializable version

|

||||

of obj or raise ``TypeError``. The default simply raises ``TypeError``.

|

||||

|

||||

If *use_decimal* is true (default: ``True``) then decimal.Decimal

|

||||

will be natively serialized to JSON with full precision.

|

||||

|

||||

If *namedtuple_as_object* is true (default: ``True``),

|

||||

:class:`tuple` subclasses with ``_asdict()`` methods will be encoded

|

||||

as JSON objects.

|

||||

|

||||

If *tuple_as_array* is true (default: ``True``),

|

||||

:class:`tuple` (and subclasses) will be encoded as JSON arrays.

|

||||

|

||||

If *bigint_as_string* is true (default: ``False``), ints 2**53 and higher

|

||||

or lower than -2**53 will be encoded as strings. This is to avoid the

|

||||

rounding that happens in Javascript otherwise. Note that this is still a

|

||||

lossy operation that will not round-trip correctly and should be used

|

||||

sparingly.

|

||||

|

||||

If specified, *item_sort_key* is a callable used to sort the items in

|

||||

each dictionary. This is useful if you want to sort items other than

|

||||

in alphabetical order by key. This option takes precedence over

|

||||

*sort_keys*.

|

||||

|

||||

If *sort_keys* is true (default: ``False``), the output of dictionaries

|

||||

will be sorted by item.

|

||||

|

||||

If *for_json* is true (default: ``False``), objects with a ``for_json()``

|

||||

method will use the return value of that method for encoding as JSON

|

||||

instead of the object.

|

||||

|

||||

If *ignore_nan* is true (default: ``False``), then out of range

|

||||

:class:`float` values (``nan``, ``inf``, ``-inf``) will be serialized as

|

||||

``null`` in compliance with the ECMA-262 specification. If true, this will

|

||||

override *allow_nan*.

|

||||

|

||||

To use a custom ``JSONEncoder`` subclass (e.g. one that overrides the

|

||||

``.default()`` method to serialize additional types), specify it with

|

||||

the ``cls`` kwarg. NOTE: You should use *default* or *for_json* instead

|

||||

of subclassing whenever possible.

|

||||

|

||||

"""

|

||||

# cached encoder

|

||||

if (not skipkeys and ensure_ascii and

|

||||

check_circular and allow_nan and

|

||||

cls is None and indent is None and separators is None and

|

||||

encoding == 'utf-8' and default is None and use_decimal

|

||||

and namedtuple_as_object and tuple_as_array

|

||||

and not bigint_as_string and not item_sort_key

|

||||

and not for_json and not ignore_nan and not kw):

|

||||

iterable = _default_encoder.iterencode(obj)

|

||||

else:

|

||||

if cls is None:

|

||||

cls = JSONEncoder

|

||||

iterable = cls(skipkeys=skipkeys, ensure_ascii=ensure_ascii,

|

||||

check_circular=check_circular, allow_nan=allow_nan, indent=indent,

|

||||

separators=separators, encoding=encoding,

|

||||

default=default, use_decimal=use_decimal,

|

||||

namedtuple_as_object=namedtuple_as_object,

|

||||

tuple_as_array=tuple_as_array,

|

||||

bigint_as_string=bigint_as_string,

|

||||

sort_keys=sort_keys,

|

||||

item_sort_key=item_sort_key,

|

||||

for_json=for_json,

|

||||

ignore_nan=ignore_nan,

|

||||

**kw).iterencode(obj)

|

||||

# could accelerate with writelines in some versions of Python, at

|

||||

# a debuggability cost

|

||||

for chunk in iterable:

|

||||

fp.write(chunk)

|

||||

|

||||

|

||||

def dumps(obj, skipkeys=False, ensure_ascii=True, check_circular=True,

|

||||

allow_nan=True, cls=None, indent=None, separators=None,

|

||||

encoding='utf-8', default=None, use_decimal=True,

|

||||

namedtuple_as_object=True, tuple_as_array=True,

|

||||

bigint_as_string=False, sort_keys=False, item_sort_key=None,

|

||||

for_json=False, ignore_nan=False, **kw):

|

||||

"""Serialize ``obj`` to a JSON formatted ``str``.

|

||||

|

||||

If ``skipkeys`` is false then ``dict`` keys that are not basic types

|

||||

(``str``, ``unicode``, ``int``, ``long``, ``float``, ``bool``, ``None``)

|

||||

will be skipped instead of raising a ``TypeError``.

|

||||

|

||||

If ``ensure_ascii`` is false, then the return value will be a

|

||||

``unicode`` instance subject to normal Python ``str`` to ``unicode``

|

||||

coercion rules instead of being escaped to an ASCII ``str``.

|

||||

|

||||

If ``check_circular`` is false, then the circular reference check

|

||||

for container types will be skipped and a circular reference will

|

||||

result in an ``OverflowError`` (or worse).

|

||||

|

||||

If ``allow_nan`` is false, then it will be a ``ValueError`` to

|

||||

serialize out of range ``float`` values (``nan``, ``inf``, ``-inf``) in

|

||||

strict compliance of the JSON specification, instead of using the

|

||||

JavaScript equivalents (``NaN``, ``Infinity``, ``-Infinity``).

|

||||

|

||||

If ``indent`` is a string, then JSON array elements and object members

|

||||

will be pretty-printed with a newline followed by that string repeated

|

||||

for each level of nesting. ``None`` (the default) selects the most compact

|

||||

representation without any newlines. For backwards compatibility with

|

||||

versions of simplejson earlier than 2.1.0, an integer is also accepted

|

||||

and is converted to a string with that many spaces.

|

||||

|

||||

If specified, ``separators`` should be an

|

||||

``(item_separator, key_separator)`` tuple. The default is ``(', ', ': ')``

|

||||

if *indent* is ``None`` and ``(',', ': ')`` otherwise. To get the most

|

||||

compact JSON representation, you should specify ``(',', ':')`` to eliminate

|

||||

whitespace.

|

||||

|

||||

``encoding`` is the character encoding for str instances, default is UTF-8.

|

||||

|

||||

``default(obj)`` is a function that should return a serializable version

|

||||

of obj or raise TypeError. The default simply raises TypeError.

|

||||

|

||||

If *use_decimal* is true (default: ``True``) then decimal.Decimal

|

||||

will be natively serialized to JSON with full precision.

|

||||

|

||||

If *namedtuple_as_object* is true (default: ``True``),

|

||||

:class:`tuple` subclasses with ``_asdict()`` methods will be encoded

|

||||

as JSON objects.

|

||||

|

||||

If *tuple_as_array* is true (default: ``True``),

|

||||

:class:`tuple` (and subclasses) will be encoded as JSON arrays.

|

||||

|

||||

If *bigint_as_string* is true (not the default), ints 2**53 and higher

|

||||

or lower than -2**53 will be encoded as strings. This is to avoid the

|

||||

rounding that happens in Javascript otherwise.

|

||||

|

||||

If specified, *item_sort_key* is a callable used to sort the items in

|

||||

each dictionary. This is useful if you want to sort items other than

|

||||

in alphabetical order by key. This option takes precendence over

|

||||

*sort_keys*.

|

||||

|

||||

If *sort_keys* is true (default: ``False``), the output of dictionaries

|

||||

will be sorted by item.

|

||||

|

||||

If *for_json* is true (default: ``False``), objects with a ``for_json()``

|

||||

method will use the return value of that method for encoding as JSON

|

||||

instead of the object.

|

||||

|

||||

If *ignore_nan* is true (default: ``False``), then out of range

|

||||

:class:`float` values (``nan``, ``inf``, ``-inf``) will be serialized as

|

||||

``null`` in compliance with the ECMA-262 specification. If true, this will

|

||||

override *allow_nan*.

|

||||

|

||||

To use a custom ``JSONEncoder`` subclass (e.g. one that overrides the

|

||||

``.default()`` method to serialize additional types), specify it with

|

||||

the ``cls`` kwarg. NOTE: You should use *default* instead of subclassing

|

||||

whenever possible.

|

||||

|

||||

"""

|

||||

# cached encoder

|

||||

if (not skipkeys and ensure_ascii and

|

||||

check_circular and allow_nan and

|

||||

cls is None and indent is None and separators is None and

|

||||

encoding == 'utf-8' and default is None and use_decimal

|

||||

and namedtuple_as_object and tuple_as_array

|

||||

and not bigint_as_string and not sort_keys

|

||||

and not item_sort_key and not for_json

|

||||

and not ignore_nan and not kw):

|

||||

return _default_encoder.encode(obj)

|

||||

if cls is None:

|

||||

cls = JSONEncoder

|

||||

return cls(

|

||||

skipkeys=skipkeys, ensure_ascii=ensure_ascii,

|

||||

check_circular=check_circular, allow_nan=allow_nan, indent=indent,

|

||||

separators=separators, encoding=encoding, default=default,

|

||||

use_decimal=use_decimal,

|

||||

namedtuple_as_object=namedtuple_as_object,

|

||||

tuple_as_array=tuple_as_array,

|

||||

bigint_as_string=bigint_as_string,

|

||||

sort_keys=sort_keys,

|

||||

item_sort_key=item_sort_key,

|

||||

for_json=for_json,

|

||||

ignore_nan=ignore_nan,

|

||||

**kw).encode(obj)

|

||||

|

||||

|

||||

_default_decoder = JSONDecoder(encoding=None, object_hook=None,

|

||||

object_pairs_hook=None)

|

||||

|

||||

|

||||

def load(fp, encoding=None, cls=None, object_hook=None, parse_float=None,

|

||||

parse_int=None, parse_constant=None, object_pairs_hook=None,

|

||||

use_decimal=False, namedtuple_as_object=True, tuple_as_array=True,

|

||||

**kw):

|

||||

"""Deserialize ``fp`` (a ``.read()``-supporting file-like object containing

|

||||

a JSON document) to a Python object.

|

||||

|

||||

*encoding* determines the encoding used to interpret any

|

||||

:class:`str` objects decoded by this instance (``'utf-8'`` by

|

||||

default). It has no effect when decoding :class:`unicode` objects.

|

||||

|

||||

Note that currently only encodings that are a superset of ASCII work,

|

||||

strings of other encodings should be passed in as :class:`unicode`.

|

||||

|

||||

*object_hook*, if specified, will be called with the result of every

|

||||

JSON object decoded and its return value will be used in place of the

|

||||

given :class:`dict`. This can be used to provide custom

|

||||

deserializations (e.g. to support JSON-RPC class hinting).

|

||||

|

||||

*object_pairs_hook* is an optional function that will be called with

|

||||

the result of any object literal decode with an ordered list of pairs.

|

||||

The return value of *object_pairs_hook* will be used instead of the

|

||||

:class:`dict`. This feature can be used to implement custom decoders

|

||||

that rely on the order that the key and value pairs are decoded (for

|

||||

example, :func:`collections.OrderedDict` will remember the order of